Back before COVID-19, Chicago Booth’s Sanjog Misra was on vacation in Italy with his son, who has a severe nut allergy. They were at a restaurant and couldn’t read the menu, so Misra opened an app that translates Italian to English, and pointed his phone at the menu to find out if one of the dishes was peanut free. The app said it was.

But as he prepared to order, Misra had a thought: Since the app was powered by machine learning, how much could he trust its response? The app didn’t indicate if the conclusion was 99 percent certain to be correct, or 80 percent certain, or just 51 percent. If the app was wrong, the consequences could be dire for his son.

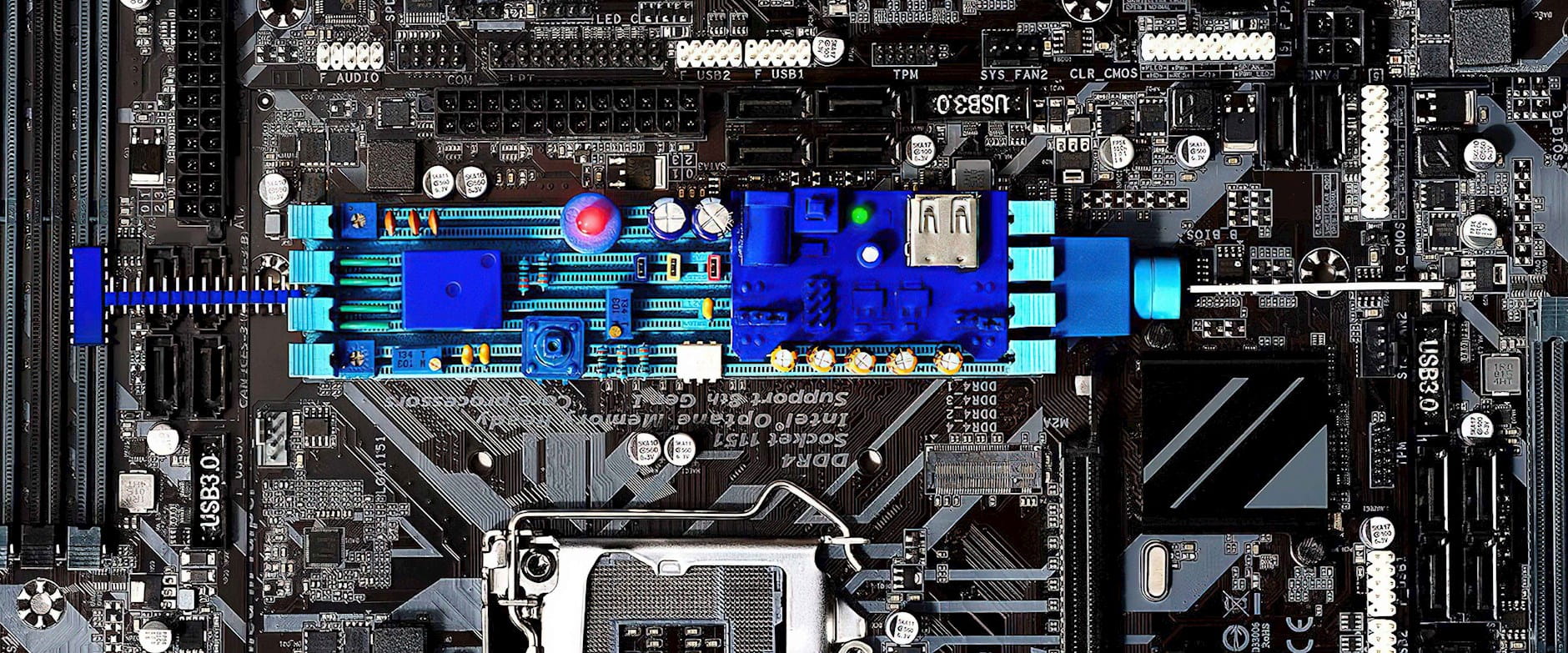

Machine learning is increasingly ubiquitous. It’s inside your Amazon Alexa. It directs self-driving cars. It makes medical decisions and diagnoses. In the past few years, machine-learning methods have come to dominate data analysis in academia and industry. Some teachers are using ML to read students’ assignments and grade homework. There is evidence that machine learning outperforms dermatologists at diagnosing skin cancer. Researchers have used ML to mine research papers for information that could help speed the development of a COVID-19 vaccine.

They’re also using ML to predict the shapes of proteins, an important factor in drug development. “All of our work begins in a computer, where we run hundreds of millions of simulations. That’s where machine learning helps us find things quickly,” says Ian Haydon, the scientific communications manager for the Institute for Protein Design at the University of Washington. Some scientists there are developing vaccines, and others are trying to develop a drug compound that would stop the novel coronavirus from replicating. This involves finding proteins that, due to their shape, can bind to a coronavirus molecule and shut it down. Technology can be used to test thousands upon thousands of hypotheses about what might stop the virus. “The beauty of ML is it can detect patterns, figure out connections on its own that you didn’t tell it to look for,” says Haydon. “It can be a very useful tool to get over your own biases.”

ML allows researchers to work with large amounts of complex data and use these data to tackle new questions. But like any tool, it can be used wisely or badly, and it’s imperfect in a particular way. The answers that ML generates all involve some degree of uncertainty, which affects (or should affect) how confident people can be in the results. You might trust an ML algorithm to predict the weather, recognizing that in most cases an inaccurate prediction wouldn’t be a tragedy. But would you trust a different ML algorithm to drive you to the airport or to fly a plane? You might trust your doctor if she recommends a vaccine, and at this point, any vaccine or COVID-19-related drug would need to go through testing and clinical trials. But would you trust a vaccine if it were, one day, recommended by an ML algorithm rather than having been subjected to long trials? Would you want to know more about the probabilities involved, or get a confidence interval of some kind?

The implications are increasingly important as people assign ML more and more-varied tasks. Researchers are seeking to understand the calculations better as they advance the field and find the limits of what machine learning can and can’t—indeed shouldn’t—do. “Machine learning can’t be applied simplemindedly,” says Booth’s Tetsuya Kaji, an econometrician and statistician.

Finding patterns, making predictions

ML is in some sense just statistics with a different name—but many people see it as a distinct branch that grew out of an effort to realize artificial intelligence, the study of getting computers to do tasks that humans have traditionally performed. While the human brain is special and can make sense of the world in ways computers cannot—at least not yet—computers can still do a lot, including reason. And with ML, machines can learn from examples instead of specific human instructions.

Powered by models borrowed from the field of statistics, ML can analyze data, use them to make predictions, and from there, learn to make even better ones. Thus, people use it to translate information, classify photos, and execute other complex tasks.

Statistics is, at its core, a scientific way of making decisions using data. What’s the probability that something will happen? You want to know this when deciding whether to, say, invest money, step into a self-driving car, or be administered a new vaccine.

Researchers are trying, with theory and data, to measure uncertainty with probability, in much the same way so many of us measure temperature with a thermometer.

An ML algorithm helps by finding patterns in data. Many times, an algorithm receives example inputs and desired outputs, then learns to map inputs to outputs. Other times, the algorithm doesn’t receive data that are labeled, but has to find its own structure.

ML can find patterns in satellite images, restaurant reviews, earnings calls, and more. It “can deal with unconventional data that is too high-dimensional for standard estimation methods, including image and language information that we conventionally had not even thought of as data we can work with, let alone include in a regression,” write Booth’s Sendhil Mullainathan and Stanford’s Jann Spiess, whose research points to many interesting prediction problems in economics for which ML is useful. “Machine learning provides a powerful tool to hear, more clearly than ever, what the data have to say.”

Booth’s Dacheng Xiu uses ML to predict financial-market movements, by mining market data alongside other sources including text from news reports. Harvard’s Zheng Tracy Ke, Xiu, and Yale’s Bryan T. Kelly wrote a model that essentially automatically generates a dictionary of relevant words frequently associated with positive or negative returns. They analyzed more than 22 million articles published from 1989 to 2017 by Dow Jones Newswires, screening for the words; then isolated and weighted terms most likely to predict a stock’s future price; and finally gave articles sentiment scores. (For more, read “Text-Reading Machines Can Predict Share Prices.”)
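To make the idea of a weighted word dictionary concrete, here is a toy sketch in Python. It is not the researchers' model: the words, the weights, and the `sentiment_score` helper are all invented for illustration, and a real system would learn its weights from returns data rather than have them written by hand.

```python
import re

# Hypothetical word weights: positive values stand for words assumed to be
# associated with positive returns, negative values for the opposite.
# In practice these would be learned from data, not written by hand.
WORD_WEIGHTS = {
    "beat": 0.8,
    "upgrade": 0.6,
    "growth": 0.4,
    "lawsuit": -0.7,
    "downgrade": -0.6,
    "loss": -0.5,
}


def sentiment_score(article: str) -> float:
    """Average the weights of dictionary words found in the article.

    Returns 0.0 when no dictionary word appears, meaning "no signal".
    """
    words = re.findall(r"[a-z]+", article.lower())
    hits = [WORD_WEIGHTS[w] for w in words if w in WORD_WEIGHTS]
    return sum(hits) / len(hits) if hits else 0.0


good_news = "Analysts upgrade the stock after earnings beat forecasts."
bad_news = "Shares fall on a lawsuit and a wider quarterly loss."
print(sentiment_score(good_news), sentiment_score(bad_news))
```

The first headline scores above zero and the second below it. An article with no dictionary words scores exactly zero, which is one simple way of saying the dictionary has nothing to report.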

What else data patterns can reveal

Some prediction problems are harder than others, however—and this is where uncertainty can creep in. Say a portfolio manager at a small hedge fund wants to know whether the S&P 500 will go up tomorrow. ML predicts this by looking for factors that matter, identifying patterns in data. Algorithms are trained using vast quantities of data and can learn to anticipate market movements. But it’s one thing to answer this question when only the small fund is planning to change its usual trading pattern. If a big fund makes a trade or changes its behavior in a way that has the potential to move the market, this will complicate the picture and any predictions about market direction.

Besides prediction, ML can tackle questions having to do with inference. Think of a typical housing market, says Booth’s Max Farrell. If there’s a house you’re interested in buying, how should you come up with a reasonable price to offer? A real-estate agent often comes up with a number, a prediction, but an ML algorithm can do that too, by finding patterns in real-estate data. If the house you like is bigger than average, it’s probably also more expensive—your realtor may say that a rule of thumb to follow in your area is that for every 1,000 square feet in a house, the price goes up $200,000. But how sure are you about that correlation? That’s a question of inference, and ML can address it.
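The difference between a prediction and an inference can be sketched with a few lines of Python. The example below assumes synthetic data, invented for illustration, in which price rises by about $200 per square foot (the rule of thumb above) plus noise. It estimates that slope by ordinary least squares and attaches an approximate 95 percent interval. The function name `slope_with_interval` and every number are assumptions, not anything drawn from real housing records.

```python
import math
import random

random.seed(0)

# Synthetic data, invented for illustration: 200 houses whose price rises
# by about $200 per square foot (the rule of thumb in the text), plus noise.
TRUE_SLOPE = 200.0
sizes = [random.uniform(800, 4000) for _ in range(200)]
prices = [50_000 + TRUE_SLOPE * s + random.gauss(0, 60_000) for s in sizes]


def slope_with_interval(x, y, z=1.96):
    """Least-squares slope of y on x with an approximate 95% interval.

    Uses the textbook standard error for simple linear regression and a
    normal approximation (z = 1.96), which is reasonable for large samples.
    """
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residual_ss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    std_err = math.sqrt(residual_ss / (n - 2) / sxx)
    return slope, slope - z * std_err, slope + z * std_err


slope, low, high = slope_with_interval(sizes, prices)
print(f"Estimated price per square foot: ${slope:.0f} "
      f"(95% interval ${low:.0f} to ${high:.0f})")
```

The single number is the prediction-style answer. The interval around it is the inference: with fewer houses or noisier prices, it widens, and the rule of thumb deserves less trust.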

Some researchers do this by creating parameters, essentially establishing the lines of a box within which the answer is likely to be. Booth’s Panos Toulis has been working with astrophysicists to detect exoplanets, which orbit stars outside of our solar system. A planet orbiting a star can be detected as a slight oscillation in data collected through spectrographs attached to telescopes, but is any given oscillation a sign of an exoplanet, or just noise? If researchers incorrectly infer there is a planet, NASA might waste billions of dollars designing and building equipment to study it. It would be helpful to know the level of uncertainty involved before committing substantial funds.

Toulis proposes a method to ascertain this by eliminating unnecessary assumptions. He doesn’t like speculating: “If your data don’t have enough power to speak, they shouldn’t speak,” he says. His method is, very broadly, almost like tuning an instrument or finding a station on a radio—you look for the limits of a few variables beyond which the data look implausible. This approach is more computationally intensive but generally requires fewer assumptions than typical approaches.

He applied the same method to the question of the prevalence of COVID-19 in the population. (See “What percentage of the population has contracted COVID-19?” Fall 2020 and online.) Looking at studies conducted in Santa Clara, California, and New York, he applied his method to test whether the data were consistent with any possible hypothesis of unknown disease prevalence. Doing this, he demonstrated that the traditional methods are usually “overconfident,” as they focus on what is most probable, and ignore less-probable possibilities, which sometimes are also consistent with the available data.
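The general idea of checking every candidate value against the data, and keeping all that survive, can be illustrated with a deliberately simplified sketch. This is not Toulis's procedure, which among other things has to account for the error rates of the tests themselves. It is a plain exact binomial test applied to a made-up survey, and the function names are assumptions chosen for the example.

```python
from math import comb


def binom_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k positives among n people at prevalence p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def two_sided_p_value(k: int, n: int, p: float) -> float:
    """Exact binomial p-value: twice the smaller tail, capped at 1."""
    lower = sum(binom_pmf(i, n, p) for i in range(0, k + 1))
    upper = sum(binom_pmf(i, n, p) for i in range(k, n + 1))
    return min(1.0, 2 * min(lower, upper))


def plausible_prevalences(k, n, alpha=0.05, grid_size=1000):
    """Keep every candidate prevalence that the data do not reject."""
    grid = [i / grid_size for i in range(grid_size + 1)]
    return [p for p in grid if two_sided_p_value(k, n, p) >= alpha]


# Made-up survey: 6 positives among 200 people tested.
kept = plausible_prevalences(k=6, n=200)
print(f"Prevalence values consistent with the data: "
      f"{min(kept):.3f} to {max(kept):.3f}")
```

Reporting only the most probable value (here 3 percent) would hide the fact that a range of other values also fits the data. Reporting the whole surviving range is slower to compute but avoids that overconfidence.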

Identifying causal effects

But establishing a correlation is, famously, not the same as pinning down a causal relationship. Think again about house prices. Imagine that your real-estate agent’s rule of thumb is valid: that in your area, for every 1,000 square feet in a house, the price goes up $200,000. If you add a 1,000 sq. ft. addition to a house, will that push up the price by $200,000? Even if it does, did the extra square footage push up the house price in itself, or was it the additional bathrooms and bedrooms? Causal inference is about that leap, moving from a pattern you know is there to one that’s more causally related.

The gold standard in causal questions is a controlled study. To investigate what a drug does in the body, medical researchers conduct trials, assigning a drug to some randomly selected patients while giving others a placebo.
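A small simulation shows why random assignment is so valuable. The sketch below assumes a hypothetical drug with a true effect of 2.0 on some outcome. That number is made up and would be unknown in a real trial. Because a coin flip decides who is treated, a simple difference in group averages recovers the effect, along with a standard error that says how precisely.

```python
import math
import random
import statistics

random.seed(1)

TRUE_EFFECT = 2.0  # Assumed for the simulation; unknown in a real trial.

treated, control = [], []
for _ in range(4000):
    baseline = random.gauss(10, 1)   # Outcome the patient would have anyway.
    if random.random() < 0.5:        # Coin flip decides who gets the drug.
        treated.append(baseline + TRUE_EFFECT)
    else:
        control.append(baseline)     # Placebo group: no effect added.

effect = statistics.mean(treated) - statistics.mean(control)
std_err = math.sqrt(
    statistics.variance(treated) / len(treated)
    + statistics.variance(control) / len(control)
)
print(f"Estimated effect: {effect:.2f} +/- {1.96 * std_err:.2f}")
```

If sicker patients had been more likely to receive the drug, the same subtraction would mix the drug's effect with the difference in baseline health. Randomization removes that mix.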

But real life can be messier, and such controlled trials aren’t always possible. Policy issues are an example: consider the US programs of Medicare and Social Security. Some officials have proposed further raising the retirement age (scheduled to increase to 67 by 2022) to avoid future deficits. Could ML predict the effects with a high degree of certainty?

To approach such questions, says Booth’s Kaji, we need to be able to predict how the elderly will change their behavior under the new policy, which is a causal question. Many studies have documented that retired people decumulate their wealth at a puzzlingly low rate. Is that because they want to leave money to heirs? Or are they uncertain about how long they’ll live, so are trying not to be caught with less money than they need? Or are they concerned about rising medical expenses? The actual reason is likely a combination of many factors, including these, but without knowing it, it’s impossible to effectively predict what the new policy would bring about. Kaji and his coresearchers, NYU’s Elena Manresa and University of Chicago Harris School of Public Policy’s Guillaume Pouliot, have applied a method called adversarial estimation to infer the parameters of the economic model that describes the elderly’s behavior.

The method is inspired by a recent development in ML that gave computers the ability to generate realistic images that they have not seen before, he says. When computers can search for a way to generate realistic data as complicated as images, they can also be used to search for an economic model that generates realistic data as complicated as the elderly’s saving decisions. (For more on how the method works, see “Can automated art forgers become economists?”)

Acknowledging the uncertainty

In all of this, the potential inaccuracy of ML is crucial. Booth’s Misra kept that in mind when he used it to translate the menu, and it was up to him to decide whether to override the app’s conclusions. But if an ML algorithm is tasked with driving a car and screws up, it can cause an accident. If it mispredicts the effects of a policy change, people could be financially stressed, or the policy could backfire in some other way. If ML misidentifies the structure of a protein in the search for a treatment for COVID-19, lives could be lost.

There are two primary ways ML can make mistakes. First, a model that’s created can fit an existing data set perfectly but fail to generalize well to other data. A model trying to classify images may be trained on a data set of 10,000 images and learn to classify images in that data set with 99 percent accuracy—but then perform only half as well when asked to analyze a different set of images.
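The first failure can be reproduced in miniature. The sketch below uses a nearest-neighbor rule, chosen here only because it memorizes its training data completely, on synthetic points whose labels are deliberately flipped 20 percent of the time. All names and numbers are assumptions made for the example.

```python
import random

random.seed(2)


def make_data(n, noise=0.2):
    """Points on [0, 1]; the true label is x > 0.5, flipped 20% of the time."""
    data = []
    for _ in range(n):
        x = random.random()
        label = x > 0.5
        if random.random() < noise:
            label = not label
        data.append((x, label))
    return data


def nearest_neighbor_predict(train, x):
    """Copy the label of the closest training point (pure memorization)."""
    return min(train, key=lambda point: abs(point[0] - x))[1]


def accuracy(train, data):
    """Share of points in `data` that the memorizing rule labels correctly."""
    correct = sum(nearest_neighbor_predict(train, x) == y for x, y in data)
    return correct / len(data)


train = make_data(300)
test = make_data(300)
train_acc = accuracy(train, train)
test_acc = accuracy(train, test)
print(f"Accuracy on training data: {train_acc:.2f}, on new data: {test_acc:.2f}")
```

On its own training points the rule looks perfect, because each point is its own nearest neighbor. On fresh points it does noticeably worse, since it has memorized the noise along with the pattern.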

A second problem is overconfidence. ML can look at a data set and provide an answer as to whether, say, a given image is of a dog. However, it won’t convey how certain it is of its answer, which is crucial. If a person driving at night has trouble seeing, she may slow down to read a sign, but a machine not registering uncertainty will just plow ahead. If the machine’s confidence interval is plus or minus 1 percent, that’s one thing, and a passenger would need to feel comfortable with that amount of risk. If it’s plus or minus 75 percent, getting in that car at night would be foolhardy and dangerous. “Imagine if it says 80 percent confident that this is nut free and 20 percent that it has nuts. That would be nice rather than hearing it’s nut free,” says Misra. “That’s the most dangerous part, not accounting for uncertainty.”
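What Misra describes, an answer that arrives with its probability attached, is easy to sketch. The example below assumes a hypothetical classifier that produces raw scores for each label. It converts them to probabilities with a softmax and flags the answer as uncertain when the top probability falls below a threshold. The scores, the 95 percent threshold, and the `report` helper are all made up, and in practice such probabilities often need further calibration before they can be taken at face value.

```python
import math


def softmax(scores):
    """Turn raw model scores into probabilities that sum to 1."""
    peak = max(scores.values())  # Subtract the max for numerical stability.
    exps = {label: math.exp(s - peak) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def report(scores, threshold=0.95):
    """Return the top label with its probability, or flag it as uncertain."""
    probs = softmax(scores)
    label = max(probs, key=probs.get)
    if probs[label] < threshold:
        return f"uncertain: {label} ({probs[label]:.0%})"
    return f"{label} ({probs[label]:.0%})"


# Hypothetical raw scores from a classifier reading a menu item.
print(report({"nut free": 2.0, "contains nuts": 0.6}))
# prints "uncertain: nut free (80%)"
```

Where to set the threshold is a judgment about consequences rather than a statistical fact. A parent of a child with a severe allergy would reasonably set it far higher than someone choosing a film.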

We often base our decisions on predictions of future outcomes that never come with absolute certainty. If ML is a black box that outputs a number, that number is one in a range of values within which the truth is most likely contained. To improve machine-assisted decision-making in real life, statisticians are trying, with theory and data, to quantify the uncertainty of ML predictions. They measure uncertainty with probability in much the same way we measure temperature with a thermometer, Booth’s Veronika Ročková explains. How this is done depends on the type of ML method involved, of which there are a variety, including decision trees, random forests, deep neural networks, Bayesian additive regression trees (BART), and the least absolute shrinkage and selection operator (LASSO). (While these sound complicated, and can be, decision trees are essentially detailed flowcharts that start with a root, create branches, and end with leaves.)

If we understand why a black-box method works, we can trust it more with our decisions, explains Ročková, one of the researchers trying to narrow the gap between what’s done in practice and what’s known in theory. Booth’s Christian B. Hansen, often working with MIT’s Victor Chernozhukov, was one of the first to show how LASSO could be used to solve questions related to inference and causal inference, giving people more confidence to use and trust it. BART is a widely used ML method, available as free software, and Ročková’s research was the first to show theoretically why it works. Her research on uncertainty quantification for Bayesian ML won her a prestigious National Science Foundation award for early-career researchers.

ML, she says, is “a wonderful toolbox, but for people to feel safe using it, we need to better understand its strengths and limitations. For example, there have been studies where ML was shown to suffer from a lack of reproducibility. There is still a long way to go before we can delegate our decisions entirely to machine intelligence.”

Researchers are also testing their theories empirically. Chicago Booth’s Max Farrell, Tengyuan Liang, and Misra, in a paper about deep learning (a subset of ML that gives a particularly precise picture of even complex data), theoretically quantified the uncertainty involved in a predictive problem. Studying deep neural networks, they examined how deep-learning methods work.

To illustrate their findings, they compared their theoretical results with an actual corporate decision. A large US consumer products company sent out catalogs to boost sales. When it sent out 200,000 catalogs, an average of 6 percent of people made a purchase within three months and spent an average of $118 each. The researchers compared these results with those of eight deep-learning models and evaluated how close each came to predicting what actually happened.

“We have a truth out there, how people behave. We want to approximate people’s behaviors,” says Misra. “The first question is whether the deep-learning model can approximate the truth. The second is, how close is the approximation?” They measured closeness by determining the smallest number of data needed to produce the right answer, within a margin of error.

How many data did they need to arrive at an answer that is, with reasonable certainty, correct? The answer depends on what a company is trying to do and find out, but the researchers provide theoretical guidance. “We’re confident now that the amount of data we used in that application is enough,” says Farrell. “There’s still uncertainty, but we’re happy with what we found.” (For more, read “How (In)accurate Is Machine Learning?”) The results, the researchers assert, will help companies compare and evaluate ML strategies they may want to use in their various decisions.

How close is too close?

Ultimately, ML is a tool that supports decision-making. Part of what makes it complicated to assess the uncertainty involved in ML is that the line is blurring between who or what is actually doing individual tasks, and how those tasks contribute to the final decision.

Many nonmathematical tasks inform a decision. Think about the search for a vaccine, which involves many separate tasks. Not long ago, researchers hunting for relevant information in scientific literature might have read it themselves. Many still do, but some have assigned ML this task. Similarly, ML can identify protein shapes of interest. Thanks to the complexity of the human body, there’s currently no substitute for testing a vaccine with a clinical trial. But ML may one day be able to comb through data from all clinical studies ever conducted and use them to predict health outcomes, potentially accelerating the clinical-trial process. “You’re running up against the boundary of how people think about using statistics to make decisions,” says Farrell. “Machine learning may be moving that boundary in ways we’re not 100 percent sure of. By changing the tools available, we’re changing how those decisions can get made.”

There is uncertainty involved in every task. When reading through thousands of scientific papers for relevant information, is ML overlooking anything important? When identifying protein shapes, is ML missing any? While the study of ML makes it increasingly accurate, every time ML is assigned a task it hasn’t done before, this introduces new possibilities for uncertainty.

Moreover, as ML moves closer and closer to delivering accurate answers, many questions in the field have to do with whether ML is too accurate. Privacy advocates, among others, worry that ML could be so effective at finding patterns that it could discover information we don’t want to share, and use that to help companies discriminate, even accidentally. Say a company can tell from cell-phone data the angle at which a phone is being carried, and that could be a proxy for gender (as many men keep phones in their pockets while women carry phones in purses). Could it then use information about gender to perhaps charge men and women different prices? In 2019, a developer noticed that the credit limit offered him by the Goldman Sachs–issued Apple Card was far higher than that offered his wife, with whom he filed joint tax returns and shared finances. In a tweet, he called the Apple Card sexist and suspected a biased algorithm. The New York State Department of Financial Services opened an inquiry.

Is it possible to prove mathematically what ML can and cannot learn? And if a business wants customers to know it’s behaving ethically, and to trust it, how can it convince them it’s unable to use ML to violate their privacy? These are issues that companies are trying to sort out before regulators step in and write rules for them.

It’s also an issue that straddles mathematical and social realms—and complicates the bigger question about ML accuracy. Aside from how close ML is getting to delivering solid answers, how close do we want it to get? That’s a subjective question for which there can be no entirely accurate answer.

In the case of Misra, who was in a restaurant in Italy, wondering whether to trust his son’s health to a translation app, what he wanted above all was accurate information. And there were certain words he knew that matched the ML translation, which pushed him toward trusting it. “We did end up using the app, and it was fantastic,” he says. In that restaurant, and others during their travels, he and his son were able to translate menus, write their concerns in Italian to make sure the staff understood, and avoid an allergic reaction. “We wouldn’t have been able to function, in terms of making informed choices about food, as well as we did without the app,” says Misra.

In the moment, he recognized and trusted ML. But as ML becomes increasingly pervasive, many people may not even recognize that ML is behind the application they’re using. Unlike Misra, they won’t make a conscious decision about whether or not to trust it. They simply will.

- Alexandre Belloni, Daniel L. Chen, Victor Chernozhukov, and Christian B. Hansen, “Sparse Models and Methods for Optimal Instruments with an Application to Eminent Domain,” Econometrica, November 2012.

- Max Farrell, Tengyuan Liang, and Sanjog Misra, “Deep Neural Networks for Estimation and Inference,” Econometrica, forthcoming.

- Tetsuya Kaji, Elena Manresa, and Guillaume Pouliot, “An Adversarial Approach to Structural Estimation,” Working paper, July 2020.

- Zheng Tracy Ke, Bryan T. Kelly, and Dacheng Xiu, “Predicting Returns with Text Data,” NBER working paper, August 2019.

- Sendhil Mullainathan and Jann Spiess, “Machine Learning: An Applied Econometric Approach,” Journal of Economic Perspectives, May 2017.

- Veronika Ročková and Stephanie van der Pas, “Posterior Concentration for Bayesian Regression Trees and Forests,” Working paper, June 2019.

- Panos Toulis, “Estimation of COVID-19 Prevalence from Serology Tests: A Partial Identification Approach,” Journal of Econometrics, forthcoming.
