Eight ways to improve public health

- January 28, 2014

- CBR - Public Policy

One of the biggest threats to global health is not the cost of medical care or the challenge of an aging population. It’s the flu: a pandemic-in-waiting that can easily go undetected.

Back in March 2009, one of the first signs of H1N1 came when clinics in Mexico City noticed a surge in influenza-like cases that the health authorities assumed was “late season flu.” It took more than a month for US and Mexican health authorities to recognize that they were dealing with a deadly strain of the Influenza A virus, a descendant of the very strain that had caused the 1918 flu pandemic, which killed tens of millions of people across the world. Within nine months of those initial cases in Mexico, H1N1 had claimed more than 6,250 lives, and infected more than half a million people.

It was a powerful reminder of the need for public-health officials to act more decisively in the face of pandemics. In June 2013, the World Health Organization (WHO) proposed a new global pandemic alert system, aimed at making sure governments are prepared to contain potential outbreaks.

Mathematical models could help. So could Google. This idea, proposed by two academic researchers, represents something rarely seen in recent debates about health care: empirical solutions.

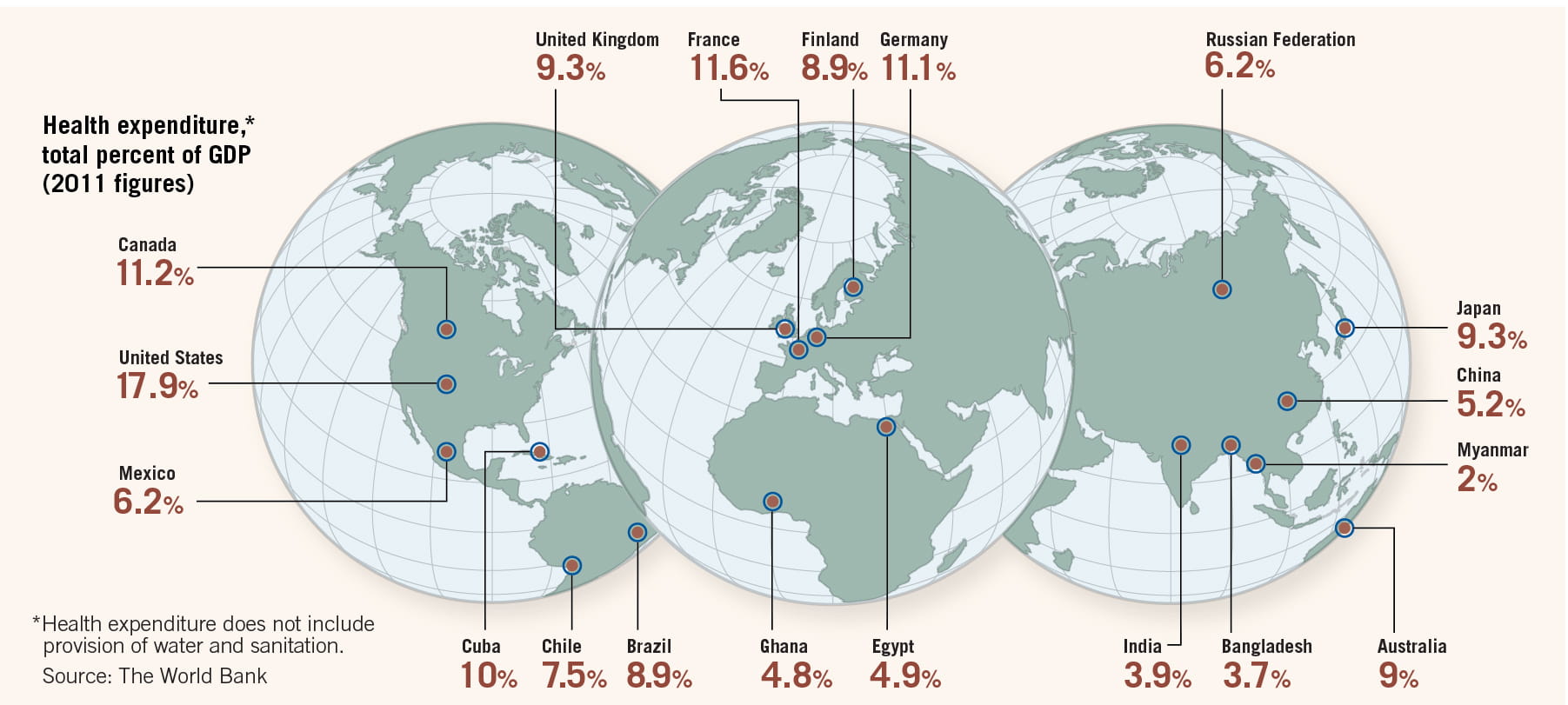

Aging populations in developed countries are pushing up costs to treat chronic illnesses. Expensive new drugs and medical devices are raising questions about who should pay for them, and how much. Worldwide, health-care costs accounted for more than 10% of global gross domestic product in 2011, according to the World Bank. Costs were particularly high in some developed countries—the United States for one, where health care accounted for 18% of GDP. These costs have created political discord. The US 2010 Affordable Care Act, popularly known as Obamacare, attempts to control costs, expand insurance coverage, and improve the health-care delivery system—but has been mired in controversy.

Against this backdrop, empirical research offers steps away from politics and toward better public health. With the benefit of data and statistics, researchers suggest that epidemiologists could contain lethal outbreaks. Doctors could learn how to better serve patients. Organs could be more effectively distributed to people awaiting transplants. Researchers at Chicago Booth offer a number of recommendations for meaningful reform.

In industrialized countries, the flu is often treated as a minor inconvenience, little more than an excuse to take a day off work. But influenza is a major killer, causing severe illness in 3 million–5 million people worldwide each year, and killing 250,000–500,000, according to the WHO. Combined with pneumonia, influenza ranks as the eighth-leading cause of death among people 65 and older in the US, according to the Centers for Disease Control and Prevention (CDC). Fighting the flu is big business, too: the CDC estimates drug companies manufactured 135 million–139 million doses of influenza vaccine for the current flu season, which began in October 2013.

Public-health officials are especially concerned with monitoring and combating large, global outbreaks of influenza such as H1N1. Researchers are now developing a new model for tracking flu epidemics that may help by being faster and more accurate than traditional methods.

The conventional model used by epidemiologists dates to a 1927 framework developed to model the spread of the plague in 17th-century London and an 1865 outbreak of cholera there. This mathematical model was later extended to capture the latency period of the flu, when a person is infected but can’t yet transmit the virus. The extended model became known as SEIR, short for Susceptible, Exposed, Infectious, Recovered, the four categories of people that define an outbreak. SEIR was subsequently refined to capture other specific qualities of influenza.
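To make the four compartments concrete, here is a minimal discrete-time SEIR simulation. It is a sketch under stated assumptions, not the CDC's or the researchers' model: the transmission rate `beta`, the rate `sigma` at which exposed people become infectious, the recovery rate `gamma`, and the population of one million are all illustrative values, and the daily forward-Euler step simplifies the usual differential equations.

```python
from dataclasses import dataclass


@dataclass
class SEIRState:
    s: float  # susceptible
    e: float  # exposed: infected but not yet able to transmit (latent period)
    i: float  # infectious
    r: float  # recovered


def seir_step(state: SEIRState, beta: float, sigma: float, gamma: float, dt: float = 1.0) -> SEIRState:
    """Advance the SEIR equations by one forward-Euler step of dt days."""
    n = state.s + state.e + state.i + state.r
    new_exposed = beta * state.s * state.i / n * dt  # S -> E through contact with infectious people
    new_infectious = sigma * state.e * dt            # E -> I when the latent period ends
    new_recovered = gamma * state.i * dt             # I -> R
    return SEIRState(
        s=state.s - new_exposed,
        e=state.e + new_exposed - new_infectious,
        i=state.i + new_infectious - new_recovered,
        r=state.r + new_recovered,
    )


def simulate(days: int, beta: float = 0.5, sigma: float = 1 / 2, gamma: float = 1 / 3) -> list[SEIRState]:
    # Hypothetical city of 1 million people seeded with 10 infectious cases.
    state = SEIRState(s=999_990.0, e=0.0, i=10.0, r=0.0)
    history = [state]
    for _ in range(days):
        state = seir_step(state, beta, sigma, gamma)
        history.append(state)
    return history


if __name__ == "__main__":
    hist = simulate(days=120)
    peak_day = max(range(len(hist)), key=lambda t: hist[t].i)
    print(f"peak infectious on day {peak_day}: {hist[peak_day].i:,.0f} people")
```

Note that the parameters are fixed for the whole run; that rigidity is exactly what the state-space approach described below relaxes.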

Currently, to track the spread of the flu, the CDC surveys 2,400 sites in 122 cities across all 50 US states, checking reports from doctors, clinics, test laboratories, and public-health departments. The results are then fed into a conventional SEIR model.

But in the internet age, this survey and the SEIR model may be giving way to Google data and a more robust mathematical model.

A 2009 study published in Nature identifies a set of search terms that best predict the CDC’s own flu counts. Top queries included types of flu complications, cold and flu remedies, antibiotic medicines, and specific flu symptoms. The search terms are the foundation of an algorithm called Google Flu Trends, which consistently predicts the CDC’s results one to two weeks ahead of time.
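The Nature paper related the two series with a simple linear fit on the log-odds scale: the log-odds of the share of doctor visits for influenza-like illness (ILI) regressed on the log-odds of the share of searches that are flu-related. The sketch below fits that form by least squares on synthetic weekly data. Every number in it is invented, and the real system also involved choosing which queries to include, which this sketch skips.

```python
import numpy as np


def logit(p):
    return np.log(p / (1.0 - p))


def inv_logit(x):
    return 1.0 / (1.0 + np.exp(-x))


def fit_logit_linear(query_share, ili_share):
    """Least-squares fit of logit(ili_share) = b0 + b1 * logit(query_share)."""
    x = logit(query_share)
    design = np.column_stack([np.ones_like(x), x])
    (b0, b1), *_ = np.linalg.lstsq(design, logit(ili_share), rcond=None)
    return float(b0), float(b1)


def nowcast(query_share, b0, b1):
    """Estimate the current share of ILI doctor visits from this week's search share."""
    return inv_logit(b0 + b1 * logit(query_share))


# Synthetic stand-in data for one 52-week flu season; every number is invented.
rng = np.random.default_rng(0)
weeks = np.arange(52)
query_share = 0.0005 + 0.002 * np.exp(-(((weeks - 20) / 6.0) ** 2))  # flu-related share of searches
ili_share = inv_logit(3.3 + 1.0 * logit(query_share) + rng.normal(0.0, 0.05, weeks.size))

b0, b1 = fit_logit_linear(query_share, ili_share)
print(f"fitted b0={b0:.2f}, b1={b1:.2f}")
print("nowcast for week 20:", nowcast(query_share[20], b0, b1))
```

Because search data are available as soon as the week ends, a fitted relationship like this can produce an estimate before the surveillance reports are compiled, which is the source of the one-to-two-week lead.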

SEIR itself could be improved upon, too. Although the standard model is simple and intuitive, it can’t incorporate important changes that occur during the course of an epidemic, such as the intervention of public-health officials or vaccination patterns. Nicholas Polson, Robert Law Jr. Professor of Econometrics and Statistics at Chicago Booth, along with Hedibert F. Lopes, a former associate professor at Chicago Booth, and Vanja Dukic of the University of Colorado at Boulder, have turned the traditional flu-tracking model into a more flexible model that can incorporate new information. They use a state-space representation, a type of mathematical framework that uses vectors to express variables that change over time.
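A state-space model pairs a hidden state that evolves over time (here, the epidemic's compartments and a transmission rate) with noisy observations of that state. A common way to fit such models as data arrive is a particle filter. The sketch below is a generic bootstrap particle filter, which may differ from the authors' estimation method. It assumes an SIR simplification with a known recovery rate, a transmission rate `beta` whose logarithm drifts as a random walk (standing in for interventions or behavior change), and a weekly flu index that measures the infectious share with log-normal noise. The epidemic, its mid-season drop in `beta`, and all parameter values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
GAMMA = 0.3      # assumed known recovery rate per time step
OBS_SD = 0.1     # assumed noise sd of the log observed infectious share
DRIFT_SD = 0.1   # assumed random-walk sd of log(beta) per step


def sir_step(s, i, beta):
    """Deterministic SIR update on population shares (scalars or arrays)."""
    new_inf = np.minimum(beta * s * i, s)  # cannot infect more people than are susceptible
    return s - new_inf, i + new_inf - GAMMA * i


def simulate_truth(steps=40, switch=15):
    """Hypothetical epidemic whose transmission rate drops at step `switch`."""
    s, i = 0.999, 0.001
    obs = []
    for t in range(steps):
        s, i = sir_step(s, i, 0.6 if t < switch else 0.35)
        obs.append(i * np.exp(rng.normal(0.0, OBS_SD)))  # noisy flu-activity index
    return np.array(obs)


def particle_filter(observations, n_particles=3000):
    """Bootstrap particle filter; returns the filtered mean of beta at each step."""
    s = np.full(n_particles, 0.999)
    i = np.full(n_particles, 0.001)
    log_beta = rng.normal(np.log(0.45), 0.4, n_particles)  # vague, deliberately off-center prior
    beta_means = []
    for obs in observations:
        # State equation: log(beta) drifts, then the SIR shares advance one step.
        log_beta = log_beta + rng.normal(0.0, DRIFT_SD, n_particles)
        s, i = sir_step(s, i, np.exp(log_beta))
        # Observation equation: log(obs) ~ Normal(log(i), OBS_SD^2).
        logw = -0.5 * ((np.log(obs) - np.log(i)) / OBS_SD) ** 2
        w = np.exp(logw - logw.max())
        w /= w.sum()
        beta_means.append(float(np.sum(w * np.exp(log_beta))))
        # Multinomial resampling keeps the particles that best explain the data.
        idx = rng.choice(n_particles, size=n_particles, p=w)
        s, i, log_beta = s[idx], i[idx], log_beta[idx]
    return np.array(beta_means)


if __name__ == "__main__":
    estimates = particle_filter(simulate_truth())
    print("estimated beta, steps 5-14: ", estimates[5:15].mean().round(3))
    print("estimated beta, steps 28-39:", estimates[28:].mean().round(3))
```

Unlike the fixed-parameter SEIR run, the filtered estimate of `beta` can fall after the simulated intervention, which is the kind of flexibility the paragraph above describes.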

Polson, Lopes, and Dukic test their state-space model with the Google Flu Trends data for two US flu seasons, 2003–04 and 2008–09, as well as with data from New Zealand for 2008–09.

Because it can adjust to shifting parameters, the state-space model more accurately represents the actual patterns of illness. The research could give a head start to public-health officials, who can more quickly provide information about the spread of the flu, perhaps by launching a media campaign that urges people to get vaccinated. Intervening sooner could save lives.

Last year the US Food and Drug Administration approved 39 new drugs, a 16-year high. Many people are interested in seeing new drugs adopted, as patients want access to the latest treatments, and drug companies are eager for doctors to adopt their newest drugs because those tend to be the most profitable offerings. But doctors are sometimes slow to prescribe a new drug, preferring to stick with a tried-and-true medication rather than take a chance on an unknown course of treatment.

How can doctors be persuaded to try a new drug? Pradeep K. Chintagunta, Joseph T. and Bernice S. Lewis Distinguished Service Professor of Marketing at Chicago Booth, takes on that question.

Chintagunta is interested in two ways that doctors learn about the effectiveness of new medications: through drug companies’ marketing activities and through their own observations after prescribing the drugs to their patients. He wanted to find out how these two methods interact.

“From a public-policy perspective, there’s a lot of concern about marketing activities directed at physicians,” Chintagunta says. “We’d like to know what exactly is happening.”

Experimenting teaches doctors about the effectiveness and side effects of new drugs, knowledge that benefits both doctor and patient. But if doctors believe drug companies will soon be giving them information and free samples, two opposing shifts in behavior take place. Doctors adopt a new medication more quickly as they learn from the drugmakers, but doctors also reduce their own experimentation, which can slow adoption of the new drug.

Chintagunta, with Ronald Goettler of the University of Rochester and Minki Kim of the Korea Advanced Institute of Science and Technology, examines how these prescription trends play out in the months after the introduction of two erectile-dysfunction drugs, Levitra and Cialis. ED drugs have become a huge market: in 2006, global sales surpassed $3 billion. By 2012, global sales had grown to an estimated $4.3 billion.

The researchers chose these drugs because when a patient is receiving a lifestyle medication rather than a treatment for a life-threatening disease, the risk of malpractice lawsuits is lower, and the willingness to experiment is higher. When Levitra and Cialis were launched in 2003, Viagra had held a monopoly on the market for more than five years.

The researchers use data from approximately 9,900 prescription records written by 957 doctors from August 2003 to May 2004, provided by ImpactRx, a consulting firm that specializes in the pharmaceutical industry. The team looked at physicians who prescribed Viagra at least once before the Levitra and Cialis launches, and they recorded 16,629 visits by drug companies’ sales forces to doctors’ offices.

The study uses a dynamic Bayesian learning model, which predicts how physicians will change their behavior as they learn more about a new drug’s effectiveness through patient feedback or marketing visits. Marketing certainly works: the study finds that among doctors who have been visited by a Levitra sales rep, 46% prescribe the drug to the first erectile-dysfunction patient they see after the drug’s launch. Without a sales call, only 14% of doctors prescribe the new drug.
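At the core of Bayesian learning, each new signal, whether a patient's response or a sales rep's pitch, moves the physician's belief about a drug in proportion to how informative that signal is. The sketch below shows conjugate normal updating. The prior, the signal values, and the assumption that a rep visit is less noisy than a single patient outcome are all hypothetical, and the authors' model is richer (it also treats physicians as forward-looking, as described below).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Belief:
    mean: float      # physician's current estimate of the new drug's efficacy
    variance: float  # uncertainty around that estimate


def update(belief: Belief, signal: float, signal_variance: float) -> Belief:
    """Conjugate normal update: a precision-weighted average of the prior mean and the signal."""
    prior_precision = 1.0 / belief.variance
    signal_precision = 1.0 / signal_variance
    post_precision = prior_precision + signal_precision
    post_mean = (prior_precision * belief.mean + signal_precision * signal) / post_precision
    return Belief(mean=post_mean, variance=1.0 / post_precision)


# Hypothetical: the doctor starts out believing the new drug is worse than the
# incumbent (efficacy 0.70) and is quite unsure about it.
INCUMBENT = 0.70
belief = Belief(mean=0.60, variance=0.04)

# Hypothetical signals: (observed efficacy, noise variance, source)
signals = [
    (0.78, 0.02, "sales-rep visit"),
    (0.66, 0.09, "patient outcome"),
    (0.78, 0.09, "patient outcome"),
]
for value, noise_var, source in signals:
    belief = update(belief, value, noise_var)
    choice = "new drug" if belief.mean > INCUMBENT else "incumbent"
    print(f"after {source}: mean={belief.mean:.3f}, var={belief.variance:.4f} -> {choice}")
```

Each signal shrinks the variance; the mean moves most when the signal is precise relative to the current belief.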

The researchers find most doctors initially perceive the new drugs as inferior to Viagra, despite the fact that all three drugs have similar effectiveness rates. Independent studies show Cialis to be slightly more effective than Viagra, and the average efficacy of Levitra is slightly lower than Viagra’s. Yet over nine months, by April 2004, Cialis became the most prescribed drug to treat erectile dysfunction, with Viagra in second place.

Chintagunta and his coauthors find that doctors are less likely to experiment with new drugs if they believe pharmaceutical companies will soon be sending sales reps to their offices. Physicians face a cost-benefit trade-off: the risk of prescribing a less-effective drug must be weighed against the value of the information physicians receive about a new drug’s efficacy when they prescribe it and observe the treatment outcome. When doctors expect to soon learn more about the new treatment directly from sales reps, the incentive to obtain information through experimentation declines.
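The trade-off can be made concrete with a deliberately simplified two-period calculation, not the authors' dynamic model. Next period the doctor will choose the better of the incumbent and the new drug's updated estimate. Before any signals arrive, that updated estimate is normally distributed with variance equal to the prior variance minus the posterior variance, so the information value of prescribing now is how much one extra patient outcome raises the expected best choice. All numbers below are hypothetical, and `information_value` and the other function names are illustrative.

```python
import math


def normal_pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def normal_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def expected_best(incumbent, mean, spread_var):
    """E[max(incumbent, M)] where M ~ Normal(mean, spread_var) is the doctor's future estimate."""
    if spread_var <= 0.0:
        return max(incumbent, mean)
    sd = math.sqrt(spread_var)
    d = (mean - incumbent) / sd
    return incumbent + (mean - incumbent) * normal_cdf(d) + sd * normal_pdf(d)


def spread_of_future_belief(prior_var, *signal_vars):
    """Variance of the future posterior mean: prior variance minus posterior variance."""
    post_precision = 1.0 / prior_var + sum(1.0 / v for v in signal_vars)
    return prior_var - 1.0 / post_precision


def information_value(incumbent, mean, prior_var, patient_var, rep_var=None):
    """Extra expected efficacy next period from prescribing the new drug to one patient now."""
    rep = () if rep_var is None else (rep_var,)
    with_trial = expected_best(incumbent, mean, spread_of_future_belief(prior_var, patient_var, *rep))
    without_trial = expected_best(incumbent, mean, spread_of_future_belief(prior_var, *rep))
    return with_trial - without_trial


# Hypothetical: incumbent efficacy 0.70; new drug believed to be 0.60 with prior
# variance 0.04; one patient outcome and one rep visit are equally noisy.
params = dict(incumbent=0.70, mean=0.60, prior_var=0.04, patient_var=0.04)
value_alone = information_value(**params)
value_with_rep = information_value(**params, rep_var=0.04)
print(f"information value: {value_alone:.4f} with no rep visit coming, "
      f"{value_with_rep:.4f} when a rep visit is expected")
```

In this setup the expected shortfall on today's patient is 0.10, so a doctor experiments only if the information value, accumulated over future patients, outweighs it. An anticipated rep visit already promises much of that information, so the value of experimenting drops.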

To maximize prescription rates for new medications, they conclude, drug companies should avoid notifying doctors of a big marketing push for a new drug. That way, doctors will continue to experiment, but they also will benefit from the increased information provided by the drugmakers.

“The sooner physicians figure out if a drug works better, the more patients they can help,” says Chintagunta, who, in ongoing research, seeks to move beyond evaluating drugs by their efficacy to analyzing how well doctors match their prescription recommendations to the needs of a particular patient.

Much of the public debate about health care centers on costs. In the US, a key issue has been the cost for individuals of insurance and medical procedures. In the UK, the National Health Service struggles to manage its limited budget. Many industrialized countries face the looming problem of how to pay for the health-care needs of an aging population. But what’s an appropriate amount to pay for medical care?

This question leads Matthew J. Notowidigdo, Neubauer Family Assistant Professor of Economics at Chicago Booth, to consider how the marginal utility of consumption—in other words, the incremental gain from buying more of a product or service—varies with health.

Researchers have tried this exercise before. In the 1990s, Vanderbilt’s W. Kip Viscusi (and various coauthors) asked people how much they would have to be paid to hypothetically be exposed to specific health risks, but that series of papers proved inconclusive. More often, economists fail to consider whether the marginal utility of consumption varies with health. Even research related to long-term-care insurance, annuities, or other products linked to life expectancy typically doesn’t factor in consumers’ health.

Notowidigdo, with Amy Finkelstein of MIT and Erzo F.P. Luttmer of Dartmouth College, seeks to estimate whether people who feel good get more satisfaction from their consumption than those with chronic illnesses.

Intuitively, the researchers say, the results could go in either direction. People may find that as their health declines, they enjoy travel less, but they may find they’re getting more of a personal benefit from buying prepared meals or paying for someone to help them with daily tasks.

The authors use data for 1992 to 2004 from the Health and Retirement Study, conducted by the Institute for Social Research at the University of Michigan. Every two years, the study surveys more than 26,000 Americans age 50 and older, collecting information about work, income, health insurance, disability, and other factors. The study also includes in-depth interviews with participants.

Notowidigdo and his coauthors use a baseline sample of 11,514 people whose health status varies from good to poor. They are especially interested in those who report a decline in health over the course of the study.

They group people in this sample according to household income. (Household income is in this case the average of total annual household income, plus 5% of financial wealth, to account for the fact that elderly people may be spending down their financial savings.) They look only at people who are not currently working, so that a participant’s income won’t be affected by the onset of a chronic illness. They also limit the sample to people who have health insurance, to minimize adjustments to their model for out-of-pocket medical expenses.

To classify people as being in poor health, the researchers look at whether the survey participants report having any of seven chronic diseases: hypertension, diabetes, cancer, heart disease, chronic lung disease, stroke, and arthritis.

One challenge for the researchers: there are few datasets that broadly measure consumption. Therefore, as a proxy, they estimate how the additional benefit of income varies with health, using a measure of subjective well-being from the Health and Retirement Study. That study asked participants whether they agree with the statement, “Much of the time during the past week I was happy.” On average, 87% of the respondents in the researchers’ sample said they agree.

Economists can be skeptical of subjective well-being measurements, preferring to analyze not what people say but what they actually do. But the authors say these measurements should still capture the relationship between declining health and the relative benefit of consuming more.

“We can measure how much less happy people are when they experience the onset of a chronic illness,” Notowidigdo says. “If high-income people become a lot less happy, we can infer that the marginal utility (of consumption) goes down.”

Overall, Notowidigdo and his coauthors find, wealth provides greater benefits to the healthy than to the sick. A one-standard-deviation increase in the number of chronic diseases is associated with a 10%–25% decline in the marginal utility of consumption. In other words, the sicker you are, the less happiness you’ll get from spending more. And the higher your income group, the more your happiness level drops when your health worsens.

In general, people with higher incomes tend to be happier. In the Health and Retirement Study, participants making $50,000 annually were five percentage points less likely to say they were unhappy than participants with half that income. But for people suffering from more than one chronic disease, income has a far smaller effect on happiness: their illnesses eliminate 75% of that income-related happiness bump. (The average number of chronic diseases for a person in the sample was 1.95.)
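The pattern in these paragraphs, an income gradient in happiness that flattens as chronic conditions accumulate, is what an interaction term in a regression captures. The sketch below is illustrative only: it simulates hypothetical respondents (chronic-disease counts drawn to average about 1.96, close to the sample's 1.95), invents the coefficients, and fits a linear probability model of reporting happiness on log income, the number of chronic diseases, and their product. It does not reproduce the authors' specification or estimates.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 200_000

# Hypothetical respondents: log household income and number of chronic diseases (0-7).
log_income = rng.normal(np.log(30_000), 0.7, n)
chronic = rng.binomial(7, 0.28, n)
x_inc = log_income - 10.0  # center log income at 10 (about $22,000)

# Invented data-generating process: income raises the chance of being happy,
# but each chronic disease weakens that effect (negative interaction).
p_happy = 0.80 + 0.05 * x_inc - 0.03 * chronic - 0.015 * x_inc * chronic
happy = rng.random(n) < np.clip(p_happy, 0.0, 1.0)

# Linear probability model: happy ~ 1 + income + chronic + income x chronic
design = np.column_stack([np.ones(n), x_inc, chronic, x_inc * chronic])
coef, *_ = np.linalg.lstsq(design, happy.astype(float), rcond=None)
b0, b_inc, b_chronic, b_inter = coef

slope_healthy = b_inc                # income effect with no chronic disease
slope_sick = b_inc + 2 * b_inter     # income effect with two chronic diseases
print(f"income effect: healthy={slope_healthy:.3f}, two diseases={slope_sick:.3f}")
print(f"share of the income effect lost with two diseases: {1 - slope_sick / slope_healthy:.0%}")
```

A negative interaction coefficient means each additional disease shrinks the payoff from extra income, which is how a lower marginal utility of consumption in poor health shows up in well-being data.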

The results get to the heart of the US health-insurance coverage debate. While the researchers caution that their estimates are inexact, they suggest that the optimal share of medical expenses reimbursed by health insurance should be lowered by 20 to 45 percentage points. Healthy people could get more enjoyment by spending their money on something other than monthly insurance premiums. People who are sick would have to pay a greater share of their medical expenses out of pocket, in exchange for lower premiums, but they wouldn’t derive as much of a benefit from spending that money elsewhere anyway. “The appropriate generosity of Medicaid and Medicare is something we know very little about,” Notowidigdo says. (Medicaid and Medicare are the US government health insurance programs for low-income people and senior citizens, respectively.)

Their results could spur further study about whether various chronic diseases have a similar impact on the benefits of consumption. The researchers say they’re curious about the effects that acute illnesses such as heart attacks—rather than the chronic illnesses (such as diabetes and cancer) that they studied—have on spending and happiness. They also want to know whether the same patterns they see among the Health and Retirement Study participants hold true for younger people. And does the amount of time a person spends working impact the benefits of consumption?

Notowidigdo continues to examine the relationship between physical health and financial well-being. With Finkelstein and coauthors, he is analyzing a database of every hospitalization in the state of California over a 10-year period, merging that information with patient credit reports. (The data are stripped of all personally identifying information, such as Social Security numbers.) They compare the impact of the hospitalization on the credit reports of people with health insurance to those who lack insurance. “So far, we see pretty big negative financial consequences for people who don’t have health insurance,” Notowidigdo says.

Despite the billions of dollars spent each year on cancer research, companies have developed few drugs that prevent cancer from occurring, rather than treat it once diagnosed. Among the notable exceptions are Merck’s Gardasil and GlaxoSmithKline’s Cervarix, vaccines introduced in the past decade that can prevent most cases of cervical cancer, one of the most common cancers in women.

Why aren’t there more cancer-prevention drugs? The structure of the patent system discourages private companies from investing in technologies aimed at prevention and treatment of early-stage disease, according to research by Eric Budish, associate professor of economics at Chicago Booth, with Benjamin N. Roin of Harvard and Heidi Williams of MIT. Roin is a patent law professor, and Williams is a health economist.

Patents give companies incentives to innovate, and those incentives are important when the average cost of bringing a new drug to market is $800 million. When a drug is protected by patent, the patent-holder has the exclusive rights to sell that drug. But once a drug loses that protection, it loses that exclusivity, as well as most of its sales to lower-priced generic copies.

Budish and his coauthors argue that patent design worldwide has a flaw, perhaps an inadvertent one. Governments grant patents for a fixed length of time, commonly twenty years, but firms have legal and strategic incentives to file for patent protection as early as possible, long before they have a commercial product. As a result, inventions that take a long time to bring to market receive much less patent protection than those that come to market quickly.

For cancer drugs, the time it takes for a new product to be commercialized depends largely on the length of its clinical trials. The longer a cancer patient is expected to live, the longer it takes for a clinical trial to demonstrate that a drug improves survival rates. If a new drug is tested on patients who have, on average, six months to live, and the drug is expected to extend life by one month, it doesn’t take long to show whether it works. But suppose a drug company wants to test a hypothetical vaccine to prevent prostate cancer. If the vaccine is given to healthy 20-year-olds in a clinical trial, it will take decades to demonstrate its effectiveness. By then, the patent will have expired.
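The arithmetic behind this argument is simple enough to sketch. Assuming a 20-year term that starts at filing, and (hypothetically) that a firm files a few years before trials begin and spends a year in regulatory review, effective market exclusivity is whatever remains of the term after the trials finish. The sketch also computes the alternative the researchers suggest, a clock that starts at commercialization. The durations and the function name are illustrative assumptions, not figures from the study.

```python
def exclusivity_years(trial_years, years_before_trials=3.0, review_years=1.0,
                      term_years=20.0, clock_starts_at_launch=False):
    """Years of patent protection remaining when a drug reaches the market.

    All default durations are hypothetical. Under the current rule the clock starts
    at filing, so protection shrinks with every year spent in development and trials.
    """
    if clock_starts_at_launch:
        return term_years
    time_to_market = years_before_trials + trial_years + review_years
    return max(0.0, term_years - time_to_market)


# Hypothetical trial lengths, from a quick late-stage trial to a decades-long prevention trial.
for label, trial_years in [("late-stage treatment", 3.0),
                           ("early-stage treatment", 9.0),
                           ("prevention vaccine", 20.0)]:
    now = exclusivity_years(trial_years)
    alt = exclusivity_years(trial_years, clock_starts_at_launch=True)
    print(f"{label:22s} trial {trial_years:4.1f} y: {now:4.1f} y protected "
          f"(vs {alt:.0f} y if the clock started at launch)")
```

Under the filing-date clock, every extra year of testing comes straight out of the protected period, and a long enough trial leaves nothing at all.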

“Drugs that prolong life for the terminally ill are quick to test and get a long patent life,” Budish says. “Drugs aimed at preventing disease in the first place are slower to test and end up getting less—or, in extreme cases, zero—patent protection.”

Therefore, drug companies have stronger incentives to develop late-stage cancer treatments, even though a cancer-prevention drug or a drug aimed at early-stage cancer might be more beneficial to society. The researchers are concerned that prevention drugs come to market only when the public sector, which doesn’t have to worry about patent protection, is willing to finance a clinical trial. Potentially, there are “missing drugs” that could help cancer patients, but they aren’t being developed because drugmakers lack incentives to create them.

Because the pharmaceutical market is global, the researchers can’t show directly how a different patent system would change patterns of drug innovation. They also face a challenge in demonstrating “missing” investment in research and development. To support their argument, they compile evidence to show which types of cancer treatments are being developed and how they are funded.

Budish and his colleagues make a number of findings. Drugs requiring shorter clinical trials receive far more investment from drugmakers. Also, the longer the clinical trial, the more likely it is to be publicly funded.

They find that research and development can be encouraged by using “surrogate endpoints,” which are valid proxies for mortality that speed up clinical trials. For example, cholesterol and blood pressure are surrogates for heart disease. In a trial, if a drug were shown to lower blood pressure, that could be used as evidence it would also reduce death from heart disease. Surrogate endpoints are already used in clinical trials for some types of cancer, and the researchers find more research and development associated with those cancers, especially for early-stage disease.

The researchers also find that of six cancer-prevention technologies commercialized, the four that had private funding relied on surrogate endpoints, and the remaining two were publicly financed. The new cervical-cancer vaccines use as a surrogate endpoint the incidence of the human papilloma virus, or HPV, which causes cervical cancer.

Budish and his colleagues offer three recommendations to encourage development of cancer-prevention drugs and treatments for early-stage cancers. First, they say the patent system should be reformed to give more protection to drugs that take a long time to bring to market. One possibility: the patent clock could start when a drug is commercialized, rather than when a company first files for a patent.

Second, the researchers say government research and development should be directed toward prevention and treating early-stage disease, since private companies have less incentive to invest in those areas. And third, they say a government could allow more drug approvals to be based on statistically valid surrogate endpoints.

Cancer patients could have much to gain from these recommendations. Clinical trials for drugs that treat blood-related cancers such as leukemia have a long history of using blood cell counts and bone marrow measures as surrogate endpoints. When the researchers compare improvements in five-year survival rates for patients with blood cancers such as leukemia with survival rates for patients with nonblood cancers, between 1973 and 2003, they estimate that if surrogate endpoints had been more widely used, the average patient diagnosed with a nonblood cancer in 2003 would have lived a year longer.

Multiply that by the 890,000 cancer patients diagnosed that year, and the distortions in the patent system resulted in 890,000 years of life lost—and that’s just for the people diagnosed in 2003.

Apart from skin cancer, prostate cancer is the most commonly diagnosed cancer among men. One in seven men will be diagnosed with prostate cancer in his lifetime, according to the National Cancer Institute. In the US, the number of estimated new cases this year will exceed 238,000, and nearly 30,000 men will die from the disease.

While men worry about this diagnosis, there are also growing concerns that doctors may be overtreating minimal prostate cancers: tiny, slow-growing tumors that need close monitoring but not intervention. Not only is overtreating such cancers expensive—a robotic surgery system can cost a hospital $2 million to set up—but surgery and radiation can cause serious side effects such as impotence and incontinence. On the other hand, if a larger tumor is missed, the cancer is likely to progress, and the patient’s life may be in jeopardy.

Studies aim to understand when a tumor needs to be treated immediately and when it needs only to be watched—a dilemma described by French researcher Laurent Boccon-Gibod as “identifying the tigers from the pussycats.”

Rodney P. Parker, associate professor of operations management at Chicago Booth, has developed a new model for deciding when prostate tumors need to be surgically treated, collaborating with Arthur Swersey of the Yale School of Management, John Colberg of the Yale Medical School, Ronald Evans of ImpactRx, and Johannes Ledolter of the University of Iowa.

“Distinguishing between the small and insignificant, and the small and significant, cancers is actually very difficult for urologists to do,” says Parker. “What we want to do is provide a tool and a framework for urologists to be able to discern between these.”

Under current protocols, doctors decide whether to treat prostate cancer by using nomograms, prediction tools based on measurements such as the number of cancerous samples found through biopsy and the patient’s level of PSA, a protein made by the prostate gland and found in the blood.

Using computer simulations and probability modeling, the researchers develop a more accurate methodology to distinguish between minimal tumors that do not require surgery, and larger tumors that do. They build a computer model in which the prostate is assumed to be an ellipsoid—a roughly egg-shaped figure—while the tumors are modeled as spheres. Tumors are classified as minimal, small, medium, or large, based on their volume.

For each experiment, the researchers run 10,000 computer simulations, varying the size of the prostate gland and the tumor, as well as the number of needle biopsies performed. In a biopsy, needles are used to collect small tissue samples from the gland for analysis. The researchers simulate biopsies using six, 14, or 20 needles on cancers of various sizes. Then they apply Bayes’ theorem, which updates the probability of an event in light of new evidence. In other words, given what the biopsy needles found, how likely is it that the patient’s tumor is minimal?
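The simulate-then-apply-Bayes procedure can be sketched in a few dozen lines. This is a crude stand-in for the researchers' model, built on assumptions: the prostate is an ellipsoid with hypothetical semi-axes, each tumor is a sphere whose center is placed uniformly at random inside the gland (ignoring tumors that extend past its edge), and each biopsy core is a straight segment of fixed length that starts at a random point in the gland and runs along one axis, whereas real biopsies follow a systematic template. The two tumor radii, the 50/50 prior, and all function names are illustrative.

```python
import numpy as np

AXES = np.array([2.5, 2.0, 1.8])  # hypothetical prostate semi-axes in cm
rng = np.random.default_rng(7)


def sample_in_ellipsoid(n):
    """Uniform points inside the ellipsoid: rejection-sample the unit ball, then stretch it."""
    points = np.empty((0, 3))
    while len(points) < n:
        cand = rng.uniform(-1.0, 1.0, size=(2 * n, 3))
        cand = cand[(cand ** 2).sum(axis=1) <= 1.0]
        points = np.vstack([points, cand * AXES])
    return points[:n]


def positive_core_counts(tumor_radius, n_needles, core_len, trials=20_000):
    """Simulated number of biopsy cores that pass through a spherical tumor, per trial."""
    centers = sample_in_ellipsoid(trials)
    starts = sample_in_ellipsoid(trials * n_needles).reshape(trials, n_needles, 3)
    diff = centers[:, None, :] - starts  # tumor center relative to each core's start
    # Each core runs along +y, so the point on the core nearest the tumor center
    # lies a distance t = clip(dy, 0, core_len) along it.
    t = np.clip(diff[..., 1], 0.0, core_len)
    dist_sq = diff[..., 0] ** 2 + (diff[..., 1] - t) ** 2 + diff[..., 2] ** 2
    return (dist_sq < tumor_radius ** 2).sum(axis=1)


def likelihoods(tumor_radius, n_needles, core_len):
    """Estimated P(k positive cores | tumor size) for k = 0..n_needles."""
    counts = positive_core_counts(tumor_radius, n_needles, core_len)
    return np.bincount(counts, minlength=n_needles + 1) / counts.size


def prob_minimal(k, n_needles, core_len, prior_minimal=0.5,
                 minimal_radius=0.3, significant_radius=0.7):
    """Bayes' theorem: P(tumor is minimal | k of n_needles cores were positive)."""
    p_k_min = likelihoods(minimal_radius, n_needles, core_len)[k]
    p_k_sig = likelihoods(significant_radius, n_needles, core_len)[k]
    numerator = p_k_min * prior_minimal
    denominator = numerator + p_k_sig * (1.0 - prior_minimal)
    return numerator / denominator if denominator > 0 else float("nan")


if __name__ == "__main__":
    for n_needles in (6, 14, 20):
        for core_len in (1.0, 1.5):
            p = prob_minimal(1, n_needles, core_len)
            print(f"{n_needles:2d} cores of {core_len} cm, 1 positive: P(minimal) = {p:.2f}")
```

More and longer cores make the positive-core count differ more sharply between small and large tumors, so the posterior probability moves further from the prior. That is the mechanism behind the needle recommendations that follow.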

Previous research finds that if patients forgo surgery whenever there is a greater than 30% chance that the cancer is minimal, only 7% of minimal tumors would be overtreated, but a full 65% of larger cancers would be overlooked. If that threshold is raised, so that surgery is skipped only when there’s a greater than 60% chance that the cancer is minimal, only 15% of significant cancers would go untreated, but 54% of minimal cancers would be overtreated.

Parker and his colleagues produce results that they say doctors could use to improve their nomograms and prediction models. The researchers recommend that doctors use biopsy needles at least 1.5 cm long, rather than 1 cm. Their results show that the longer needles lead to fewer minimal cancers being treated, while only half as many small but significant tumors are missed.

The study also demonstrates the benefit of increasing the number of needle biopsies performed. With a 20-needle biopsy, a patient would have, nearly every time, a clear picture of whether his cancer is highly likely to be minimal.

The researchers note that their model has limitations. It uses an idealized prostate shape and spherical tumors, rather than the irregular shapes found in real life. They plan to create a 3D computer simulation that captures the actual anatomy of the prostate gland, and they would like to replace their spherical tumor simulations with models of actual tumors. Their work can help doctors determine the best course of action for treating millions of men with cancer.

“By better discriminating the patients who truly need treatments from those who do not, we reduce the unnecessary treatments, and the repercussions of that are very significant,” says Parker. “We hope that in time, [our model] can be further developed into a diagnostic tool that urologists can use.”

Booth’s Parker is also tackling other life-or-death questions, such as how to improve the procedures for organ donations. Across the US, 94,000 people are waiting for a kidney transplant. As kidneys become available from patients who have died, they are allocated to the most appropriate recipient, based on criteria such as blood type and medical urgency, and how long the person has been waiting for a new kidney. But there is a severe shortage of organs. Essentially, the donor kidneys are rationed, and many people die waiting for a transplant.

Parker and Bariş Ata, professor of operations management at Chicago Booth, realized that almost all research on kidney transplants assumed the supply of kidneys was constrained. From that base, researchers then analyzed the best way to allocate a limited number of kidneys. What if, instead, they looked at how hospitals might increase the number of available organs?

Working with Mazhar Arikan of the University of Kansas and John J. Friedewald of Northwestern University, Ata and Parker analyze detailed information about 76,866 deceased kidney donors and 111,579 actual or potential organ recipients from January 2000 through June 2010. Over this time period, the number of deceased donors stayed relatively flat, while there was a dramatic increase in the number of people added to the waiting list for a donated kidney.

The researchers find the average quality of transplanted kidneys worsened over time, perhaps because of the lengthening national wait list. As the median waiting time grows, patients are increasingly likely to accept lower-quality kidneys.

Unfortunately, not every usable kidney is offered for transplant. The trouble is due to quirks in the system for organ donation. The process is run by the United Network for Organ Sharing, or UNOS, a nonprofit regulated by the federal government that arranges for organs to be recovered, tested, packaged, and transported to hospitals for transplant.

UNOS divides the US into 11 regions and 58 “donor service areas,” each served by its own organ procurement organization. When an organ becomes available, it is offered first within its donor service area; more than 70% of kidneys from deceased donors are transplanted locally. If no patient in that area accepts the kidney, it is offered regionally, then nationally.

Wait times for kidney transplants vary dramatically across donor service areas, from less than a year to more than four years, according to one recent study. The longer the local wait time, the more likely a given kidney is to be accepted and procured rather than discarded.

The problem is that not every kidney is as good as another. A kidney’s quality is measured by an index that captures how likely a recipient’s body is to reject the organ, using factors that include the donor’s height, weight, age, ethnicity, history of hypertension and diabetes, and cause of death.

In donor service areas with shorter wait times, the lower-quality kidneys are less likely to be accepted by a local patient. A willing recipient has to be found before the donor goes into the operating room, so when the potential recipients in a local area refuse an organ, there isn’t always enough time to offer it regionally and nationally. Ata and Parker find that in these areas, those kidneys often go unused, even though a patient in another part of the country, facing a long wait time, might be willing to take one.

Ata and Parker point out that in New York, the waiting time for a kidney transplant can exceed two years, while in a donor service area in Utah, the wait typically is less than a year. Perhaps unsurprisingly, the lowest-quality kidneys transplanted in New York are consistently worse than the lowest-quality kidneys that were available but not procured in the Utah area, because New York patients are more willing to accept lower-quality organs. This mismatch shows that some kidneys in Utah, which New York recipients would be happy to use, are potentially going to waste.

“What if we shared those marginal-quality organs more immediately?” asks Parker.

To improve the situation, the researchers develop a game-theory model, a mathematical means of studying strategic decision-making. In their model, kidneys vary in quality, and patients can decide to accept a particular kidney or wait for a better one, with no penalty for turning one down. The model shows that changes to the kidney-allocation policy could increase the supply of organs available for transplant.
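The accept-or-wait trade-off can be made concrete with a much simpler model than the researchers’ game-theoretic one: a single patient facing a textbook optimal-stopping problem. Assume, hypothetically, that a kidney offer arrives each period with probability `offer_prob`, that its quality is uniform on [0, 1], that refusing costs nothing, and that future value is discounted by a factor `beta`. The patient then accepts any kidney whose quality is at least a reservation level r solving r = beta * (p * E[max(q, r)] + (1 - p) * r). A short-wait area corresponds to a high offer probability and a long-wait area to a low one; the function names and parameter values are illustrative assumptions.

```python
"""Single-patient optimal-stopping toy for kidney offers.

A hypothetical illustration, not Ata and Parker's game-theory model.
"""


def reservation_quality(offer_prob: float, beta: float = 0.95, tol: float = 1e-12) -> float:
    """Lowest kidney quality worth accepting.

    Offers arrive each period with probability offer_prob; quality q ~ Uniform(0, 1).
    The reservation r solves r = beta * (p * E[max(q, r)] + (1 - p) * r), and for a
    uniform q, E[max(q, r)] = (1 + r**2) / 2. The map's slope is at most beta on
    [0, 1], so fixed-point iteration converges.
    """
    r = 0.0
    while True:
        new_r = beta * (offer_prob * (1 + r * r) / 2 + (1 - offer_prob) * r)
        if abs(new_r - r) < tol:
            return new_r
        r = new_r


def refused_but_wanted(short_wait_prob: float, long_wait_prob: float,
                       beta: float = 0.95) -> float:
    """Share of offered kidneys (uniform quality) refused in the short-wait area
    that a patient in the long-wait area would have accepted."""
    gap = reservation_quality(short_wait_prob, beta) - reservation_quality(long_wait_prob, beta)
    return max(0.0, gap)


if __name__ == "__main__":
    for p in (0.1, 0.5):
        print(f"offer probability {p}: accept quality >= {reservation_quality(p):.3f}")
    print(f"marginal band: {refused_but_wanted(0.5, 0.1):.3f}")
```

Because the reservation level rises with the offer probability, patients in the short-wait area turn down kidneys in the band between the two reservation levels that a long-wait patient would accept, the same kind of mismatch the researchers describe between Utah and New York.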

These insights could influence the proposals of the federal Kidney Transplantation Committee, which is considering changing how kidneys are allocated across the nation. The researchers find that if the poorest-quality 15% of kidneys were offered more broadly from the start, whether regionally or nationally, 56–124 more kidneys would be transplanted every year, possibly extending the lives of patients who would otherwise die waiting.

“If offered regionally, an additional 66 kidneys per year would be procured. And if offered nationally, immediately, an additional 138 kidneys would be procured every year,” says Parker. That may not sound like a huge number, he says, “but it would matter if you’re on that waiting list.”

Just as kidneys are in short supply, so are livers. On a typical day, more than 16,000 people in the US are hoping for a new liver, yet only 6,000 livers are likely to be transplanted per year. For patients with end-stage liver disease, a transplant remains the only effective treatment.

In the late 1990s, frustration with the shortages led more patients to settle for part of a liver from a living donor, rather than wait for someone with a usable liver to die. In 2001, living donors accounted for 10% of all adult liver transplants, although the practice declined somewhat after the highly publicized death of a donor, 57-year-old Mike Hurewitz, at Mount Sinai Hospital in Manhattan.

On average, patients live longer when they receive livers from living donors than when they receive livers from deceased donors. However, the procedure continues to raise concerns. Last year, 56-year-old Paul Hawks died on the operating table during a procedure to remove 60% of his liver, intended for his brother-in-law, Tim Wilson. Wilson received the transplant but died less than a year later from advanced liver disease. Today, about 300 partial livers are transplanted each year from living donors, 4% of all liver transplants.

The question, then, is this: at what point in the progression of a patient’s liver disease should a doctor, acting on behalf of a patient, decide to take the risk of accepting a liver from a living donor, rather than wait for a liver from a deceased donor to become available via a long waiting list?

Burhaneddin Sandıkçı, associate professor of operations management at Chicago Booth, investigates this question with the University of Pittsburgh’s Mark S. Roberts, Cindy Bryce, Joyce Chang, Mehmet Demirci, Sepehr N. Proon, and Andrew J. Schaefer. Oguzhan Alagoz of the University of Wisconsin-Madison also contributes to the study.

In work published in 2007, the researchers develop a mathematical model to estimate the ideal timing of a liver transplant from a living donor, based on maximizing the sum of a patient’s survival time before and after the transplant.

The timing is expressed in terms of a score called MELD, short for Model for End-Stage Liver Disease. Doctors use MELD to estimate a patient’s risk of dying within the next three months without a transplant, and thus how urgently she needs one. Scores range from 6, meaning less ill, to 40, meaning gravely ill. MELD is derived from levels of creatinine and bilirubin in the blood, as well as INR (international normalized ratio), a laboratory measure of how quickly the blood clots.
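For concreteness, the score can be computed from the three lab values. The sketch below follows the original UNOS version of MELD, before serum sodium was added to the formula, as we understand it: lab values below 1.0 are set to 1.0, creatinine is capped at 4.0 mg/dL (and set to 4.0 for patients on dialysis), and the result is multiplied by 10, rounded, and capped at 40. The function name and the use of Python’s `round` are our choices; any clinical use should rely on the official OPTN calculation.

```python
import math


def meld_score(bilirubin_mg_dl: float, inr: float, creatinine_mg_dl: float,
               dialysis_twice_last_week: bool = False) -> int:
    """Original (pre-sodium) MELD score as an integer between 6 and 40."""
    # Values below 1.0 are raised to 1.0 so that no logarithm is negative.
    bili = max(bilirubin_mg_dl, 1.0)
    inr = max(inr, 1.0)
    # Creatinine is capped at 4.0 mg/dL and set to 4.0 for patients on dialysis.
    creat = 4.0 if dialysis_twice_last_week else min(max(creatinine_mg_dl, 1.0), 4.0)
    raw = 0.957 * math.log(creat) + 0.378 * math.log(bili) + 1.120 * math.log(inr) + 0.643
    return min(40, max(6, round(10 * raw)))


if __name__ == "__main__":
    print(meld_score(2.0, 1.5, 1.2))  # -> 15
```

With all three lab values at their floor of 1.0, only the constant term remains, which is why the scale starts at 6.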

The researchers’ model is formulated as a Markov decision process, a mathematical framework that models situations where outcomes are partly random and partly controlled by the decision-maker. For each day a patient waits for a liver, the model compares how long she could expect to survive by accepting an organ from a living donor now with how long she could expect to survive if she postponed the decision by one day in the hope of receiving a liver from a deceased donor.
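The daily trade-off described above can be written as a small Markov decision process and solved by value iteration. The sketch below is a toy stand-in for the researchers’ model, not a reproduction of it: health is collapsed into five coarse MELD bands, each period is a week rather than a day (to keep the iteration short), and every probability and survival figure (`DEATH`, `PROGRESS`, `LIVING`, `DECEASED`, `OFFER_WEIGHT`, `offer_rate`) is a hypothetical placeholder. In each band the patient either accepts the living-donor liver and collects its expected post-transplant survival, or waits a week, gaining one more week of life, possibly receiving a deceased-donor offer, and risking death or disease progression.

```python
"""Toy Markov decision process: when to accept a living-donor liver.

Every number is a hypothetical placeholder, not an estimate from Sandıkçı and
colleagues, and each period is one week rather than one day.
"""
from typing import List, Tuple

BANDS = ["MELD 6-10", "MELD 11-15", "MELD 16-20", "MELD 21-30", "MELD 31-40"]
DEATH = [0.001, 0.002, 0.006, 0.02, 0.07]  # weekly chance of dying while waiting
PROGRESS = 0.015                           # weekly chance of worsening by one band
LIVING = [500, 490, 470, 430, 350]         # expected weeks alive after a living-donor transplant
DECEASED = [520, 510, 490, 450, 370]       # expected weeks alive after a deceased-donor transplant
OFFER_WEIGHT = [0.1, 0.5, 1.0, 2.0, 3.0]   # sicker patients receive deceased-donor offers more often


def wait_value(s: int, value: List[float], offer_rate: float) -> float:
    """Expected total weeks alive from postponing the decision by one week in band s."""
    nxt = min(s + 1, len(BANDS) - 1)  # the sickest band cannot worsen further
    # Value of next week's decision if no transplant happens this week (0 if she dies).
    cont = (1 - DEATH[s]) * ((1 - PROGRESS) * value[s] + PROGRESS * value[nxt])
    offer = min(1.0, offer_rate * OFFER_WEIGHT[s])
    # One more week alive; a deceased-donor offer is taken only if it beats waiting.
    return 1 + offer * max(DECEASED[s], cont) + (1 - offer) * cont


def solve(offer_rate: float, tol: float = 1e-9,
          max_iter: int = 100_000) -> Tuple[List[float], List[str]]:
    """Value iteration on V(s) = max(LIVING[s], wait_value(s, V)); returns (V, policy)."""
    value = [0.0] * len(BANDS)
    for _ in range(max_iter):
        new = [max(LIVING[s], wait_value(s, value, offer_rate)) for s in range(len(BANDS))]
        gap = max(abs(a - b) for a, b in zip(new, value))
        value = new
        if gap < tol:
            break
    policy = ["accept" if LIVING[s] >= wait_value(s, value, offer_rate) else "wait"
              for s in range(len(BANDS))]
    return value, policy


if __name__ == "__main__":
    for rate in (0.01, 0.05):  # low vs. high base rate of deceased-donor offers
        _, policy = solve(rate)
        print(rate, dict(zip(BANDS, policy)))
```

With these invented inputs the optimal policy has a threshold shape: wait while relatively healthy, accept once the disease is advanced enough. Raising `offer_rate` makes waiting more valuable and moves the threshold toward sicker bands, mirroring the model’s prediction that patients with a good shot at a deceased-donor liver should hold out longer.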

The model suggests a patient shouldn’t accept a living-donor transplant until her MELD score reaches a relatively high threshold, such as 15, particularly if she has a slowly progressing liver disease. It also suggests waiting until she reaches that higher score if the quality of her living donor’s liver is relatively low, or if she lives in an area with shorter wait times for livers from deceased donors.

Sandıkçı compares this to shopping for a house. “If I am not under pressure to find a house, I will take my time to find the best house I can,” he says.

But actual transplant practice doesn’t conform to the model’s predictions. In another study, Sandıkçı and his colleagues use publicly available data from the Organ Procurement and Transplantation Network. They look at patients who were on the UNOS waiting list from January 2002 to October 2008, as well as at how long a sample of 1,587 US adults waited during that same period before receiving livers from living donors.

The researchers compare the predictions to practice with respect to three categories: the progression of liver disease, the quality of donor organs, and the probability of receiving a deceased-donor liver offer.

Unfortunately, they find that practice is the opposite of what the model predicts. The model predicts that to maximize life expectancy, patients with slowly progressing liver disease should hold out for the possibility of getting an organ from a deceased donor. The model also predicts that patients who could receive lower-quality living-donor organs, or who had a higher likelihood of receiving a deceased-donor organ through UNOS because of either their geography or their blood type, would similarly hold out longer before finalizing their transplant decisions. But instead, the data show, patients were quick to accept livers from living donors.

“We were completely surprised to see that,” says Sandıkçı. “Some patients indeed seem to be acting too quickly to get their living-donor transplants despite a slowly-progressing disease, or despite having a good shot at a better-quality deceased-donor liver.”

These transplant decisions appear to have shortened the life expectancy of patients with primary biliary cirrhosis, a particular type of liver disease, by an average of seven months. Some patients lost an estimated 1.4 years of life because they opted too quickly to receive a liver from a living donor. UNOS data indicates that some 60% of patients with MELD scores of 15 or lower received a living-donor organ transplant, although they were at a level of illness considered “too healthy” for a deceased-donor transplant.

MELD 15 is a threshold that UNOS has recently adopted when allocating livers from deceased donors. These livers had been offered in the same manner as kidneys: first within the local donor service area, then regionally, then nationally. Now UNOS gives priority to candidates with a MELD score of 15 or higher: if no local candidate on the waiting list meets that threshold, the liver has to be shared regionally.
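The allocation rule just described can be sketched as a ranking function. This is a deliberately simplified, hypothetical version of the MELD 15 rule as the paragraph describes it: it ignores blood type, the most urgent status categories, and the other criteria UNOS actually applies, and the exact tier order (local, then regional candidates at or above the threshold, then local and regional candidates below it, then national candidates) is our assumption. Within each tier, sicker patients with higher MELD scores come first.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LiverCandidate:
    name: str
    area: str  # "local", "regional", or "national" relative to the donor
    meld: int


def liver_offer_order(candidates: List[LiverCandidate], threshold: int = 15) -> List[str]:
    """Rank candidates for a deceased-donor liver under a simplified MELD-threshold rule."""
    def tier(c: LiverCandidate) -> int:
        if c.area == "national":
            return 4
        above = c.meld >= threshold
        if c.area == "local":
            return 0 if above else 2
        return 1 if above else 3  # regional

    # Lower tier first; within a tier, the sickest (highest MELD) candidate first.
    return [c.name for c in sorted(candidates, key=lambda c: (tier(c), -c.meld))]


if __name__ == "__main__":
    pool = [LiverCandidate("A", "local", 12), LiverCandidate("B", "regional", 22),
            LiverCandidate("C", "local", 18), LiverCandidate("D", "national", 35),
            LiverCandidate("E", "regional", 9)]
    print(liver_offer_order(pool))  # -> ['C', 'B', 'A', 'E', 'D']
```

In the example, regional candidate B is offered the liver before local candidate A, because A falls below the threshold while B is above it.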

Sandıkçı and his colleagues suggest the organ transplantation community should adopt similar standards for living-donor liver transplants, as well. “You should not rush to go through the living-donor transplantation, which we see is happening,” he says.

Despite the interest in making health services such as organ transplants more efficient and effective, many health-care systems are slow to change. It is particularly hard to import ideas that have worked in other industries, as many people don’t believe hospitals and doctors’ offices are subject to the same market forces as grocery stores or car assembly lines.

After all, the argument goes, when people are making life-and-death decisions for themselves or their families, they may not perform the same kind of cost-benefit analysis that a consumer would when deciding whether to buy a new car or iPhone. When a person is having an emergency, an ambulance may have to take her to the nearest hospital, not to the one she might choose under calmer conditions. In the US, the prices and even the quality of health-care services are extremely opaque, making comparisons difficult. Arcane insurance billing systems add to the confusion.

So goes the conventional wisdom, but Chad Syverson, J. Baum Harris Professor of Economics at Chicago Booth, set out to put this line of thinking to the test by studying hospital productivity, which varies widely across hospitals and geographies. Depending on the market, annual Medicare spending per person ranges from $6,264 to $15,571—yet spending more doesn’t necessarily produce better outcomes for patients.

From his own research on other industries, Syverson knows that almost every sector shows great disparities in productivity. In earlier work on narrowly defined manufacturing industries, for instance, he found that a company in the 90th percentile by productivity creates twice as much output from the exact same inputs as a company languishing in the 10th percentile. These differences exist not only from one region of the US to the next but also within specific markets.

A wealth of previous research has found that competitive pressures typically force less-productive businesses to become more efficient, shrink or, in some cases, disappear. Could the same forces influence health care? Working with Amitabh Chandra of Harvard, and Amy Finkelstein and Adam Sacarny of MIT, Syverson looks at hospital productivity in treating heart attacks.

The researchers estimate this productivity by analyzing all Medicare claims for heart attacks in people ages 65 and older, about 3.5 million US patients, from 1993 to 2007. They examine both the treatments given and their outcomes, based on patient survival rates one year following the heart attack. Cardiovascular disease is the leading cause of death in the US, so the study has clear implications for how hospitals operate.

Syverson and his coauthors look at the relationship between the resources spent on each heart-attack patient and the number of days the patient survives. On average, a hospital administers treatments worth $16,000 in the first 30 days after a heart attack, but the standard deviation is quite large, about $12,000. That means that costs can range from only a few thousand dollars to many times the average level.

To account for differences in patients’ health, the researchers adjust their analysis using patient demographics and information about the patients’ other medical conditions. To verify that they are estimating productivity appropriately, they compare their estimates with observable measures of hospital quality, such as whether the hospital gives patients beta blockers, inexpensive drugs that reduce stress on the heart. These quality measures have been collected by the Centers for Medicare & Medicaid Services for about a decade and have been publicly reported since 2005. The researchers find that their estimates of hospital productivity correlate with the quality measures.
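One common way to turn risk-adjusted productivity into numbers is to regress a patient outcome on treatment spending, patient risk factors, and a separate indicator (fixed effect) for each hospital; the estimated hospital effect then measures how much more survival a hospital produces from the same inputs for similar patients. The sketch below applies that idea to simulated data, so every number in it is made up, and the specification is a simplified illustration rather than the authors’ own. It also mimics their validation step by checking the estimates against a noisy, hypothetical quality measure.

```python
import numpy as np

rng = np.random.default_rng(0)
n_hosp, per_hosp = 50, 200

# Simulated ground truth: each hospital's productivity shifts patients' log survival.
true_prod = rng.normal(0.0, 0.1, n_hosp)
hospital = np.repeat(np.arange(n_hosp), per_hosp)
risk = rng.normal(0.0, 1.0, hospital.size)                   # patient severity index
log_spend = rng.normal(np.log(16_000), 0.5, hospital.size)   # log treatment spending
log_survival = (true_prod[hospital] + 0.3 * log_spend - 0.4 * risk
                + rng.normal(0.0, 0.5, hospital.size))

# Design matrix: one indicator column per hospital, plus spending and patient risk.
X = np.column_stack([np.eye(n_hosp)[hospital], log_spend, risk])
coef, *_ = np.linalg.lstsq(X, log_survival, rcond=None)
est_prod = coef[:n_hosp] - coef[:n_hosp].mean()  # productivity relative to the average hospital

# Validation: compare with a noisy stand-in for a process-quality measure (e.g. beta-blocker use).
quality = true_prod + rng.normal(0.0, 0.05, n_hosp)
print("corr(estimate, truth):", np.corrcoef(est_prod, true_prod)[0, 1])
print("corr(estimate, quality):", np.corrcoef(est_prod, quality)[0, 1])
```

Because spending and patient risk are controlled for, a hospital scores well only if its patients survive longer than similar patients receiving similar resources elsewhere.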

It turns out that health care is not so different from other industries: more-productive hospitals have higher market shares, measured by the number of patients they treat—and better hospitals are more likely to expand. A 10% increase in a hospital’s productivity is associated with a 30% increase in its market share within its metro area. In five years, that same 10% productivity increase translates into 6% more patients than the hospital would have otherwise.

What’s surprising is that these findings hold true for heart-attack care, a time-sensitive service that might at first seem less responsive to market forces than an elective procedure such as a hip replacement. “Somehow more patients go to better hospitals in the end,” Syverson says.

Syverson cautions that it isn’t clear whether competition between hospitals, or some other factor, causes the changes in market share. It could be that competition drives patients to more-productive hospitals, or that hospital features such as better managers separately but concurrently increase both patient demand and the efficiency of health-care delivery.

Early observations indicate that the relationship between hospital productivity and market share is driven more by the correlation between market share and patient survival than by patients avoiding hospitals that are more likely to use more invasive procedures (though both factors matter). In other words, patients and their families seek out hospitals with higher heart-attack survival rates. It isn’t clear how patients gain this information, since it can be difficult to compare the quality of health-care providers. Syverson and his colleagues suggest that a hospital’s reputation may spread by word of mouth, influencing patients, or their doctors or family members, to request treatment at better hospitals.

Whatever the cause, the results show that market forces favor hospitals that save more lives—a finding that could change the debate about how to best serve patients. “If we can figure out how to strengthen that process, how to get the bad ones to emulate the good ones, that’s what I’d like to see,” Syverson says.

In the US, the Affordable Care Act will give hospitals further incentives to become more productive, by offering financial rewards for those that score well on clinical outcomes and patient satisfaction surveys. In addition, hospitals with high rates of readmission and hospital-acquired infections will see their Medicare reimbursement rates cut. The challenge for hospitals will be making the shift from a system where they are paid for each procedure to a more balanced approach.

Recent years have seen fundamental debates about health-care provision, from the Obamacare tussle in the US to discussions of how to make government-provided health-care systems more efficient in Europe. The research by Chicago Booth faculty indicates that it isn’t necessary to revolutionize health-care systems to make immediate, meaningful improvements to consumers’ quality of life. There may be more-efficient ways to treat ailments faced by millions of people, such as heart attacks and cancer. The benefits from these recommendations can also be measured financially: the better people feel, the more productive they are, and the less they need to spend on medical services to cure their illnesses. That shift could not only improve the health of people around the world, it could also improve the health of their economies.
