There’s a cycle in the finance world whereby good ideas become products, which sell well and spur demand for more good ideas. Current case in point: the cycle involving investable factors.

“Factors” is the catch-all term for the mechanisms that drive asset prices, and factors are being discovered almost as quickly as they can be packaged and sold to the waiting public.

The investment firms AQR Capital Management and Dimensional Fund Advisors are prominent factor users. The Maryland State Retirement and Pension System, a $52 billion plan that covers more than 400,000 workers and retirees, uses factor products. Many long/short equity hedge funds have incorporated factor hedging into their strategies, and the proliferation of low-cost exchange-traded funds and index mutual funds has made factor-style investing accessible to the retail market.

Fidelity, Vanguard, and BlackRock all offer online explainers of factors, and BlackRock has a head of factor-based strategies who proclaims on the company’s website that “factor investing is the way of the future.” “Institutional investors and active managers have been using factors to manage portfolios for decades,” reads BlackRock’s pitch. “Today, data and technology have democratized factor investing to give all investors access to these historically persistent drivers of return.” BlackRock’s site features an infographic that highlights 12 factors of importance, divided into two categories: macroeconomic (capturing broad risks across asset classes) and style (explaining returns in just one asset class).

And many dozens more are circulating, each presumably a key to greater investment returns. Duke’s Campbell R. Harvey, Texas A&M’s Yan Liu, and University of Oklahoma’s Heqing Zhu have identified more than 300 factors in academic literature. City University of Hong Kong’s Guanhao Feng (a recent graduate of Chicago Booth’s PhD program), Yale’s Stefano Giglio, and Booth’s Dacheng Xiu have collected and investigated over 100 of them, ranging from employee growth to maximum daily return.

But has the hunt for investable factors gone too far? Feng, Giglio, and Xiu are suggesting that it has. On one hand, factors are helpful. If an investor wants to have a truly balanced portfolio, she should do more than make sure she owns both stocks and bonds, and in theory she can use factors to make sure her investments truly represent a diverse basket of assets whose returns are driven by different things.

But are there really 300 separate characteristics associated with higher asset returns, or only a handful of things really driving stock prices? The hundreds of factors that appear in academic research are based on many possible company characteristics, which could, in theory, capture many types of risk. However, Feng, Giglio, and Xiu are skeptical that all these factors are useful. Two portfolios that look different from each other (for example, one that overweights small companies and another that is heavy on illiquid companies) could end up giving investors exposure to the same underlying risk. That is, many of these factors may be redundant. Is the factor industry an example of a good idea that has gone too far?

Factors 101

Before there were 300 factors, there was just one, kind of. The original factor—or the forerunner of factor analysis—is, in a sense, the capital asset pricing model. Largely developed by Stanford’s William F. Sharpe, who was awarded the Nobel Memorial Prize in Economic Sciences in 1990, the CAPM posits that investors are paid for both the time value of their money and the risk they assume in holding an asset. The model has helped investors determine how much compensation they should demand for holding a risky asset.

The formula generates an expected return on an asset based in part on the security’s beta, which measures how strongly its returns move with the market’s (its covariance with the market, scaled by the market’s variance). It is not reliably predictive: some research on the CAPM has suggested that low-beta stocks tend to have higher returns than predicted, while higher-beta stocks do not live up to expectations. Still, some finance professionals refer to the CAPM as “the one-factor model,” where market beta is the sole explanatory factor of expected returns.
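To make the one-factor idea concrete, here is a minimal Python sketch. It estimates beta as the covariance of a stock’s returns with the market’s returns divided by the market’s variance, then applies the CAPM relation: expected return equals the risk-free rate plus beta times the market’s expected excess return. The return series, the rates, and the function names are invented for illustration and are not drawn from the research discussed here.

```python
from statistics import mean

def beta(asset_returns, market_returns):
    """Covariance of asset and market returns divided by market variance."""
    if len(asset_returns) != len(market_returns) or len(asset_returns) < 2:
        raise ValueError("need two equal-length series with at least two points")
    a_bar, m_bar = mean(asset_returns), mean(market_returns)
    cov = sum((a - a_bar) * (m - m_bar)
              for a, m in zip(asset_returns, market_returns))
    var = sum((m - m_bar) ** 2 for m in market_returns)
    return cov / var  # the 1/(n-1) scaling cancels in the ratio

def capm_expected_return(risk_free, beta_value, market_expected):
    """CAPM: risk-free rate plus beta times the market risk premium."""
    return risk_free + beta_value * (market_expected - risk_free)

# Hypothetical monthly returns; the stock is built to move 1.5x the market.
market = [0.02, -0.01, 0.03, 0.01, -0.02]
stock = [0.03, -0.015, 0.045, 0.015, -0.03]

stock_beta = beta(stock, market)
# Assumed annual inputs: 2% risk-free rate, 8% expected market return.
expected = capm_expected_return(0.02, stock_beta, 0.08)
print(f"beta = {stock_beta:.2f}, expected return = {expected:.1%}")
```

Because the made-up stock series is exactly 1.5 times the market series, the estimated beta comes out at about 1.5 by construction. With real data the estimate would be noisy, which is part of why the model’s predictions are hard to verify.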

In 1992, expanding on the CAPM, Chicago Booth’s Eugene F. Fama and Dartmouth’s Kenneth R. French published a three-factor model, identifying two more things that generate returns: size and value. They observed that small-cap companies outperformed large-cap ones over time, and companies with low price-to-book ratios outperformed companies with higher price-to-book ratios.

Investors generally accepted the Fama-French three-factor model, yet it still seemed incomplete to many adherents of Fama’s efficient-markets hypothesis. If markets are efficient and assets priced accordingly, a return is the compensation an investor receives for taking on some risk by holding an asset. Factors capture this risk, so when investors take on exposures to certain factors in their portfolios, they earn a risk premium as compensation. But some mutual-fund managers consistently outperformed or underperformed market benchmarks. Were they taking on risks beyond just three? The natural inclination of an efficient-markets believer, when confronted with an outperforming manager, is to assume that the manager is taking on and being paid for unquantified risks that are driving the asset returns. In 1997, Mark Carhart, who founded Kepos Capital, published an article that suggested momentum as a fourth factor.

Between this point and 2016, researchers developed another 300 factors, some of which are based on the idea that investors’ biases and behaviors can affect asset prices. Take momentum, where the recent direction and slope of a stock’s returns are used to predict its future returns. The factors contributed by behavioral economists prompted debate about whether they were still grounded in the efficient-markets hypothesis.

Even Fama and French contributed to the growing pile of factors. In 2013, Fama was awarded the Nobel Memorial Prize in Economic Sciences for his work on asset pricing. Less than two years later, he and French came out with research in which they suggest two more explanatory factors in stock returns: profitability (high-earnings-growth companies get higher returns) and investment (companies with high total asset growth typically generate lower-than-average returns).

Competition within academia is fueling a rush to discover or develop new factors for publication, says Fama. “You have thousands of academics in this area, all of whom need to come up with papers to publish, so the door is open wide.”

Close the barn door

But there’s now a notion that it’s time to partially close this door, or at least to make sure researchers test new factors rigorously, using international stock market data and relating the intuition behind the factors to economic models, such as the dividend discount model.

Feng, Giglio, and Xiu suggest that many touted discoveries are mere subsets of the original three, or the more recent five, or perhaps a few other already-discovered factors. This redundancy can be tough for researchers to spot. Just as investors evaluate a mutual fund or hedge fund by comparing it to a benchmark index, researchers evaluate a new factor by comparing it to a benchmark factor model, to see if it produces an extra return. If researchers omit important factors from the model, new factors may appear to be useful, even though they may be simply replicating the ones that were omitted.

For example, size (as measured by a company’s market capitalization) is a long-accepted factor and component of stock returns. Small-capitalization stocks tend to outperform large-cap stocks over time. This suggests an investor who takes on extra risk by investing in smaller companies receives compensation for doing so.

But small-cap companies also tend to be less liquid than large-cap ones. So it’s reasonable to suggest that liquidity might also be a factor in returns, and our investor is also being compensated for holding less-liquid, and therefore riskier, assets. But in the case of stocks, these researchers hypothesize that liquidity may stand alone as a factor or it may, in fact, simply be the size factor with a different label.
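The size-versus-liquidity question can be illustrated with simulated data. In the sketch below, which uses made-up numbers rather than market data, a hypothetical “liquidity” factor is constructed as the size factor plus noise, so it carries no premium of its own. Measured against a benchmark containing only the market, it appears to earn an extra return (a positive intercept, or alpha); once size is added to the benchmark, that alpha shrinks toward zero. The means, volatilities, sample length, and the small `ols` helper are all assumptions chosen for illustration.

```python
import random

def ols(y, columns):
    """Least-squares coefficients for y on an intercept plus the given columns."""
    X = [[1.0] + [col[t] for col in columns] for t in range(len(y))]
    k = len(X[0])
    # Normal equations (X'X) b = X'y, stored as an augmented matrix.
    A = [[sum(row[i] * row[j] for row in X) for j in range(k)]
         + [sum(row[i] * y_t for row, y_t in zip(X, y))]
         for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for i in range(k):
        pivot = max(range(i, k), key=lambda r: abs(A[r][i]))
        A[i], A[pivot] = A[pivot], A[i]
        for r in range(k):
            if r != i:
                f = A[r][i] / A[i][i]
                A[r] = [a - f * b for a, b in zip(A[r], A[i])]
    return [A[i][k] / A[i][i] for i in range(k)]

random.seed(0)
T = 5000  # hypothetical number of monthly observations
market = [random.gauss(0.005, 0.04) for _ in range(T)]
size = [random.gauss(0.005, 0.02) for _ in range(T)]
# "Liquidity" is size plus noise: no independent premium, by construction.
liquidity = [s + random.gauss(0.0, 0.005) for s in size]

# Benchmark 1: market only. Benchmark 2: market plus size.
alpha_market_only = ols(liquidity, [market])[0]
alpha_with_size, _, size_loading = ols(liquidity, [market, size])

print(f"alpha vs. market only:     {alpha_market_only:.4f}")
print(f"alpha vs. market and size: {alpha_with_size:.4f}")
print(f"loading on size:           {size_loading:.2f}")
```

The apparent alpha in the first regression is simply the size premium showing up under a different label, because the benchmark left size out.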

“We’re not saying those 300 factors are fake,” says Xiu. “It may be true that some deliver significant risk premia for investors. But they could also be simply duplicating a few other important factors.” Xiu and his colleagues argue that many of these factors are either useless or account for return variations that have already been explained. “We think many of these newly proposed factors are redundant and are the result of somewhat arbitrary choices of existing factors as controls—one form of abusive data mining,” they write.

Torturing the data

Such mining may be prompted by the requirements of journals. Goodhart’s law, named after London School of Economics’ Charles Goodhart, says that “when a measure becomes a target, it is no longer a measure.” This could be what’s happening as factor hunters search too strenuously for factors that will adhere to the measures journals require for publication.

Specifically, journals require a new factor to have a probability value (P value) of less than 5 percent, meaning that if the factor truly had no effect, a result at least as strong as the one reported would turn up by chance less than 5 percent of the time. Since factor foragers can target the P value, they are susceptible, consciously or not, to data dredging and mining (presenting patterns within data as statistically significant without presenting a hypothesis to explain causality) or outright P-value hacking (setting up the sample to get the desired result).
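A small simulation shows why a 5 percent threshold offers limited protection when hundreds of candidates are tested. The sketch below generates 300 “factors” that are pure noise, with a true mean return of zero, and counts how many clear the conventional bar of an absolute t-statistic above 1.96 (roughly a two-sided P value under 5 percent). The sample length, volatility, and random seed are arbitrary assumptions; nothing here uses real return data.

```python
import random

random.seed(42)
N_FACTORS = 300  # echoes the number of factors cited in the literature
N_MONTHS = 240   # hypothetical 20-year monthly sample

def t_stat(returns):
    """t-statistic for the hypothesis that the mean return is zero."""
    n = len(returns)
    avg = sum(returns) / n
    sd = (sum((r - avg) ** 2 for r in returns) / (n - 1)) ** 0.5
    return avg / (sd / n ** 0.5)

t_stats = []
for _ in range(N_FACTORS):
    # Each candidate factor is noise with a true mean of zero.
    noise = [random.gauss(0.0, 0.05) for _ in range(N_MONTHS)]
    t_stats.append(t_stat(noise))

false_positives = sum(1 for t in t_stats if abs(t) > 1.96)
best = max(abs(t) for t in t_stats)
print(f"{false_positives} of {N_FACTORS} noise factors look significant; "
      f"best |t| = {best:.2f}")
```

On average about 5 percent of the noise factors, or roughly 15 of 300, should pass the test by luck alone. A researcher who reports only the winners would appear to have found something.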

Why explaining stock returns remains so complex

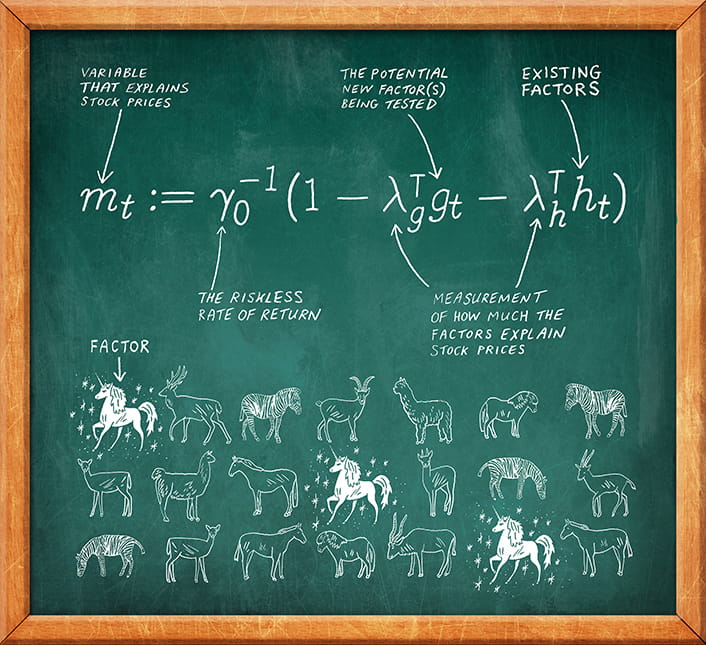

In the academic literature on predicting asset values, researchers have proposed hundreds of factors to explain an individual stock’s return.

Some researchers argue in favor of reducing the number of factors to a handful, the economic equivalent of searching for elementary particles in physics. But on the physics side, things did not get simpler as they got smaller, and they might not in finance either, suggest University of Michigan’s Serhiy Kozak, Chicago Booth’s Stefan Nagel, and University of Maryland’s Shrihari Santosh, who argue that the quest for simplification, while well-meaning, may be misguided.

“Our results suggest that the empirical asset-pricing literature’s multi-decade quest for a sparse characteristics-based factor model (e.g., with 3, 4, or 5 characteristics-based factors) is ultimately futile,” the researchers write.

They constructed a stochastic-discount-factor model, which pins down the factors that allow investors to earn a return premium, to analyze the predictive power of a large number of stock-return models. Factors in these models are attributes of companies or stocks that help explain a security’s performance. Size as a factor, for example, tells us that companies with small market capitalization outperform large-cap companies. Stocks with low price-to-book ratios outperform stocks with high ratios, and so forth.

The five-factor model proposed in 2015 by Chicago Booth’s Fama and Dartmouth’s French represents such a stochastic-discount-factor model, focusing on size, value, profitability, investment patterns, and market risk. But Kozak, Nagel, and Santosh argue that there is not enough redundancy to whittle things down to a handful of predictive factors.

The term “redundancy” has to do with factors that have different names but the same explanatory power. For example, a researcher might propose an “emerging-markets factor” that shows stocks in emerging markets outperform those in developed markets. But the same effect might be better explained by the size factor, as emerging-markets companies tend to have lower market capitalization than those in developed markets.

They test this and find that models based on a limited number of factors, including Fama-French from 2015, are not able to fully explain equity returns between 2005 and 2016. There must be something more at work, they suggest.

“Sparsity is elusive,” the researchers write. A model must include many factors to be complete. While they say they believe there is some redundancy out there within the world of factors, they suggest that models based on a half dozen or fewer factors will never fully explain stock returns. Complexity, they might say, is the most resilient factor of them all.

Serhiy Kozak, Stefan Nagel, and Shrihari Santosh, “Shrinking the Cross Section,” NBER working paper, November 2017.

“There is a saying that if you torture the data enough, eventually they will surrender,” says Xiu.

Most of the existing research papers that propose a new factor compare it to an existing benchmark, such as the Fama-French three-factor model, and find that their new factor is useful in explaining more variation in the expected return across stocks. But if researchers consistently compare their new factors to the same benchmarks, and not to other newly discovered factors, it’s possible they essentially keep “discovering” the same factor again and again but give it different names.

Alternatively, some researchers, instead of using the Fama-French factors as a benchmark, pick an arbitrary factor that makes their results look significant.

Xiu and his coresearchers instead propose using the entire set of existing factors (the factor zoo, as some finance academics have termed it) as a benchmark for newly proposed factors—a more robust way to test whether or not newly proposed factors really bring something new to the table. Thus, old factors could help determine if newly proposed factors are just old ones in fancy repackaging.

The researchers say the challenge of using the entire zoo is the curse of dimensionality, a well-known problem that could render standard methods used to evaluate new factors (such as a simple linear regression) infeasible to apply. To counteract this, they propose using a machine-learning method to select the best benchmark.

Using this method, they analyzed 99 factors, pitting factors discovered in 2010 and later against those published earlier to determine which newer factors are truly new. They find that a few of the newly proposed factors do seem to have some utility, notably the profitability and investment factors proposed by Fama and French, while many others fall short. Feng, Giglio, and Xiu find that 13 of the 99 factors hold some explanatory power, which is enough to keep the factor hunt going but also a reason for skepticism when assessing the hundreds of factors in the academic literature.
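The benchmark-selection step can be sketched with LASSO, a standard variable-selection technique. The researchers’ specific machine-learning method is not described here, so LASSO is used purely as an assumed stand-in, and the data are simulated: a hypothetical zoo of 20 uncorrelated factors and a “new” factor that is secretly a blend of two of them plus noise. The penalty `lam`, the sample size, and all function names are illustrative choices.

```python
import random

def demean(series):
    avg = sum(series) / len(series)
    return [v - avg for v in series]

def soft_threshold(z, lam):
    """Shrink z toward zero by lam, setting small values exactly to zero."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0

def lasso(y, columns, lam, sweeps=50):
    """Coordinate descent for (1/2T)*||y - Xb||^2 + lam*||b||_1 (no intercept)."""
    T = len(y)
    coefs = [0.0] * len(columns)
    resid = list(y)  # residual y - Xb, kept up to date as coefs change
    norms = [sum(v * v for v in col) / T for col in columns]
    for _ in range(sweeps):
        for j, col in enumerate(columns):
            # Covariance of column j with the residual excluding its own term.
            rho = sum(c * (r + c * coefs[j]) for c, r in zip(col, resid)) / T
            new = soft_threshold(rho, lam) / norms[j]
            if new != coefs[j]:
                delta = new - coefs[j]
                resid = [r - c * delta for c, r in zip(col, resid)]
                coefs[j] = new
    return coefs

random.seed(1)
T, P = 600, 20  # hypothetical months and number of existing factors
zoo = [demean([random.gauss(0.0, 1.0) for _ in range(T)]) for _ in range(P)]
# The "new" factor repackages existing factors 3 and 17, plus noise.
new_factor = demean([0.8 * zoo[3][t] + 0.6 * zoo[17][t] + random.gauss(0.0, 0.3)
                     for t in range(T)])

coefs = lasso(new_factor, zoo, lam=0.15)
benchmark = [j for j, b in enumerate(coefs) if b != 0.0]
print("zoo factors selected as the benchmark:", benchmark)
```

The penalty lets the procedure search the whole zoo without fitting a coefficient to every member. In this toy setup it should single out the two factors the newcomer was built from, which is the sense in which old factors can expose a new one as repackaging.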

Developing more rigorous factors

Econometrics researchers have been testing and refining asset pricing models since the 1970s, but Feng, Giglio, and Xiu focus on newly proposed factors. They believe that the existing approach to finding factors will inevitably lead to mistakes or a proliferation of ideas that aren’t fully vetted.

“What we are trying to do is to propose a statistical methodology that can tame the factors or anomalies, and we suggest a more robust approach in trying to identify an important one,” says Xiu.

Is that new factor useful?

He says that the techniques they use to evaluate factor efficacy could be applied to mutual-fund and hedge-fund portfolios, so that investors would be able to quantify the sources of returns generated by their managers. For example, a portfolio manager investing in frontier markets might identify the risks associated with emerging economies as a factor that drives returns. However, if those frontier-market companies are also smaller than most, or undervalued, it might really be the size or value factors driving returns.

“There may be many different smart beta portfolios out there that seem distinct but are actually taking on the same risk,” says Xiu.

Another item of interest is whether or not a factor’s persistence is affected by its use. It could be that a factor’s wide adoption undermines its utility, as people crowd into previously unknown trades. Momentum, for example, is a well-established factor prone to such reversals. Price momentum can attract investment dollars that are abruptly withdrawn once the price of a stock has appreciated.

Feng, Giglio, and Xiu want to apply machine learning to asset pricing beyond taming factors or anomalies. After all, if the factor industry is one example of the finance profession cycling out of control, it probably isn’t the only one.

- Eugene F. Fama and Kenneth R. French, “A Five-Factor Asset Pricing Model,” Journal of Financial Economics, April 2015.

- ———, “Common Risk Factors in the Returns on Stocks and Bonds,” Journal of Financial Economics, February 1993.

- Guanhao Feng, Stefano Giglio, and Dacheng Xiu, “Taming the Factor Zoo,” Working paper, December 2017.

- Campbell R. Harvey, Yan Liu, and Heqing Zhu, “ . . . and the Cross-Section of Expected Returns,” Review of Financial Studies, January 2016.
