If you applied for a mortgage, would you be comfortable with a computer using a collection of data about you to assess how likely you are to default on the loan?

If you applied for a job, would you be comfortable with the company’s human-resources department running your information through software that will determine how likely it is that you will, say, steal from the company, or leave the job within two years?

If you were arrested for a crime, would you be comfortable with the court plugging your personal data into an algorithm-based tool, which will then advise your judge on whether you should await trial in jail or at home? If you were convicted, would you be comfortable with the same tool weighing in on your sentencing?

Much of the hand-wringing about advances in artificial intelligence has been concerned with AI’s effects on the labor market. “AI will gradually invade almost all employment sectors, requiring a shift away from human labor that computers are able to take over,” reads a report of the 2015 study panel of Stanford’s One Hundred Year Study on Artificial Intelligence. But whether AI ultimately creates massive unemployment or inspires new, as-yet-unknown professional fields, its perils and promises extend beyond the job market. By replacing human decision-making with automated processes, we can make businesses and public institutions more effective and efficient—or further entrench systemic biases, institutionalize discrimination, and exacerbate inequalities.

These hopes and fears are becoming more salient as terabytes of data are paired with an increasingly accessible collection of AI software to make predictions and tackle problems in both the public and the private sectors. A vast technological frontier, AI has as many potential applications as human intelligence does—and is bound only by human imagination, ethics, and prudence. Research is helping to highlight some of the possibilities and hidden dangers inherent in it, paving the way for more-informed applications of powerful digital tools and techniques.

The growing ubiquity of AI

Though AI has connotations of technological wonder and terror—think sentient computers, à la HAL 9000 from 2001: A Space Odyssey—what companies are doing with it today is far more banal. They’re automating rules-based tasks such as loan processing. Tens of thousands of people working at banks were doing such jobs; now computers are taking over these roles.

“Even though there’s a lot of discussion of AI, what most businesses are doing as their first applications is really very simple,” says Susan Athey, a professor of the economics of technology at Stanford.

There are, of course, exotic business applications of AI, many of them paired with cutting-edge hardware (self-driving trucks, surgical robots, smart assistants such as Siri and Alexa) that are primed to bring dramatic changes to transportation, health care, and other industries. But in the short run, AI will make itself felt in more subtle ways in industries such as finance, communications, and retail.

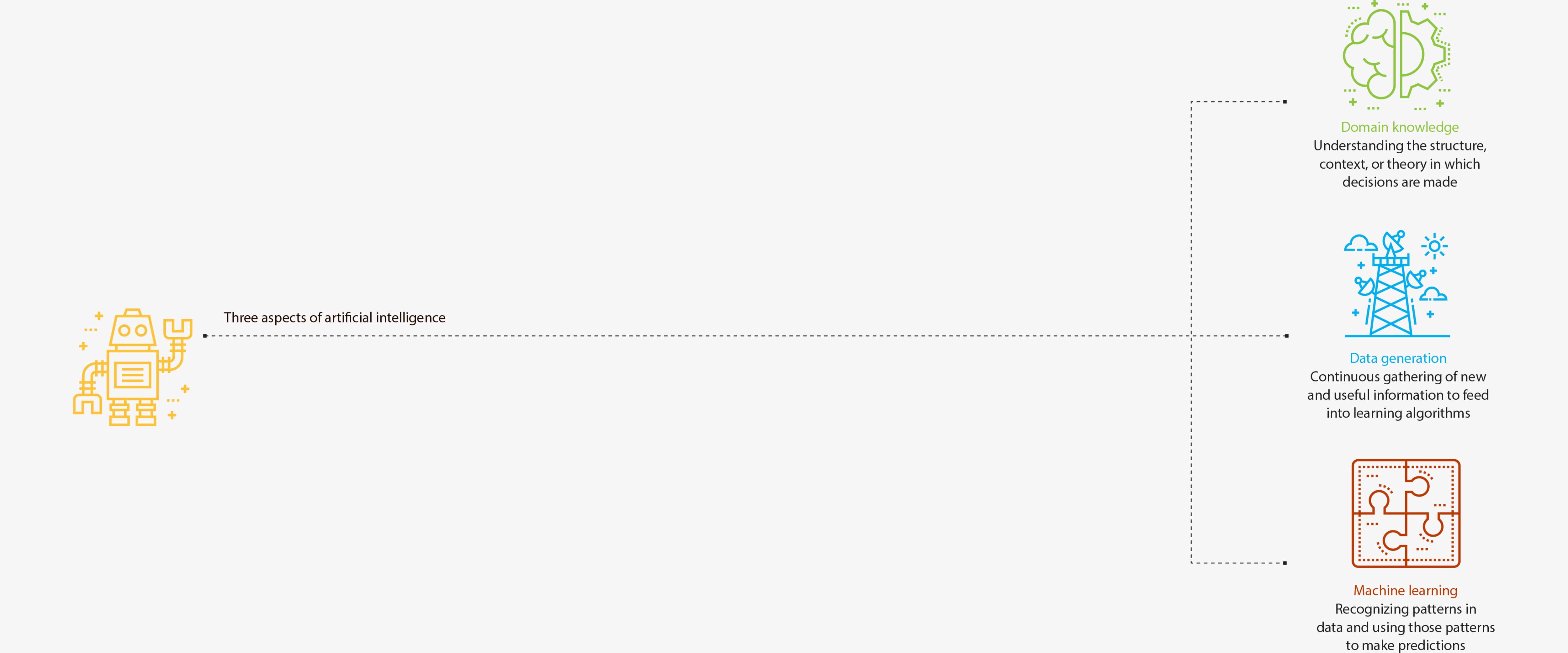

Taddy, 2019

Perhaps the best-developed area of AI is machine learning, which involves recognizing patterns in data and using those patterns to make predictions. Because computers can now process vast amounts of data through complex algorithms, those predictions can be honed to a high degree of accuracy. In this way, businesses can use their data to better anticipate customer or market behavior.

Take a question that confounds consumer-facing businesses such as online retailers and banks: Which customers are most likely to leave? The number of customers who leave, known as churn, is an important gauge of a company’s health, since customers who leave have to be replaced, often at great cost. If a company knew which customers to target with retention efforts, such as special offers or incentives, it could save considerable money.
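To make the churn question concrete, here is a minimal sketch that learns from past customers and ranks current ones by estimated risk of leaving. Everything in it is an assumption for illustration: the two features (months since last purchase, support tickets filed), the tiny dataset, the customer names, and the choice of plain logistic regression fit by gradient descent. Commercial cloud tools use their own, typically richer, models.

```python
import math

# Hypothetical training data: (months since last purchase, support tickets filed) -> 1 if churned.
history = [
    ((1.0, 0.0), 0), ((2.0, 1.0), 0), ((1.5, 0.0), 0), ((3.0, 0.0), 0),
    ((8.0, 3.0), 1), ((9.0, 2.0), 1), ((7.0, 4.0), 1), ((10.0, 1.0), 1),
]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(model, x):
    """Estimated probability that a customer with features x will churn."""
    bias, weights = model
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


def fit_logistic(data, lr=0.05, epochs=2000):
    """Fit a logistic regression by batch gradient descent on the log loss."""
    n = len(data)
    model = (0.0, [0.0] * len(data[0][0]))
    for _ in range(epochs):
        bias, weights = model
        grad_b, grad_w = 0.0, [0.0] * len(weights)
        for x, y in data:
            err = predict(model, x) - y  # gradient of the log loss w.r.t. the linear score
            grad_b += err
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
        model = (bias - lr * grad_b / n, [w - lr * g / n for w, g in zip(weights, grad_w)])
    return model


model = fit_logistic(history)
customers = {"ana": (1.0, 0.0), "ben": (6.0, 2.0), "cy": (9.5, 3.0)}
# Rank current customers from highest to lowest estimated churn risk.
ranked = sorted(customers, key=lambda name: predict(model, customers[name]), reverse=True)
print(ranked)
```

A retention team could then direct offers to the customers at the top of `ranked`. Ranking by risk is only a first step, though; as the title of Eva Ascarza’s paper among the sources at the end puts it, targeting high-risk customers might be ineffective.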

That sort of insight is becoming more accessible to businesses of widely varying types and sizes thanks to cloud-based tools developed and sold by some well-known tech companies.

“Imagine if all companies of the early 20th century had owned some oil, but they had to build the infrastructure to extract, transport, and refine that oil on their own,” write Chicago Booth’s Nicholas Polson and University of Texas’s James Scott in their 2018 book, AIQ: How People and Machines Are Smarter Together:

Any company with a new idea for making good use of its oil would have faced enormous fixed costs just to get started; as a result, most of the oil would have sat in the ground. Well, the same logic holds for data, the oil of the 21st century. Most hobbyists or small companies would face prohibitive costs if they had to buy all the gear and expertise needed to build an AI system from their data. But the cloud-computing resources provided by outfits such as Microsoft Azure, IBM, and Amazon Web Services have turned that fixed cost into a variable cost, radically changing the economic calculus for large-scale data storage and analysis. Today, anyone who wants to make use of their ‘oil’ can now do so cheaply, by renting someone else’s infrastructure.

Recognizing myriad uses for AI, the European Commission announced last year that between 2018 and 2020 it would invest €1.5 billion in AI research and applications, including in “the uptake of AI across Europe” via “a toolbox for potential users, with a focus on small and medium-sized enterprises, non-tech companies and public administrations.”

The inclusion of public administrations in the commission’s announcement is an acknowledgment that machine learning and AI could be applied anywhere—in the public or private sectors—that people have large quantities of data and need to make predictions to decide how best to distribute scarce resources, from criminal justice to education, health care to housing policy. The question is, should they be?

The dangers of algorithmic autonomy

It’s an axiom of computing that results are dependent on inputs: garbage in, garbage out. What if companies’ machine-learning projects come up with analyses that, while logical and algorithmically based, are premised on faulty assumptions or mismeasured data? What if these analyses lead to bad or ethically questionable decisions—either among business leaders or among policy makers and public authorities?

One of the appeals of AI as a supplement or substitute for human decision-making is that computers should be ignorant of the negative and often fallacious associations that bias people. Simply participating in society can make it easy for people to absorb ideas about race, gender, or other attributes that can lead to discriminatory behavior; but algorithms, in theory, shouldn’t be as impressionable.

Yet George Washington University’s Aylin Caliskan, University of Bath’s Joanna J. Bryson, and Princeton’s Arvind Narayanan find that machine-learning systems can internalize the stereotypes present in the data they’re fed. Applying an analytical technique analogous to an implicit association test (used to ferret out the unconscious connections people make between certain words and concepts) to a commonly used machine-learning language tool, the researchers find that the tool exhibits the same biases found in human culture. Compared with African American names, European American names were more closely associated with pleasant words than with unpleasant ones; compared with male names, female names were more closely associated with words that have familial connotations than with career-oriented words.

“Our findings suggest that if we build an intelligent system that learns enough about the properties of language to be able to understand and produce it, in the process it will also acquire historical cultural associations, some of which can be objectionable,” the researchers write.
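Caliskan and her coauthors call their measure the Word-Embedding Association Test. A minimal sketch of its effect-size calculation follows, assuming words are already represented as vectors: for each target word (for example, a name) it computes how much more similar that word is to one attribute set (pleasant words) than to another (unpleasant words), then compares the two groups of target words. The 2-D vectors are invented for illustration, and using the sample standard deviation is one reasonable reading of the published formula.

```python
import math
from statistics import mean, stdev


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def association(w, attrs_a, attrs_b):
    """s(w, A, B): mean similarity of w to attribute set A minus its mean similarity to B."""
    return mean(cosine(w, a) for a in attrs_a) - mean(cosine(w, b) for b in attrs_b)


def effect_size(targets_x, targets_y, attrs_a, attrs_b):
    """Difference in mean association between target sets X and Y, divided by the
    sample standard deviation of the associations of all target words."""
    s_x = [association(x, attrs_a, attrs_b) for x in targets_x]
    s_y = [association(y, attrs_a, attrs_b) for y in targets_y]
    return (mean(s_x) - mean(s_y)) / stdev(s_x + s_y)


# Made-up 2-D "embeddings"; real word vectors have hundreds of dimensions.
pleasant = [(1.0, 0.0), (0.9, 0.1)]
unpleasant = [(0.0, 1.0), (0.1, 0.9)]
names_x = [(0.8, 0.2), (0.7, 0.3)]
names_y = [(0.3, 0.7), (0.2, 0.8)]

d = effect_size(names_x, names_y, pleasant, unpleasant)
print(round(d, 2))
```

A positive effect size means the first set of names sits closer to the pleasant words than the second set does; swapping the two sets of names flips the sign.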

The concern that AI systems could be founded upon biased data goes beyond linguistic applications. A 2016 ProPublica investigation of an algorithmic tool used to assign “risk scores” to defendants during the bail-setting process finds that the tool was more likely to wrongly flag black defendants as being at high risk for recidivism than white defendants and, conversely, more likely to wrongly label white defendants as low risk. Although a follow-up analysis by the tool’s creator disputes ProPublica’s conclusions, the findings nonetheless illustrate the possibility that if authorities rely on AI to help make weighty decisions, the tools they turn to could be inherently partial.
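One reason such disputes arise is that a tool can look even-handed by one statistical measure and skewed by another. The sketch below, using invented records and generic group labels, computes for each group the two error rates at the heart of ProPublica’s finding: the false-positive rate (labeled high risk but did not reoffend) and the false-negative rate (labeled low risk but did reoffend).

```python
from collections import defaultdict

# Hypothetical records: (group, labeled_high_risk, reoffended). Illustrative only.
records = [
    ("group_a", True, False), ("group_a", True, True), ("group_a", True, False),
    ("group_a", False, False), ("group_a", False, True),
    ("group_b", True, True), ("group_b", False, False), ("group_b", False, True),
    ("group_b", False, True), ("group_b", False, False),
]


def error_rates(rows):
    """Return {group: (false_positive_rate, false_negative_rate)}.

    False positive: labeled high risk but did not reoffend.
    False negative: labeled low risk but did reoffend.
    """
    tally = defaultdict(lambda: {"fp": 0, "negatives": 0, "fn": 0, "positives": 0})
    for group, high_risk, reoffended in rows:
        t = tally[group]
        if reoffended:
            t["positives"] += 1
            t["fn"] += int(not high_risk)
        else:
            t["negatives"] += 1
            t["fp"] += int(high_risk)
    return {g: (t["fp"] / t["negatives"], t["fn"] / t["positives"]) for g, t in tally.items()}


for group, (fpr, fnr) in error_rates(records).items():
    print(f"{group}: false-positive rate {fpr:.2f}, false-negative rate {fnr:.2f}")
```

In this toy data, group_a bears more false positives and group_b more false negatives, the same shape of disparity ProPublica reported.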

The problem of skewed data is further compounded by the fact that while machine learning is typically useful for making predictions, it is less valuable for finding causal relationships. This can create issues when it’s used to facilitate decision-making.

Chicago Booth’s Sendhil Mullainathan and University of California at Berkeley’s Ziad Obermeyer offer the case of machine learning applied to medical data, which can identify that a history of acute sinusitis is predictive of a future stroke. But that doesn’t mean sinus infections lead to cardiovascular disease. Instead, there is a behavioral explanation for the association on a patient’s medical record: the decision to seek care.

“Medical data are as much behavioral as biological; whether a person decides to seek care can be as pivotal as actual stroke in determining whether they are diagnosed with stroke,” the researchers write.

A well-intentioned hospital administrator might attempt to use AI to help prioritize relevant emergency-room resources for patients at the highest risk of stroke. But she could end up simply prioritizing the patients most likely to seek treatment, exacerbating health-care inequality in the process.

“The biases inherent in human decisions that generate the data could be automated or even magnified,” Mullainathan and Obermeyer write. “Done naively, algorithmic prediction could then magnify or perpetuate some of [the] policy problems we see in the health system, rather than fix them.”
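The sinusitis example can be reproduced with a few lines of arithmetic. The cohort below is invented: every subgroup has the same 10 percent true stroke rate, but sinus infections and strokes enter the record only when a patient seeks care. Conditioning on a sinusitis record therefore raises the diagnosed stroke rate without changing the true one.

```python
from collections import namedtuple

Group = namedtuple("Group", "seeks_care sinusitis_on_record true_stroke count")

# Hypothetical cohort of 1,000 patients. True stroke risk is 10% in every subgroup, but
# only patients who seek care generate records (sinusitis visits or stroke diagnoses).
cohort = [
    Group(True, True, True, 20), Group(True, True, False, 180),
    Group(True, False, True, 30), Group(True, False, False, 270),
    Group(False, False, True, 50), Group(False, False, False, 450),
]


def rate(groups, outcome):
    """Share of patients (weighted by count) for whom outcome(group) is true."""
    total = sum(g.count for g in groups)
    return sum(g.count for g in groups if outcome(g)) / total


def true_stroke(g):
    return g.true_stroke


def diagnosed_stroke(g):
    # A stroke appears in the data only if the patient sought care.
    return g.true_stroke and g.seeks_care


with_sinusitis = [g for g in cohort if g.sinusitis_on_record]
without_sinusitis = [g for g in cohort if not g.sinusitis_on_record]

for label, outcome in [("true stroke", true_stroke), ("diagnosed stroke", diagnosed_stroke)]:
    print(f"{label}: {rate(with_sinusitis, outcome):.4f} with sinusitis record, "
          f"{rate(without_sinusitis, outcome):.4f} without")
```

A model trained on diagnoses would learn that a sinusitis record "predicts" stroke, when what it really tracks is the habit of seeking care.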

Similarly, businesses using algorithms to maximize revenue or profit could end up creating or exacerbating inequalities. Chicago Booth’s Sanjog Misra and Jean-Pierre Dubé worked with the job-search website ZipRecruiter to run online pricing experiments designed to learn about consumer demand, using machine learning to test pricing strategy. (For more about this research, see “Are you ready for personalized pricing?” Spring 2018.) Machine learning holds promise for online businesses in particular because companies tend to have a large volume of data about their customers and website visitors. A company can write software to automate the design of experiments and the collection of customer traits. Using machine learning, the company can train algorithms, such as the regularized regression Dubé and Misra used at ZipRecruiter, “to figure out how a customer described by one of those observable variables responds to prices or advertising,” Dubé says. Over and over, the computers can run experiments to test different ads and price levels with customers, learning through trial and error what works and what doesn’t. Then the company can automate the process of deciding which ads or prices to show to which customers. What was once guesswork, or at best a lengthy procedure, can now be done rapidly and accurately.
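A heavily simplified sketch of the experiment-then-target loop described above. It assumes hypothetical customer segments (small and large firms), made-up purchase rates at three tested prices, and a linear demand curve fit separately for each segment by one-feature ridge regression, which is one kind of regularized regression. Dubé and Misra’s actual model is more elaborate; this only shows how estimated price responses can be turned into segment-specific prices automatically.

```python
from statistics import mean

# Hypothetical experiment results: (segment, price shown, share of visitors who bought).
experiments = [
    ("small_firm", 50, 0.70), ("small_firm", 100, 0.45), ("small_firm", 150, 0.20),
    ("large_firm", 50, 0.80), ("large_firm", 100, 0.70), ("large_firm", 150, 0.60),
]


def ridge_line(xs, ys, lam):
    """Ridge regression of y on a single feature x: the intercept is unpenalized and the
    slope is shrunk toward zero by the penalty lam. Returns (intercept, slope)."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / (sxx + lam)
    return y_bar - slope * x_bar, slope


def best_prices(rows, lam=100.0):
    """For each segment, fit a linear demand curve and pick the tested price that
    maximizes expected revenue per visitor (price x predicted purchase rate)."""
    choice = {}
    for seg in sorted({s for s, _, _ in rows}):
        prices = [p for s, p, _ in rows if s == seg]
        shares = [q for s, _, q in rows if s == seg]
        intercept, slope = ridge_line(prices, shares, lam)
        # Only consider prices that were actually tested, to avoid extrapolating.
        choice[seg] = max(prices, key=lambda p: p * (intercept + slope * p))
    return choice


print(best_prices(experiments))
```

The less price-sensitive segment ends up with the higher price. Nothing in the loop looks at who is in each segment, which is the transparency problem Dubé raises.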

The issue, Dubé says, is transparency: “It becomes a complete black box.” Without humans involved in the process, there are few checks on what the computers decide.

“Let’s suppose your targeting algorithm, purely based on statistics, starts finding segments of people you want to charge really high prices to, or people who should get less information, and let’s assume the group you’re effectively excluding now turns out to be a protected class of consumers,” Dubé says.

What if a retailer’s machine-learning system determined that people who live in poor, minority-dominated neighborhoods are less price sensitive when buying baby formula? The company might not recognize a problem with its efficient pricing strategy, because the algorithm would have no information on a buyer’s race, only on income. But the result would be the same: racial discrimination.

Computer programmers could constrain the algorithm not to use race as a trait. “But there are plenty of other things that I could observe that would inadvertently figure out race, and that could be done in lots of ways, so that I would not be aware that it’s a black neighborhood, for instance,” Dubé says.
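Dubé’s point about proxies shows up in a toy audit. In this sketch, which uses invented shoppers, zip codes, and prices, the pricing rule sees only zip codes, yet average prices still differ by race because race and zip code are correlated. Grouping outcomes by a protected attribute the algorithm never used is one simple way to surface such a disparity.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical shoppers. The pricing rule below never looks at "race", only at "zip".
shoppers = [
    {"zip": "11111", "race": "white"}, {"zip": "11111", "race": "white"},
    {"zip": "11111", "race": "black"}, {"zip": "22222", "race": "black"},
    {"zip": "22222", "race": "black"}, {"zip": "22222", "race": "white"},
]

# Hypothetical prices an algorithm might learn per zip code from estimated price sensitivity.
price_by_zip = {"11111": 20.0, "22222": 25.0}


def average_price_by(attribute, people, prices):
    """Audit: average price charged, grouped by an attribute the pricing rule never used."""
    charged = defaultdict(list)
    for person in people:
        charged[person[attribute]].append(prices[person["zip"]])
    return {value: mean(amounts) for value, amounts in charged.items()}


print(average_price_by("race", shoppers, price_by_zip))
```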

Cornell’s Jon Kleinberg, University of Chicago Harris School of Public Policy’s Jens Ludwig, Booth’s Mullainathan, and Harvard’s Ashesh Rambachan argue that more equitable results from machine-learning algorithms can be achieved by leaving in factors such as race and adjusting the interpretation of predicted outcomes to compensate for potential biases. The researchers consider the theoretical case of two social planners trying to decide which high-school students should be admitted to college: an “efficient planner” who cares only about admitting the students with the highest predicted collegiate success, and an “equitable planner” who cares about predicted success and the racial composition of the admitted group. To help with their decision-making, both planners input observable data about the applicants into a “prediction function,” or algorithm, which produces a score that can be used to rank the students on the basis of their odds of success in college.

Because race correlates with college success, the efficient planner includes it in her algorithm. “Since the fraction of admitted students that are minorities can always be altered by changing the thresholds [of predicted success] used for admission, the equitable planner should use the same prediction function as the efficient planner,” the researchers find.
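The researchers’ argument can be illustrated with a toy admissions pool. In this sketch both planners use the same hypothetical scores from one prediction function and admit the same number of students; the equitable planner reaches a different racial composition purely by choosing a different admission threshold for each group. The names, groups, scores, and cutoffs are all invented.

```python
# Hypothetical applicants scored by one shared prediction function:
# (name, group, predicted probability of college success).
applicants = [
    ("a1", "majority", 0.91), ("a2", "majority", 0.88), ("a3", "minority", 0.86),
    ("a4", "majority", 0.84), ("a5", "minority", 0.80), ("a6", "majority", 0.79),
    ("a7", "minority", 0.75), ("a8", "majority", 0.70),
]


def admit(pool, thresholds):
    """Admit applicants whose score meets the threshold chosen for their group."""
    return [name for name, group, score in pool if score >= thresholds[group]]


def minority_share(pool, admitted):
    group_of = {name: group for name, group, _ in pool}
    return sum(group_of[name] == "minority" for name in admitted) / len(admitted)


# Efficient planner: a single cutoff on the shared score.
efficient = admit(applicants, {"majority": 0.82, "minority": 0.82})
# Equitable planner: same scores, same class size, different cutoffs by group.
equitable = admit(applicants, {"majority": 0.85, "minority": 0.78})

print(efficient, minority_share(applicants, efficient))
print(equitable, minority_share(applicants, equitable))
```

Because the adjustment happens at the decision stage, the equitable planner never needs a second, degraded prediction function.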

If the equitable planner instead used a race-blind algorithm, he could end up misranking applicants as a result of underlying biases in the criteria considered for admission. White students might receive more coaching for standardized tests than other students do. And “if white students are given more SAT prep,” the researchers explain, “the same SAT score implies higher college success for a black student than a white one.”
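A minimal numeric sketch of the SAT-prep point, under a loudly simplified assumption: an applicant’s SAT score equals underlying readiness plus a test-prep boost whose average size differs by group. The 50-point boost and the two applicants are made up. Ranking on raw scores (race-blind) puts the coached applicant first; subtracting each group’s expected boost reverses the order.

```python
# Hypothetical assumption: SAT = underlying readiness + test prep, and prep differs by group.
AVERAGE_PREP_BOOST = {"white": 50, "black": 0}  # made-up numbers, in SAT points

applicants = [
    {"name": "w", "group": "white", "sat": 1320},
    {"name": "b", "group": "black", "sat": 1300},
]


def race_blind_score(a):
    return a["sat"]


def race_aware_score(a):
    # Subtract the group's expected prep boost so the score reflects readiness, not coaching.
    return a["sat"] - AVERAGE_PREP_BOOST[a["group"]]


blind_order = [a["name"] for a in sorted(applicants, key=race_blind_score, reverse=True)]
aware_order = [a["name"] for a in sorted(applicants, key=race_aware_score, reverse=True)]
print(blind_order, aware_order)
```

A prediction function that includes race as an input could, in principle, learn such an adjustment from outcome data rather than having it hard-coded as here.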

The prosocial potential of AI

Despite concerns about biases, stereotypes, and transparency, AI could help businesses and society function better—inviting opportunities that are hard to ignore. In their study of the college-admissions scenario described above, Kleinberg and his coresearchers find that the right algorithm “not only improves predicted GPAs of admitted students (efficiency) but also can improve outcomes such as the fraction of admitted students who are black (equity).” Careful construction of the machine-learning algorithm could produce a freshman class that is both more qualified and more diverse.

Kleinberg, Ludwig, and Mullainathan, with Harvard postdoctoral fellow Himabindu Lakkaraju and Stanford’s Jure Leskovec, find in other research that judicial risk-assessment tools such as the one studied by ProPublica can be constructed to address numerous societal concerns. “The bail decision relies on machine learning’s unique strengths—maximize prediction quality—while avoiding its weakness: not guaranteeing causal or even consistent estimates,” the researchers write. Using data on arrests and bail decisions from New York City between 2008 and 2013, they find evidence that the judges making those decisions frequently misevaluated the flight risk of defendants (the only criterion judges in the state of New York are supposed to use in bail decisions) relative to the results of a machine-learning algorithm. The judges released nearly half the defendants the algorithm identified as the riskiest 1 percent of the sample—more than 56 percent of whom then failed to appear in court. With the aid of the algorithm, which ranked defendants in order of risk magnitude, the judges could have reduced the rate of failure to appear in court by nearly 25 percent without increasing the jail population, or reduced the jail population by more than 40 percent without raising the failure-to-appear rate.

What’s more, the algorithm could have done all this while also making the system more equitable. “An appropriately done re-ranking policy can reduce crime and jail populations while simultaneously reducing racial disparities,” the researchers write. “In this case, the algorithm is a force for racial equity.”
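The bail study’s two counterfactual policies (holding the jail population fixed while cutting failures to appear, or shrinking the jail population without raising the failure rate) both come from ranking defendants by predicted risk. The sketch below shows that arithmetic on eight invented defendants. It makes an assumption the real study could not: it treats the predicted probabilities as true, whereas actual outcomes are observed only for defendants who were released, a problem the researchers had to address.

```python
# Hypothetical defendants: (id, predicted probability of failing to appear, released by judge?).
defendants = [
    ("d1", 0.05, True), ("d2", 0.10, False), ("d3", 0.15, True), ("d4", 0.20, True),
    ("d5", 0.30, False), ("d6", 0.45, True), ("d7", 0.60, True), ("d8", 0.70, False),
]


def expected_fta_rate(released):
    """Expected failure-to-appear rate among released defendants, treating the
    predicted probabilities as if they were the true ones."""
    return sum(p for _, p in released) / len(released)


judge_released = [(d, p) for d, p, released in defendants if released]
judge_rate = expected_fta_rate(judge_released)

# Rank every defendant from lowest to highest predicted risk.
ranked = sorted(((d, p) for d, p, _ in defendants), key=lambda pair: pair[1])

# Policy 1: release as many people as the judge did, lowest risk first (same jail population).
same_jail = ranked[:len(judge_released)]

# Policy 2: release as many people as possible without exceeding the judge's FTA rate.
max_release = max(k for k in range(1, len(ranked) + 1)
                  if expected_fta_rate(ranked[:k]) <= judge_rate)

print(f"judge FTA rate {judge_rate:.3f}; re-ranked {expected_fta_rate(same_jail):.3f}")
print(f"judge jailed {len(defendants) - len(judge_released)}; "
      f"re-ranked policy jails {len(defendants) - max_release}")
```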

Stanford’s Sharad Goel, HomeAway’s Justin M. Rao, and New York University’s Ravi Shroff used machine learning to determine that New York could improve its stop-and-frisk policy by focusing on the most statistically relevant factors in stop-and-frisk incidents. The researchers note that between 2008 and 2012, black and Hispanic people were stopped in roughly 80 percent of such incidents, despite making up about half the city’s population, and that 90 percent of stop-and-frisk incidents didn’t result in any further action.

Focusing on stops from 2011 through 2012 in which the police suspected someone of possessing a weapon, the researchers find that 43 percent of stops had less than a 1 percent chance of finding a weapon. Their machine-learning model determined that 90 percent of weapons could have been recovered by conducting just 58 percent of the stops. And by homing in on three factors most likely to indicate the presence of a weapon—things such as a “suspicious bulge”—the police could have recovered half the weapons by conducting only 8 percent of the stops. Adopting such a strategy would result in a more racially equitable balance among those who are stopped.
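The stop-and-frisk result rests on ranking stops by their estimated chance of turning up a weapon and counting how far down that ranking one must go. This sketch does that for ten hypothetical stops with made-up probabilities; Goel, Rao, and Shroff estimated such probabilities from actual stop records, which is not reproduced here. Expected finds are computed by treating each estimated probability as the true chance of a hit.

```python
def share_of_stops_needed(hit_probs, target):
    """Smallest share of stops, taken in descending order of estimated hit probability,
    whose expected weapon finds reach `target` (a fraction) of all expected finds."""
    ranked = sorted(hit_probs, reverse=True)
    total = sum(ranked)
    found = 0.0
    for i, p in enumerate(ranked, start=1):
        found += p
        if found >= target * total:
            return i / len(ranked)
    return 1.0


# Hypothetical estimated probabilities that each of ten stops turns up a weapon.
stops = [0.30, 0.20, 0.10, 0.05, 0.02, 0.01, 0.01, 0.005, 0.005, 0.0]

low_yield = sum(p < 0.01 for p in stops) / len(stops)
print(f"{low_yield:.0%} of stops have under a 1% estimated hit rate")
print(f"90% of expected finds from {share_of_stops_needed(stops, 0.9):.0%} of stops")
print(f"50% of expected finds from {share_of_stops_needed(stops, 0.5):.0%} of stops")
```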

Results such as these hint at the potential of AI to improve social well-being. Used judiciously, it could improve outcomes without costly trade-offs—reducing jail populations without increasing crime, for instance. But as AI comes of age, those who develop and rely on it will have to do so cautiously, lest it create as many problems as it solves.

- Julia Angwin, Jeff Larson, Surya Mattu, and Lauren Kirchner, “Machine Bias,” ProPublica, May 2016.

- Eva Ascarza, “Retention Futility: Targeting High Risk Customers Might Be Ineffective,” Columbia Business School research paper, July 2017.

- Aylin Caliskan, Joanna J. Bryson, and Arvind Narayanan, “Semantics Derived Automatically from Language Corpora Contain Human-Like Biases,” Science, April 2017.

- Jean-Pierre Dubé and Sanjog Misra, “Scalable Price Targeting,” working paper, October 2017.

- “Artificial Intelligence for Europe,” Communication from the Commission to the European Parliament, the European Council, the Council, the European Economic and Social Committee and the Committee of the Regions, April 2018.

- Sharad Goel, Justin M. Rao, and Ravi Shroff, “Precinct or Prejudice? Understanding Racial Disparities in New York City’s Stop-and-Frisk Policy,” Annals of Applied Statistics, March 2016.

- Jon Kleinberg, Himabindu Lakkaraju, Jure Leskovec, Jens Ludwig, and Sendhil Mullainathan, “Human Decisions and Machine Predictions,” NBER working paper, February 2017.

- Jon Kleinberg, Jens Ludwig, Sendhil Mullainathan, and Ashesh Rambachan, “Algorithmic Fairness,” AEA Papers and Proceedings, May 2018.

- Sendhil Mullainathan and Ziad Obermeyer, “Does Machine Learning Automate Moral Hazard and Error?” American Economic Review, May 2017.
