Did Fake News Swing the Election for Trump?

There’s reason to doubt fake news handed Trump the election.

This past September, Facebook CEO Mark Zuckerberg said he’d been wrong to dispute the influence of fake news on the 2016 US presidential election, and research suggests there’s good reason for him to pay attention to the issue. Promoting fabricated news can be bad for business at social-media companies, according to Chicago Booth’s Ozan Candogan and University of Southern California’s Kimon Drakopoulos. Their research suggests that it’s critical for social-media platforms to help users identify fake news, since ignoring the problem could substantially weaken user engagement.

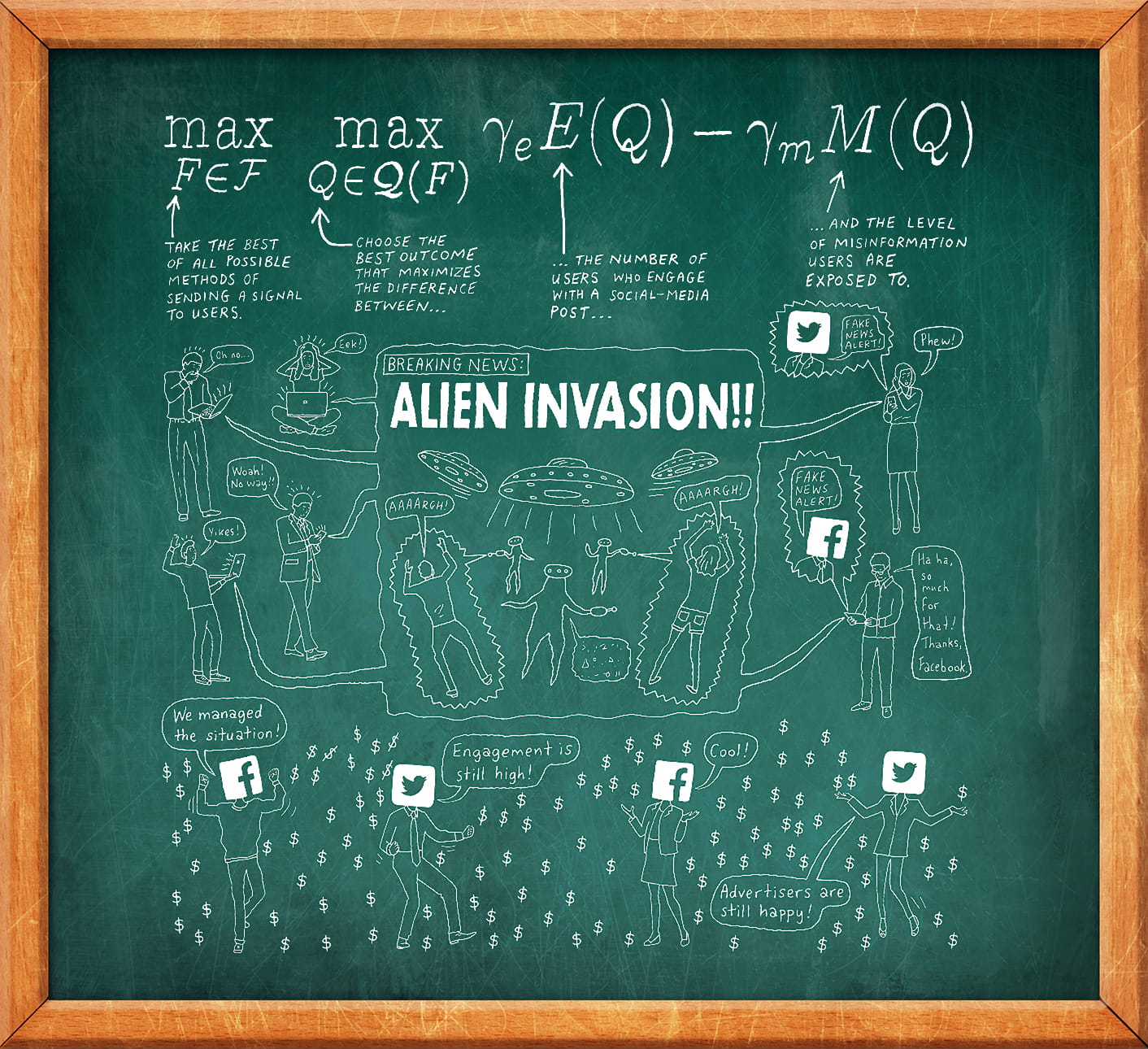

Many social-media websites struggle to maximize user engagement while minimizing the amount of misinformation shared and reshared. The stakes are high for Facebook, Twitter, and their rivals, which generate most of their revenue from advertising. Viral content leads to higher user engagement, which in turn leads to more advertising revenue. But content-management algorithms designed to maximize user engagement may inadvertently promote content of dubious quality—including fake news.

Candogan and Drakopoulos use modeling to test strategies for striking a balance between these conflicting goals. Their findings suggest that spreading misinformation poses a business risk for social-media platforms, as users might come to believe they can’t trust the information they see.

The researchers’ models assume platform operators can tell the difference between factual and fictitious posts. Under that assumption, the models show that when users aren’t warned about posts containing misinformation, engagement falls below the level reached when users are discouraged from clicking on the dubious material.

In the researchers’ models, clicks fell by more than half when platforms had a “no-intervention” policy. That result assumed users were relatively indifferent to misinformation. When users were instead assumed to be concerned about engaging with erroneous content, failing to intervene led to an even greater drop in engagement, with the number of clicks dwindling to zero. The research also explored a sample of 4,039 de-identified Facebook users, compiled as part of a larger network-analysis project at Stanford, for which the Stanford team collected information, including connections, from volunteer Facebook users.

“If the platform does not show any effort to help agents discern accurate content from inaccurate, i.e., uses a no-intervention mechanism, significant reduction in engagement can be expected,” Candogan and Drakopoulos write.

The research suggests that providing different messages about a post’s veracity to different types of users can help maximize engagement while significantly reducing fake news. The researchers identify two types of users: central users, who are connected to many friends or followers, and noncentral users, who are connected to fewer. For noncentral users, the pleasure they derive from clicking on the same things as their friends is more likely to be outweighed by a fear of engaging with misinformation. Therefore, a platform can achieve high engagement by discouraging noncentral users from engaging with possibly inaccurate content while taking a more hands-off approach with central users. On the other hand, platforms that make minimizing misinformation a top priority may find it beneficial to steer both types of users away from inaccurate content.
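The targeting logic above can be illustrated with a deliberately simplified toy model. This is a minimal Python sketch, not Candogan and Drakopoulos’s model. It assumes, hypothetically, that a user’s benefit from clicking grows linearly with their number of connections, that the platform’s only tool is a truthful accuracy label it either shows or withholds, and that a user clicks whenever that benefit exceeds the expected cost of engaging with misinformation. The names `ToyPlatform`, `network_outcomes`, `b`, `c`, `p`, and `warn_below` are all illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ToyPlatform:
    """Hypothetical toy model (not the Candogan-Drakopoulos model).

    b: benefit per connection from engaging with a post, a crude stand-in
       for the pleasure of clicking the same things as friends
    c: cost a user attaches to engaging with an inaccurate post
    p: prior probability that a post is inaccurate
    """

    b: float
    c: float
    p: float

    def clicks(self, degree: int, belief_inaccurate: float) -> bool:
        # Click when the social benefit exceeds the expected cost of
        # engaging with misinformation.
        return self.b * degree > self.c * belief_inaccurate

    def outcomes(self, degree: int, warn: bool) -> tuple[float, float]:
        """Return (click rate, rate of clicks on inaccurate posts) for one user."""
        if not warn:
            # No intervention: every post looks alike, so the user's
            # belief that a post is inaccurate stays at the prior p.
            click = self.clicks(degree, self.p)
            return (1.0, self.p) if click else (0.0, 0.0)
        # Truthful label: the user learns exactly which posts are inaccurate.
        accurate = float(self.clicks(degree, 0.0))
        inaccurate = float(self.clicks(degree, 1.0))
        return (1 - self.p) * accurate + self.p * inaccurate, self.p * inaccurate


def network_outcomes(model: ToyPlatform, degrees: list[int],
                     warn_below: float) -> tuple[float, float]:
    """Average engagement and misinformation when only users whose degree
    is below `warn_below` receive accuracy labels."""
    results = [model.outcomes(d, warn=d < warn_below) for d in degrees]
    engagement = sum(e for e, _ in results) / len(results)
    misinformation = sum(m for _, m in results) / len(results)
    return engagement, misinformation


if __name__ == "__main__":
    model = ToyPlatform(b=1.0, c=10.0, p=0.3)
    degrees = [1, 2, 2, 5, 50]  # hypothetical connection counts
    for name, cutoff in [("no intervention", 0),
                         ("warn noncentral (degree < 4)", 4),
                         ("warn everyone", float("inf"))]:
        eng, mis = network_outcomes(model, degrees, cutoff)
        print(f"{name}: engagement={eng:.2f}, misinformation={mis:.2f}")
```

With these hypothetical parameters, the script reports engagement of 0.40 and misinformation of 0.12 under no intervention. Labeling posts only for the three low-degree users raises engagement to 0.82 with misinformation unchanged, while labeling for everyone halves misinformation to 0.06 at an engagement of 0.76. Without labels, low-degree users cannot tell accurate posts from inaccurate ones and so avoid both. The toy model is much cruder than the paper’s: for instance, a label here cannot stop a user whose benefit exceeds `c` from clicking inaccurate content.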

The Equation: How to signal fake news on social media

Candogan and Drakopoulos argue that companies need not bother with such distinctions if users of a network tend to have a similar number of connections. But in highly heterogeneous systems, in which users have personal networks of widely varying size, the warning levels can have a significant impact on engagement, according to the researchers.

This would apply to Facebook, where, according to the company, the average user has 338 friends and the median user just 200. Twitter is even more extreme. One analyst estimated in 2013 that the median Twitter user had just one follower, compared with millions for pop star Beyoncé and US President Donald Trump.
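The gap between mean and median connection counts is what signals a heterogeneous network: a few accounts with enormous followings pull the mean far above the typical user, while in a network where everyone has a similar number of connections the two figures stay close. A minimal Python sketch using the standard `statistics` module; the helper `degree_summary` and the follower counts are hypothetical.

```python
from __future__ import annotations

from statistics import mean, median


def degree_summary(degrees: list[int]) -> tuple[float, float, float]:
    """Return the mean, the median, and their ratio for a list of connection counts.

    A ratio well above 1 signals a right-skewed (heterogeneous) network:
    a few highly connected accounts pull the mean far above the typical user.
    Assumes the median is nonzero.
    """
    avg = float(mean(degrees))
    mid = float(median(degrees))
    return avg, mid, avg / mid


if __name__ == "__main__":
    # Hypothetical follower counts: most accounts have a handful of followers,
    # one celebrity-style account has two million.
    followers = [0, 1, 1, 1, 2, 3, 5, 2_000_000]
    print(degree_summary(followers))
    # Facebook figures quoted above: mean 338 friends, median 200.
    print(338 / 200)
```

For the hypothetical follower list, the median is 1.5 while the mean exceeds 250,000. Facebook’s reported figures give a milder but still clear skew, a mean-to-median ratio of 1.69.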

A limitation of the research is the models’ assumption that the people using social networks and the algorithms running them know whether posts are true, false, or shaded somewhere in between. “This assumption is an approximation to reality, where a platform potentially has a more accurate estimate of the error than the agents,” Candogan and Drakopoulos write.

They suggest that further studies might look into trade-offs between promoting truth and promoting engagement in a dystopian scenario in which no one, neither networks nor users, knows which news is fake and which is real.

Ozan Candogan and Kimon Drakopoulos, “Optimal Signaling of Content Accuracy: Engagement versus Misinformation,” Working paper, October 2017.
