In the beginning there were stories.

People think in stories, or at least I do. My research in the field now known as behavioral economics started from real-life stories I observed while I was a graduate student at the University of Rochester. Economists often sneer at anecdotal data, and I had less than that—a collection of anecdotes without a hint of data. Yet each story captured something about human behavior that seemed inconsistent with the economic theory I was struggling to master in graduate school. Here are a few examples:

- At a dinner party for fellow economics graduate students, I put out a large bowl of cashew nuts to accompany drinks while waiting for dinner to finish cooking. In a short period of time, we devoured half the bowl of nuts. Seeing that our appetites (and waistlines) were in danger, I removed the bowl and left it in the kitchen pantry. When I returned, everyone thanked me. But, as economists are prone to do, we soon launched into analysis. A basic axiom of economic theory is that more choices are always preferred to fewer—because you can always turn down the extra option. So, we asked ourselves, how is it that we’re all happy now that the nuts are gone?

- The chair of the University of Rochester Economics Department (and one of my advisors), Richard Rosett, was a wine lover who had begun buying and collecting wine in the 1950s. He had purchased some choice bottles for as little as $5 that he could now sell to a local retailer for $100. Rosett had a rule against paying more than $30 for a bottle of wine, and he did not sell any of his old bottles. Instead, he would drink them on special occasions. In summary, he would enjoy his old bottles worth $100 each, but he would neither buy nor sell at that price. Therefore, his utility of one of those old bottles was both higher and lower than $100. Impossible.

- My friend Jeffrey and I were given two tickets to a professional basketball game in Buffalo, New York, normally a 75-minute drive from Rochester. On the day of the game, there was a snowstorm, and we sensibly decided to cancel our plans. But Jeffrey, who is not an economist, remarked, “If we had paid full price for those tickets, we would have gone!” As an observation about human behavior, he was right; but according to economic theory, sunk costs do not matter. Why is going to the game more attractive if we have higher sunk costs?

I had a long list of these stories and would bore my friends with new ones as I acquired them. But I had no idea what to do with these stories. A collection of anecdotes was not enough to produce a publishable paper, much less a research paradigm. And, certainly, no one could have expected these stories to someday lead to a Nobel Prize. In this lecture[i], I will briefly[ii] sketch the path that took me from stories to Stockholm.

The Kahneman and Tversky insight: Predictable bias

My first important breakthrough in moving from storytelling to something resembling science was my discovery of the work of Daniel Kahneman and the late Amos Tversky, two Israeli psychologists then working together at the Hebrew University of Jerusalem. Their early work was summarized in their brilliant 1974 paper in Science titled “Judgment under Uncertainty: Heuristics and Biases.” Psychologists use the term “judgments” for what economists often call estimates or forecasts, and “heuristics” is a fancy word for rules of thumb. Kahneman and Tversky had written a series of papers about how people make predictions. The phrase after the colon in the title succinctly captures the findings of these papers: people make biased judgments.

First, heuristics: faced with a complex prediction problem (say, “what is the chance this applicant will do well in graduate school?”), people often rely on simple rules of thumb to help them. An example is the availability heuristic: people judge how likely something is by how easy it is to recall instances of that type. But the key word in the subtitle, at least for me, is the last one: “biases.” The use of these heuristics leads to predictable errors. People guess that in the United States today, gun deaths by homicide are more frequent than gun deaths by suicide, although the latter are about twice as common. The bias comes because homicides are more publicized than suicides, and thus more available in memory.

The conclusion that people make predictable errors was profoundly important to the development of behavioral economics. Many economists were happy to grant that people exhibited “bounded rationality,” to use the term coined by the late Herbert Simon of Carnegie Mellon. But if bounded rationality simply led to random error, economists could happily go about their business assuming that people make optimal choices based on rational expectations. Adding an error term to a model does not cause an economist to break a sweat. After all, random errors cancel out on average. But if errors are predictable, departures from rational choice models can also be predictable. This was a crucial insight. It implies that, at least in principle, it would be possible to improve the explanatory power of economics by adding psychological realism.
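A tiny simulation illustrates the difference between random and predictable error. This is a minimal sketch and does not come from the lecture. The optimal choice of 100, the noise level, and the bias of 5 are arbitrary assumed numbers. With purely random error, the average mistake comes out close to zero. With a systematic bias, the average mistake stays near the bias however many choices are averaged.

```python
import random
import statistics

def average_error(n, bias, noise_sd, seed=0):
    """Mean deviation of n simulated choices from the optimal choice.

    Each choice = optimum + bias + Gaussian noise (all numbers hypothetical).
    bias=0 models purely random error; bias != 0 models a predictable error.
    """
    rng = random.Random(seed)
    optimum = 100.0  # hypothetical optimal choice, arbitrary units
    choices = [optimum + bias + rng.gauss(0.0, noise_sd) for _ in range(n)]
    return statistics.fmean(c - optimum for c in choices)

random_only = average_error(n=100_000, bias=0.0, noise_sd=10.0)
predictable = average_error(n=100_000, bias=5.0, noise_sd=10.0)
print(f"random error only: {random_only:+.2f}")
print(f"predictable bias:  {predictable:+.2f}")
```

Adding more observations shrinks the first number toward zero. The second number does not shrink, which is why a predictable bias cannot be treated as an ordinary error term.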

Kahneman and Tversky in 1979 provided a second conceptual breakthrough with prospect theory. Whereas their earlier research stream was about judgments, prospect theory was about decisions, particularly decisions under uncertainty. The theory is now nearly four decades old and remains the most important theoretical contribution to behavioral economics. It broke new ground in two ways. First, it offered a simple theory that could explain a bunch of empirical anomalies (and some of my stories), and second, it illustrated by example that economics needs two completely different types of theories: normative and descriptive. By normative here I mean a theory of what is considered to be rational choice (rather than a statement about morality). In contrast, a descriptive theory just predicts what people will do in various circumstances. The basic flaw in neoclassical economic theory is that it uses one theory for both tasks, namely a theory of optimization.

The von Neumann-Morgenstern expected utility theory is a classic example. It shows rigorously that if you want to satisfy some basic rationality axioms, you must maximize expected utility. And when I teach my MBA class in Managerial Decision Making, I urge students to make decisions accordingly. If you prefer an apple to an orange, you’d better prefer the chance p to win an apple to the same chance to win an orange! However, as Kahneman and Tversky demonstrated (and hundreds of follow-up papers have supported), people do not choose by maximizing expected utility. To predict choices, prospect theory works much better.

The lesson here is not that we should discard neoclassical theories. They are essential in both characterizing optimal choices and serving as benchmarks on which to build descriptive theories. Instead, the lesson is that when trying to build models to understand how people actually behave, we need a new breed of descriptive theories designed specifically for that task.

Understanding cashews: The planner and the doer

I have neither the tools nor the inclination to be a proper theorist and create mathematical models. My contributions to developing descriptive theories have been modest. My main offering has been the model of self-control that I developed with my first behavioral-economics collaborator, Santa Clara University’s Hersh M. Shefrin. The answer to the question “why were my dinner guests happy when the cashews were removed?” is obvious. We were worried that if the cashews were nearby, we would eat too many of them. In other words, we were concerned about the strength of our willpower.

Of course, there is nothing new in realizing that humans have self-control problems. Through figures from Adam and Eve to Odysseus, the ancients had much to say about weakness of will. By removing the nuts, we were using the same stratagem employed by Odysseus: commitment. When Odysseus sailed past the Sirens—whose songs were so delightful that men steered their boats toward them and crashed upon the rocks—Odysseus had his men tie him to the mast so he could not alter the course of the ship. Since the only danger we were facing was to our waistlines and appetites, we took less drastic action: we put the nuts in the pantry. Had they been more tempting, of course, we might have needed a more binding commitment, such as flushing them down the toilet.

Shefrin and I proposed a theory of self-control that models individuals as organizations with two components: a long-sighted “planner” and a myopic “doer.” In providing a two-self model, Shefrin and I were following in the footsteps of Adam Smith in The Theory of Moral Sentiments from 1759. He characterized self-control problems as a struggle between our “passions” and what he called our “impartial spectator.” In our model, the doer has fierce passions and cares only about current pleasure, whereas the planner is trying to somehow tame the passions and maximize the sum of doer utilities over time. The question is: How does the planner get the doer to be better behaved?


Our approach was to borrow an existing theoretical framework, namely a principal-agent model (where the boss [the principal] tries to get her agents [workers] to act properly), and apply it to this new context of intrapersonal conflict.[iii] Both prospect theory and the planner-doer model are examples of what might be called minimal departures from neoclassical economic theory. In our application, the planner is the principal and the doer is the agent. The planner has two tools at her disposal. First, she can employ commitment strategies where feasible. (These are similar to rules in an organizational context.) Had I been farsighted enough at that dinner party, I would have brought out the optimal number of nuts the first time and let us keep eating until the bowl was empty. The other possibility is to try to get the doer to exert willpower, and the only tool the planner has for this strategy is something resembling guilt. (This is similar to “incentives” in the organizational setting.) If the doer can be made to feel guilty enough, he will stop consuming before exhausting all available resources. The problem with this strategy is that it is costly: guilt acts as a tax on consumption, reducing the pleasure from each bite. Still, commitment strategies may be unavailable or too inflexible, so in general we will see people use a mix of the two tools: commitment and guilt.

Mental accounting

One tool that organizations use to deal with agency problems is an accounting system, which, according to my Merriam-Webster dictionary, is “the system of recording and summarizing business and financial transactions and analyzing, verifying, and reporting the results.” The accounting system allows the principal to monitor the spending activities of his managers (agents) and offer appropriate incentives. Individuals and households adopt a similar strategy to handle their own financial affairs, which I have called mental accounting. For example, a family may have (real or notional) mental accounts for various household budget items: food, rent, utilities, etc. When it comes to saving for retirement, putting money into an account that is explicitly designated as “retirement savings” appears to make the money in that account more “sticky,” compared, for example, to an ordinary savings account.[iv]

An important feature of both traditional financial accounting and mental accounting is that money is not treated as fungible. If one puts labels on specific budget categories and adds rules—that money from one account cannot be used for something that belongs in another category, for instance—the assumption of fungibility is destroyed. This is no minor matter. Theories as fundamental to economics as Modigliani’s life-cycle hypothesis start with the premise that people are smoothing consumption from a lifetime stock of wealth, full stop. In this model, wealth has no categories. But people and organizations do create categories. Everyone who has ever worked in a large organization has faced a crisis in which some end-of-year expenditure in one category must be postponed because that account is exhausted, while at the same time a spending spree is going on in another account that is overflowing with funds that will be lost at year’s end. Households are the same. If there was an expensive outing to the theater early in the week, a family is less likely to go out to dinner on the weekend but might decide to take a shopping trip to the mall.

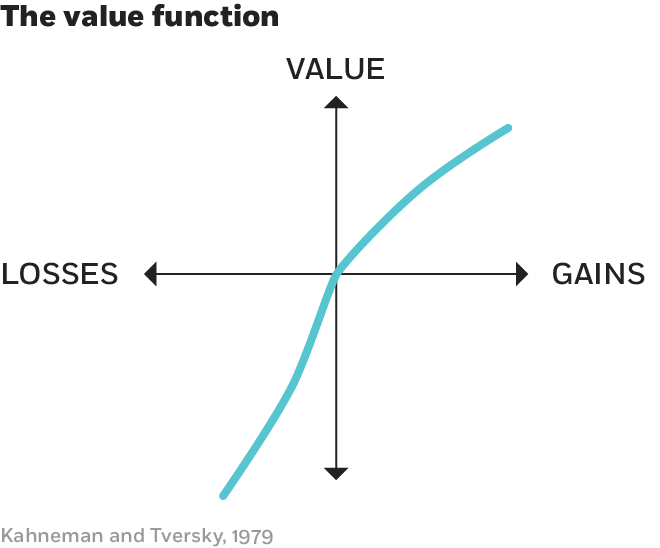

Mental accounting also plays a role in filling in some gaps in prospect theory. A key element of prospect theory is that what makes people tick is changes in wealth rather than levels. Traditionally, expected utility theory was based on a formulation U(W), where W is lifetime wealth. Kahneman and Tversky replace this utility of wealth function with a value function v(·) (see Figure 1) that depends on changes to wealth rather than levels. The idea is that we adapt to our current standard of living and then experience life as a series of gains and losses. But gains and losses relative to what reference point? Kahneman and Tversky did not fully answer this question.

Figure 1

Aside from the fact that the function is defined over gains and losses, the curve has two other notable features. First, both the gain and loss functions display diminishing sensitivity. The difference between $10 and $20 seems larger than the difference between $1,010 and $1,020. Second, the loss function is steeper than the gain function; losses hurt more than gains feel good (loss aversion). Notice that this feature immediately offers an explanation for the difference in the buying and selling prices illustrated by Professor Rosett’s wine-buying habits, what I have called the endowment effect: I demand more to sell an object than to buy it because giving it up would be coded as a loss.
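Both features of the value function can be checked numerically. The lecture does not specify a functional form. This sketch therefore assumes the power form commonly used in the literature: v(x) = x^α for gains and v(x) = −λ(−x)^β for losses. It uses Tversky and Kahneman's 1992 median estimates, α = β = 0.88 and λ = 2.25. Treat the parameter values as illustrative.

```python
def value(x, alpha=0.88, beta=0.88, lam=2.25):
    """Prospect-theory value of a change in wealth x (assumed power form).

    alpha, beta < 1 give diminishing sensitivity for gains and losses;
    lam > 1 makes losses loom larger than equal-sized gains (loss aversion).
    """
    if x >= 0:
        return x ** alpha
    return -lam * (-x) ** beta

# Diminishing sensitivity: the same $10 step matters less far from zero
small_step = value(20) - value(10)
large_step = value(1020) - value(1010)
print(f"v(20) - v(10)     = {small_step:.2f}")
print(f"v(1020) - v(1010) = {large_step:.2f}")

# Loss aversion: losing $100 hurts more than gaining $100 feels good
ratio = -value(-100) / value(100)
print(f"|v(-100)| / v(100) = {ratio:.2f}")
```

With α = β, the ratio of pain to pleasure for equal-sized changes is exactly λ. This steeper loss side is what the endowment effect relies on: giving up an owned object is evaluated as a loss.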

A key question I long pondered was: When is a cost coded as a loss? If I go down to the cafeteria to spend $10 for lunch at the usual price, the money I spend does not feel the same way as losing a $10 bill from my pocket. Normal transactions are hedonically neutral: I give up some money and get something back in return. However, suppose I am attending a sporting event and buy a sandwich that is priced at twice what I would usually pay. That does feel like losing. I use the term “transaction utility” to capture the pleasure or pain that a consumer receives from paying more or less than the expected price for some good.

This framework helps us understand why sunk costs influence behavior. When a family spends $100 to buy tickets in advance of some event, the purchase will create neither pleasure nor pain so long as the price is equal to the expected price. However, if there is a snowstorm, there is a $100 purchase that now has to be “recognized,” and it will then be experienced as a loss. This helps explain why someone can think that going to the event is a good idea—it eliminates the need to declare the original purchase as a loss.[v] It can also offer some insights into gigantic examples of the sunk-cost fallacy exhibited by governments. When I was thinking about these issues, the US government’s continued involvement in the Vietnam War seemed best explained in these terms. Organizational psychologist Barry M. Staw of the University of California at Berkeley in 1976 wrote an important paper on this theme titled “Knee-Deep in the Big Muddy,” a title he borrowed from the late folk singer Pete Seeger. And we all know people and organizations that have continued down the wrong path because they had “too much invested to quit.”

A related topic I think deserves more attention is what might be called mental accounting theory. Some of the types of questions I have in mind were addressed in a paper I wrote with Columbia’s Eric J. Johnson in 1990. One issue is the process that Kahneman and Tversky called “editing.” Suppose I gain $50 in the morning and $100 in the afternoon. Do I process that as v(50) + v(100) or v(150)? One possibility that Johnson and I considered is that people would edit events to make themselves as happy as possible, a hypothesis we called hedonic editing. In the example, the value function is concave for gains, so v(50) + v(100) > v(150), and people should segregate gains (treat each one as a separate event). In contrast, since the loss function is convex, losses should be integrated (combined).
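The hedonic-editing hypothesis reduces to a comparison between v(a) + v(b) and v(a + b). This is a minimal sketch of that comparison only. It assumes the same power-form value function with exponent 0.88 and loss-aversion coefficient 2.25, which are illustrative values not taken from the lecture. The function name `hedonic_frame` is hypothetical.

```python
def value(x, alpha=0.88, lam=2.25):
    """Assumed prospect-theory value function (same exponent for gains and losses)."""
    return x ** alpha if x >= 0 else -lam * (-x) ** alpha

def hedonic_frame(a, b):
    """Framing a 'hedonic editor' would choose for two outcomes a and b.

    Compares experiencing them separately, v(a) + v(b), with combining them,
    v(a + b), and returns whichever framing yields the higher total value.
    """
    segregated = value(a) + value(b)
    integrated = value(a + b)
    return "segregate" if segregated > integrated else "integrate"

print(hedonic_frame(50, 100))    # two gains  -> segregate
print(hedonic_frame(-50, -100))  # two losses -> integrate
```

The sketch encodes only the hypothesis. Mixed cases (one gain and one loss) are not covered, and the lecture reports that subjects did not in fact prefer to integrate losses.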

Although this hypothesis is theoretically appealing, our subjects soundly rejected it. In particular, contrary to the theory, people preferred to segregate rather than integrate losses. This was true for both small and large losses, and for related and unrelated events. Another question we investigated is the role of prior gains and losses. What happens when someone wins money early in an evening of gambling? We found evidence of a house money effect: when ahead in the game, people became more risk seeking for gambles that did not risk losing all of the prior winnings.[vi] This is another example of the need for better theories of how people choose a reference point and categorize gains and losses. (See work by Central European University’s Botond Koszegi and Harvard’s Matthew Rabin for one promising approach.)

Fairness

Stories and anecdotes about fairness began a project undertaken with Kahneman and Simon Fraser University’s Jack L. Knetsch during the 1984–85 academic year, when I was visiting Kahneman at the University of British Columbia. We turned the stories into hypothetical questions posed in telephone surveys to study a topic that had not received much attention in economics: What actions by firms do people consider to be “fair”? The research was made possible because we had access to a telephone-polling bureau sponsored by the Canadian government. In those days before Amazon Mechanical Turk, we had what we considered a great luxury: the ability to ask three versions of five questions each week to a random sample of Canadians, getting back about 100 responses to each version of our questions. This question was typical:

A hardware store has been selling snow shovels for $15. The morning after a large snowstorm, the store raises the price to $20.

Rate this action as: completely fair, acceptable, somewhat unfair, or very unfair.

In our sample, 82 percent of the respondents found the action somewhat unfair or very unfair. (In the paper, we collapsed the responses into just two categories: acceptable and unfair.)

Over the course of about six months, we asked hundreds of questions with multiple versions to make sure there was nothing special about, say, snow shovels. To be clear, our goal was not to say anything about what “is” or “should be” fair. Rather, we were only trying to discern what citizens consider to be unfair; that is, we were after a descriptive model of fairness. Put simply, what actions by firms make people angry? One key factor is whether people think the firm is taking away something to which people believe they are entitled—such actions are coded as losses. We learned that many subtleties influence whether an action is considered to be imposing a loss.

Charging a surcharge to use a credit card is considered more unfair than offering a discount to those who pay in cash. A wage increase of 5 percent during a period of 12 percent inflation is acceptable, but a 7 percent pay cut in a zero-inflation environment is unfair.

Although many of our findings might be considered intuitive, that intuition is absent in the perspectives of at least two groups: economists and business-school students. To an economist, raising the price of snow shovels after a snowstorm is the obviously correct decision, allowing the market to efficiently allocate the shovels to those who value them most. Students in my MBA classes agree. In a recent class to which I posed this question, 72 percent found raising the price to be acceptable. This is not surprising since that was the correct answer in their microeconomics class.

In contrast, smart businesses have learned these lessons. After a hurricane or other natural disaster, big-box retailers such as Walmart and Home Depot offer emergency supplies such as water and plywood at discount prices (or free) in the affected region. They are smartly playing a long game: maintaining a reputation for fair dealing after the crisis will ensure they receive a good share of the business to come when the process of rebuilding begins. Other businesses can stub their toes in such situations. For example, Uber allowed its surge-pricing algorithm to raise prices to high multiples of the usual price during a snowstorm in New York. They were later sued by the New York State attorney general for violating a law that bans “unconscionably excessive prices” and agreed to a settlement in which surge pricing is capped during emergency situations. For a company that is fighting regulatory battles to gain entry in markets all around the world, making people mad is unlikely to be a wise business strategy.


A natural question that interested Kahneman, Knetsch, and myself was whether people would be willing to punish firms that behaved in ways they considered unfair. We designed an experiment to test this hypothesis. One participant, the proposer, was given some money, say $10, and told she could offer any share of that money to another anonymous participant with whom she had been randomly paired. That participant, the responder, had two choices. He could accept the offer, in which case the proposer would get the rest, or reject it, in which case both participants would get nothing. In a world of rationally selfish participants, responders would take any positive offer, and proposers, knowing this, would maximize their earnings by offering the smallest possible amount, in our case 25 cents. In contrast, we found that offers of less than $2 were likely to be rejected. We also found, to our dismay, that experiments using this setup had already been published (and called the Ultimatum Game) by Max Planck Institute of Economics’ Werner Güth and colleagues in 1982.
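The payoff structure of the game and its "rational" benchmark fit in a few lines. This sketch rests on simplifying assumptions that are not in the lecture. The responder is modeled as a fixed acceptance threshold that the proposer knows, and amounts are in cents. Against a purely selfish responder, the payoff-maximizing offer is the minimum of 25 cents. Against a hypothetical responder who rejects anything below $2, it rises to $2.

```python
PIE_CENTS = 1000   # $10 to divide, in cents to avoid floating-point rounding
STEP_CENTS = 25    # smallest unit of an offer (25 cents in the experiment)

def payoffs(offer, threshold):
    """(proposer, responder) payoffs in cents.

    The responder is modeled as accepting any offer at or above a fixed
    threshold; a rejection leaves both players with nothing.
    """
    if offer >= threshold:
        return PIE_CENTS - offer, offer
    return 0, 0

def best_offer(threshold):
    """Offer that maximizes the proposer's payoff, assuming she knows the threshold."""
    offers = range(STEP_CENTS, PIE_CENTS + 1, STEP_CENTS)
    return max(offers, key=lambda offer: payoffs(offer, threshold)[0])

# Rationally selfish responder: accepts any positive offer
print(best_offer(threshold=STEP_CENTS))  # 25
# Hypothetical responder who rejects anything below $2
print(best_offer(threshold=200))         # 200
```

Real proposers do not know the responder's threshold. The gap between the 25-cent benchmark and observed behavior is what the fairness theories cited in the next paragraph try to explain.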

In the time since our experiments were run, fairness has become a topic of considerable interest to economists, with many new experimental findings and theories offered to explain the results, most notably by Rabin in 1993, University of California at Santa Barbara’s Gary Charness and Rabin in 2000, and University of Zurich’s Ernst Fehr and Ludwig Maximilian University of Munich’s Klaus M. Schmidt in 1999.

Mugs

Knetsch and John Sinden in 1984 published the first clean experimental test of the endowment effect. The design was delightfully simple. When subjects entered the lab, they were selected at random to receive either $3 or a lottery ticket. After completing some other tasks, they were given the option of trading their initial gift for the other one. In other words, everyone was asked, essentially: Would you rather have $3 or a lottery ticket? Since the initial allocations were done at random, it should not have mattered which item people received originally. To trade, subjects just had to raise their hand, so transaction costs were trivial. Nevertheless, there was a strong endowment effect. Of those initially given a lottery ticket, 82 percent chose to keep it, and 62 percent of those given the $3 would not give it up.

Kahneman and I thought this experiment was pretty convincing, but at a conference we attended organized by Stanford’s Alvin Roth in 1984, the experimental economists Charles Plott of the California Institute of Technology and Vernon Smith of Chapman University challenged these results. For them to be convinced, they said, it would be necessary to run an experiment with two features: (1) subjects had to have an opportunity to learn, and (2) they had to interact in markets. Kahneman and I joined forces with Knetsch to see if we could meet this challenge.

We had an additional goal with this experiment. Smith in 1976 had pioneered and advocated a methodology called the induced-value procedure. He used the method to test if people trade rationally when they participate in a market, and showed that they do. But we suspected that the induced-value markets were pretty unique, and that markets for regular goods would work differently.

In an induced-value market, participants trade tokens. Each participant is “induced” to have a specific value for a token by being told that they can trade a token held at the end of the experiment for the value they have been assigned. The idea is to see whether markets get people who have been assigned low values for their token to sell it to someone with a higher value but no token. And indeed, these induced-value markets work just as they do in an economics textbook. We suspected this might not be true for other objects for which people have not been assigned a value and instead have to decide what it is worth to them, the way they do when they go to the store.

An experiment conducted by Kahneman, Knetsch, and myself in 1990 had three stages. In the first stage, we assigned one token each at random to half of the 44 participants in the experiment, and everybody was assigned an induced value for a token. Subjects who were given a token were asked to indicate across a declining series of prices whether they would sell their token or keep it. Similarly, those without a token were asked at which prices they would be willing to buy one. Induced values and prices were set so participants were never indifferent about a trade. We ran this market three times to make sure the subjects understood the task. (Subjects were told that one round would be picked at random to count and that payoffs would be based on that round.) On each trial, both the price and number of trades were exactly as predicted by the induced supply-and-demand curves. Economics 101 was true![vii]
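The Economics 101 prediction that these induced-value markets matched can be computed from the induced values alone. Sort the holders' values into a supply curve and the nonholders' values into a demand curve, then count how many pairs would gain from trading. This is a minimal sketch with hypothetical values, since the lecture does not report the induced values actually used. The function name `predicted_trades` is illustrative.

```python
def predicted_trades(seller_values, buyer_values):
    """Competitive prediction for a market where each trader wants one token.

    seller_values: induced values of participants who hold a token.
    buyer_values: induced values of participants who do not.
    Returns (number of trades, (lowest, highest) market-clearing price),
    or (0, None) if no trade is worthwhile.
    """
    supply = sorted(seller_values)               # supply curve: lowest-value holders sell first
    demand = sorted(buyer_values, reverse=True)  # demand curve: highest-value buyers buy first
    q = 0
    # Keep adding trades while the next buyer values a token more than the next seller
    while q < min(len(supply), len(demand)) and demand[q] > supply[q]:
        q += 1
    if q == 0:
        return 0, None
    # The price must persuade the q-th seller and q-th buyer to trade,
    # but not the (q+1)-th pair (if there is one)
    low = max(supply[q - 1], demand[q]) if q < len(demand) else supply[q - 1]
    high = min(demand[q - 1], supply[q]) if q < len(supply) else demand[q - 1]
    return q, (low, high)

sellers = [1.0, 2.0, 3.0, 4.0]  # hypothetical induced values of token holders
buyers = [4.5, 3.5, 2.5, 1.5]   # hypothetical induced values of nonholders
print(predicted_trades(sellers, buyers))  # (2, (2.5, 3.0))
```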

We then switched from induced values to real goods. We distributed at random 22 coffee mugs embossed with the insignia of Cornell University (where the experiment was being run) to half of the subjects. Both mug owners and nonowners were instructed to inspect the mugs and decide whether they wanted to take one home with them. We then conducted exactly the same market that we did for the induced-value trials. In this case, the Coase theorem implies that, on average, we would observe 11 trades. That is because the mugs should have ended up in the hands of the 22 subjects who valued them most, and with random assignment, on average 11 of those people would not have received a mug in the initial assignment. Again, the market was run four times to allow for learning, with one trial picked at random to “count.”
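The benchmark of 11 trades follows from random assignment. Each subject has a one-in-two chance of receiving a mug, so on average 22 × 1/2 = 11 of the 22 highest-value subjects start without one. A minimal simulation confirms this figure. It assumes randomly drawn, distinct valuations that are independent of who gets a mug. The lecture does not describe how valuations were distributed, and the argument does not depend on it. The function name `coase_trades` is hypothetical.

```python
import random
from statistics import fmean

def coase_trades(n_subjects=44, n_mugs=22, rng=None):
    """Trades needed to move mugs from a random initial allocation to the
    n_mugs subjects who value them most.

    Valuations are drawn at random (assumed distinct and independent of who
    gets a mug); with zero transaction costs, every high-value subject who
    starts without a mug buys one from a low-value owner.
    """
    if rng is None:
        rng = random.Random()
    valuations = [rng.random() for _ in range(n_subjects)]
    owners = set(rng.sample(range(n_subjects), n_mugs))
    by_value = sorted(range(n_subjects), key=lambda i: valuations[i], reverse=True)
    top_valuers = set(by_value[:n_mugs])
    return len(top_valuers - owners)

rng = random.Random(2017)
simulated = fmean(coase_trades(rng=rng) for _ in range(20_000))
analytic = 22 * 22 / 44  # mean of the hypergeometric count
print(f"simulated mean trades: {simulated:.2f}, analytic: {analytic}")
```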

Unlike for the induced-value trials, the results were not as predicted by standard theory. Rather than 11 trades in each of the four trials, the numbers we observed were 4, 1, 2, and 2, respectively. The low volume of trading had a simple explanation: loss aversion. The median reservation price that mug owners demanded to give up their mug was between $4.25 and $4.75, whereas the median buying price for nonowners varied between $2.25 and $2.75. The same experiment was then repeated, this time with high-quality pens. The subjects who had (randomly) not received a mug got a pen in this trial. The results were similar: there were either 4 or 5 trades (not 11), and willingness to accept was roughly twice the willingness to pay.

We drew two primary conclusions from this study. First, induced-value experiments are special. In the real world, no one tells us what objects are worth to us, giving us the flexibility to misbehave. Second, the endowment effect is real, even with markets and opportunities to learn.

Finance

The efficient-markets hypothesis, first formulated by my Chicago Booth colleague Eugene F. Fama, dominates nearly all the research, both theoretical and empirical, in the field of financial economics. The hypothesis has two components that I like to refer to with the terms “no free lunch” and “the price is right.” The no-free-lunch component says that it is impossible to predict future stock prices and earn excess returns except by bearing more risk. The price-is-right component says that asset prices are equal to their intrinsic value, somehow defined.

Most economists thought that finance was the least likely field of economics where one could expect to find evidence to support behavioral theories. After all, the stakes are high, trading is frequent (which facilitates learning), and the markets are fiercely competitive. If there is anywhere in the economy where neoclassical economics should be an accurate description of reality, it should be on Wall Street. Of course, this fact made delving into the field extremely tempting. To me, finance looked like a big bowl of cashews, and I was hungry.

No free lunch

In the early 1980s, I taught a Belgian graduate student named Werner De Bondt, whom I managed to get interested in behavioral economics. I had met him when he spent a year at Cornell as an exchange student. I urged him to return to pursue a PhD, which he agreed to do on one condition: his real passion was for finance, so he wanted to do a thesis on that topic. I said, sure, we could learn the field together (mostly him teaching me).

When we began working together, the efficient-markets hypothesis was in its heyday. Writing in 1978, Michael Jensen, then at the University of Rochester, declared, “I believe there is no other proposition in economics which has more solid empirical evidence supporting it than the Efficient Market Hypothesis.” Ironically, this was the first sentence in an issue of the Journal of Financial Economics that was devoted to “anomalies.” These anomaly papers reported predictable returns after firm announcements such as earnings surprises, dividends, and stock splits, as well as peculiarities in the markets for stock options. Still, confidence in the hypothesis was strong.

One strand of research attracted De Bondt’s and my attention, namely the so-called value anomaly. Research by the late Benjamin Graham of the University of California at Los Angeles and others going back to the 1930s suggested that a simple strategy of buying stocks with low price-to-earnings ratios could “beat the market.” One snag with this research, which was then out of fashion, was that earnings was a number constructed by a firm’s accountants—and who was to know what mischief might lie buried in those calculations? Our goal was to do some test that would be free of any accounting.

We also had a more audacious goal. We wanted to predict a new anomaly. At that time, most of the anomalies published in the literature were embarrassing facts researchers had stumbled upon. For example, after an unexpectedly good earnings report, stock prices go up. No surprise there. But researchers had found that after one earnings surprise, the same firm continued to have positive earnings surprises in future quarters, each of which was rewarded with a rise in price. No one would have dared predict this result, but many studies had documented it. We wanted to predict something new, and do so using some of the psychology we had learned from Kahneman and Tversky.

Our idea was based on their paper “On the Psychology of Prediction.” One of their findings was that people are willing to make extreme forecasts based on rather flimsy evidence. Here is an example: one study asked participants to predict students’ grade-point averages (GPAs) based on some information. There were three conditions. In one condition, the participants were told each student’s percentile GPA; in a second, they were told the percentile score on a test of mental concentration; and in a third, they were told the percentile score on sense of humor. Not surprisingly, and quite reasonably, the participants were willing to make quite extreme forecasts of GPA when given a student’s percentile GPA. But somewhat shockingly, they were willing to make almost as extreme a forecast based on a test of sense of humor. I don’t know about you, but when I was in school, I never found that jokes helped my grades—and it was help that would have come in handy for me!

One way to describe this finding is that subjects overreacted to whatever information they happened to look at. This led to our hypothesis: might the same be true for stocks? Our test could not have been simpler. We (meaning Werner) combed through the historical data on stocks listed on the New York Stock Exchange (which at that time included most of the large companies) and ranked them by their past returns over formation periods of three to five years. Then we formed portfolios of the most extreme winners and losers (say, the top and bottom 35) and tracked them over an equally long period going forward.

The efficient-markets hypothesis makes a clear prediction here. The future returns on the two portfolios, the winners and the losers, should be the same, since you are not supposed to be able to predict changes in stock prices from past returns. In contrast, our prediction was that the losers would do better than the market and the winners would do worse. Why? We figured that investors would overreact to the stories surrounding the losers (to get into our sample of big losers, a stock had to accumulate multiple bad-news stories) and avoid them like the plague, which is what made them losers in the first place. But then, going forward, if a company merely performed a bit better than it had in the past, investors would be pleasantly surprised, and its price would go up. We expected the reverse for winners, which would be unable to live up to the high expectations that came with being a long-term winner. Our prediction was strongly confirmed. For the results based on five-year formation periods, the loser portfolio subsequently outperformed the market by a cumulative 46 percent over the following five years, while the winners underperformed by 19 percent. Similar results held for other tests.
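The portfolio-formation test described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not De Bondt’s actual code: the function names are hypothetical, portfolios are equal-weighted, and the returns are made-up numbers chosen only to show the mechanics.

```python
from statistics import mean


def form_extreme_portfolios(past_returns: dict[str, float], n: int) -> tuple[list[str], list[str]]:
    """Rank stocks by cumulative return over the formation period and
    return (losers, winners): the n worst and the n best performers."""
    ranked = sorted(past_returns, key=lambda stock: past_returns[stock])  # worst first
    return ranked[:n], ranked[-n:]


def excess_return(portfolio: list[str], future_returns: dict[str, float], market_return: float) -> float:
    """Equal-weighted cumulative return of the portfolio over the test period,
    minus the market's cumulative return over the same period."""
    return mean(future_returns[stock] for stock in portfolio) - market_return


# Hypothetical cumulative returns over the formation period and the test period.
past = {"A": -0.60, "B": -0.45, "C": 0.05, "D": 0.10, "E": 0.80, "F": 1.20}
future = {"A": 0.50, "B": 0.30, "C": 0.20, "D": 0.15, "E": 0.05, "F": 0.00}
market = 0.20

losers, winners = form_extreme_portfolios(past, n=2)
print(losers, winners)  # ['A', 'B'] ['E', 'F']
print(excess_return(losers, future, market), excess_return(winners, future, market))
```

Under the efficient-markets hypothesis the two excess returns should not differ systematically; the overreaction hypothesis predicts a positive number for the losers and a negative one for the winners, which is how these toy numbers were constructed.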

This result created a controversy among financial economists. The leading hypothesis offered to explain it was that De Bondt had made a programming error, and many Booth graduate students were assigned the job of finding it. But there were no programming errors. De Bondt, now at DePaul University, is meticulously careful.

Another proposed explanation for our result was that the loser portfolio was riskier than the winner portfolio. However, we had anticipated this critique and had calculated the conventional risk measure in use at the time, the capital asset pricing model beta; by this measure, the winners were riskier than the losers, deepening the puzzle. In a follow-up paper, we demonstrated that the loser portfolio had unusual (and attractive) risk characteristics. In months when the market went up, the loser portfolio had a high beta (1.39), and in months when the market went down, it had a low beta (0.88).viii This implies that the loser portfolio went up faster than the market in good months and fell more slowly than the market in bad months. That seemed pretty attractive to us.
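To make the up-market and down-market betas concrete, here is a minimal sketch that estimates beta separately for months when the market rose and months when it fell, using the ordinary least-squares slope, cov/var. The monthly returns are synthetic, built so that the portfolio’s true betas are 1.39 and 0.88; splitting months at zero and the function names are illustrative assumptions, not the procedure of the follow-up paper.

```python
def ols_beta(portfolio: list[float], market: list[float]) -> float:
    """OLS slope of portfolio returns on market returns: cov(p, m) / var(m)."""
    p_bar = sum(portfolio) / len(portfolio)
    m_bar = sum(market) / len(market)
    cov = sum((p - p_bar) * (m - m_bar) for p, m in zip(portfolio, market))
    var = sum((m - m_bar) ** 2 for m in market)
    return cov / var


def up_down_betas(portfolio: list[float], market: list[float]) -> tuple[float, float]:
    """Estimate beta separately for up-market months (m > 0) and down-market months (m <= 0)."""
    up = [(p, m) for p, m in zip(portfolio, market) if m > 0]
    down = [(p, m) for p, m in zip(portfolio, market) if m <= 0]
    beta_up = ols_beta([p for p, _ in up], [m for _, m in up])
    beta_down = ols_beta([p for p, _ in down], [m for _, m in down])
    return beta_up, beta_down


# Synthetic monthly market returns; the portfolio is built with beta 1.39 in
# up months and 0.88 in down months, plus a small constant intercept.
market = [0.04, -0.03, 0.02, -0.05, 0.06, -0.01]
portfolio = [0.002 + (1.39 if m > 0 else 0.88) * m for m in market]

beta_up, beta_down = up_down_betas(portfolio, market)
print(round(beta_up, 2), round(beta_down, 2))  # 1.39 0.88
```

A beta above 1 in up months and below 1 in down months is the asymmetry described in the text: the portfolio amplifies rallies and dampens declines.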

The price is right

There has been less attention paid to the price-is-right component of the efficient-markets hypothesis, in part because intrinsic value is so hard to measure. However, there are special cases in which this issue can be tested using the most basic principle in finance: the law of one price. This law says that identical assets must sell for the same price (up to the cost of trading). If the law were violated, it would be possible to make an unlimited amount of money through arbitrage: buy at the low price and simultaneously sell at the high price. What could stop this is what Harvard’s Andrei Shleifer and Chicago Booth’s Robert W. Vishny have called limits to arbitrage, for example, an inability to sell a security short. If you can’t arbitrage and people are irrational, then the law of one price can be violated.

I have studied two such cases. The first involves closed-end mutual funds, a special kind of fund in which investors invest by buying shares that trade on markets such as the New York Stock Exchange. The odd thing about these funds is that their shares often trade at prices that differ from the value of the underlying securities they own, a clear violation of the law of one price. The primary contribution of the paper by Charles Lee, Andrei Shleifer, and me was to show that the average discount on such funds is correlated with the difference in returns between small and large firms. We argued that since individual investors disproportionately hold shares in closed-end funds and stocks of small companies, the discounts on the funds can be explained by changes in individual investor sentiment. For reasons that remain a mystery to me, our paper did not sit well with my Booth colleague Merton Miller. His dissatisfaction led to a four-part debate in the Journal of Finance.

Harvard’s Owen Lamont and I wrote a considerably more obnoxious paper titled “Can the Market Add and Subtract?” which would undoubtedly have raised Miller’s hackles even more had he been alive to read it. We documented several cases in which a subset of a firm was valued in the market at more than the entire firm. The featured example involved the profitable technology firm 3Com during the heyday of the technology boom. The managers of 3Com were unhappy that their shares were not soaring like those of other technology companies, and the firm decided to divest itself of one of its sexiest assets, a company called Palm that it had previously acquired in a merger. On March 2, 2000, 3Com sold a fraction of its stake in Palm to the general public in what is called an equity carve-out. About 5 percent of the shares of Palm were sold in an initial public offering, and the remaining shares would be distributed to 3Com shareholders within a few months, after a routine approval from the Internal Revenue Service. At that point, 3Com shareholders would get approximately 1.5 shares of Palm for every 3Com share they held, and Palm would become an independent company.

Believers in the efficient-markets hypothesis would be mildly puzzled by 3Com’s strategy. After all, why should Palm be worth more outside of 3Com than in?ix Nevertheless, the strategy seemed to work. Upon the announcement of the plan, the shares of 3Com soared from about $40 to more than $100. And when the shares of Palm started trading after the IPO, the price went from $38 in the initial offering to $95 at the end of the day. Here is where the law of one price comes into play. Since the holder of each 3Com share would soon receive 1.5 shares of Palm, in a rational market the price of 3Com would have to be at least 1.5 times the price of Palm (the rest of 3Com could not be worth less than zero, since equity prices can never be negative). If you multiply $95 by 1.5, you get about $143, so 3Com shares should have sold for at least that much. But at the close of trading, the price of 3Com was just $82, meaning the market was valuing the remaining part of 3Com at minus $23 billion!
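The law-of-one-price arithmetic can be written out explicitly. The sketch below uses only the closing prices and the 1.5-share distribution ratio given above, and it works per 3Com share, since converting to the aggregate figure would require share counts that are not reproduced here. The function names are hypothetical.

```python
def lower_bound_parent_price(sub_price: float, ratio: float) -> float:
    """Minimum rational price of the parent: the subsidiary shares it will distribute,
    since the rest of the parent cannot be worth less than zero."""
    return ratio * sub_price


def implied_stub_value(parent_price: float, sub_price: float, ratio: float) -> float:
    """Market value, per parent share, of everything the parent owns besides the subsidiary."""
    return parent_price - ratio * sub_price


palm_close = 95.0       # Palm closing price on the first day of trading, from the text
three_com_close = 82.0  # 3Com closing price the same day, from the text
ratio = 1.5             # Palm shares distributed per 3Com share, from the text

print(lower_bound_parent_price(palm_close, ratio))               # 142.5
print(implied_stub_value(three_com_close, palm_close, ratio))    # -60.5
```

A negative stub value is the violation: buying one 3Com share delivered 1.5 Palm shares plus the rest of 3Com for less than the price of 1.5 Palm shares alone.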

How could this happen? There were limits to arbitrage. The smart trade would have been to sell shares of Palm short and buy an offsetting number of shares of 3Com, but it was hard to borrow the Palm shares needed to sell the stock short. This prevented arbitrageurs from quickly eliminating the disparity, which lasted for months. Given the difficulty of shorting, there may have been no free lunch, but a market that values part of a company at more than the whole company cannot possibly be called rational or efficient. There were two ways to obtain shares of Palm: buying them directly, or buying shares of 3Com and getting shares of Palm thrown in. For some reason, some investors preferred the “pure” Palm shares and were willing to pay a premium to avoid getting the rest of 3Com in the package.

Behavioral firms

Perhaps the greatest practitioner of the rational choice model was the late, great Chicago Booth price theorist Gary S. Becker. In his Nobel lecture, Becker described how he had tried to explore the boundaries of where the rational model can be applied, in work on marriage, divorce, crime, and even rational addiction, and he conceded: “My work may have sometimes assumed too much rationality, but I believe it has been an antidote to the extensive research that does not credit people with enough rationality.” Although we had a cordial relationship during the many years we overlapped at the University of Chicago, he probably put me in the camp of those who do not credit people with enough rationality. In an interview in the University of Chicago alumni magazine, Becker offered the following take on behavioral economics: “Division of labor strongly attenuates if not eliminates any effects [caused by bounded rationality]. . . . It doesn’t matter if 90 percent of people can’t do the complex analysis required to calculate probabilities. The 10 percent of people who can will end up in the jobs where it’s required.” I have called this the Becker conjecture. This conjecture makes a clear prediction: the behavior of firms will fit the rational choice model even if the behavior of consumers does not. Accordingly, many papers assume that firms are sophisticated and consumers are naive and then see what happens. But Becker’s conjecture is an empirical hypothesis: Do managers of firms behave like Beckerian agents?

This can be a difficult hypothesis to test, in part because many of the decisions made by firms are not observable. For example, an employee at Eastman Kodak inventedx and received a patent for the first digital camera, but Kodak managers did not think the idea was worth exploring. Many years later, Kodak declared bankruptcy because of the decline in the market for film. Was this bad luck seen through the lens of hindsight bias, or bad decision-making? It is hard to know. To do a systematic investigation of firm decision-making, we would need a data set containing numerous decisions and accompanying outcome data.

One domain in which this kind of research can be done is professional-sports data. For example, UC Berkeley’s David Romer wrote a 2006 paper titled “Do Firms Maximize?” that studied the decisions of National Football League teams in a class of situations that occur frequently (whether to “go for it” or punt on fourth down). He finds that teams make frequent errors (they punt too often). NFL teams are valued at over $1 billion, and the annual revenue for the league exceeds $16 billion, so this seems to qualify as “big business.” University of Pennsylvania’s Cade Massey and I have used the same domain to study a different type of decision, this time regarding the market for players.

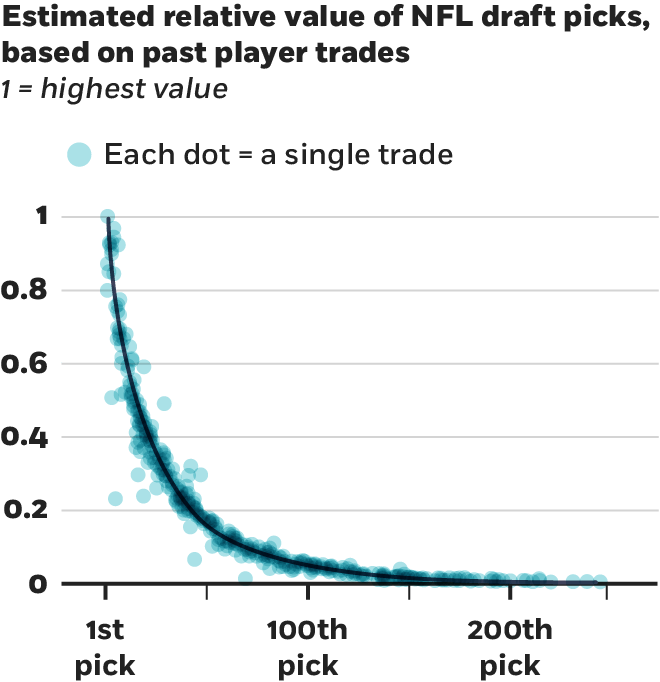

Figure 2

Massey and Thaler, 2012

Teams in the NFL select players using an annual draft. Teams take turns choosing eligible players (primarily those who played college football), with the team that had the worst record the previous year choosing first and the champion choosing last. The draft lasts seven rounds. Crucially for our study, picks can be, and frequently are, traded, so we were able to estimate the market value of picks using 25 years of data and over 1,000 trades. This analysis revealed that teams value picking early very highly. As shown in Figure 2, which shows the estimated relative price of picks based on past trades, the first pick is valued about the same as the seventh and eighth picks combined, or six second-round picks. Furthermore, under NFL rules, players chosen with early picks are paid much more than those chosen later, so early picks are expensive both in salary and in the opportunity cost of the later picks a team could have received by trading them away. We tested whether this market is efficient and soundly rejected that hypothesis.

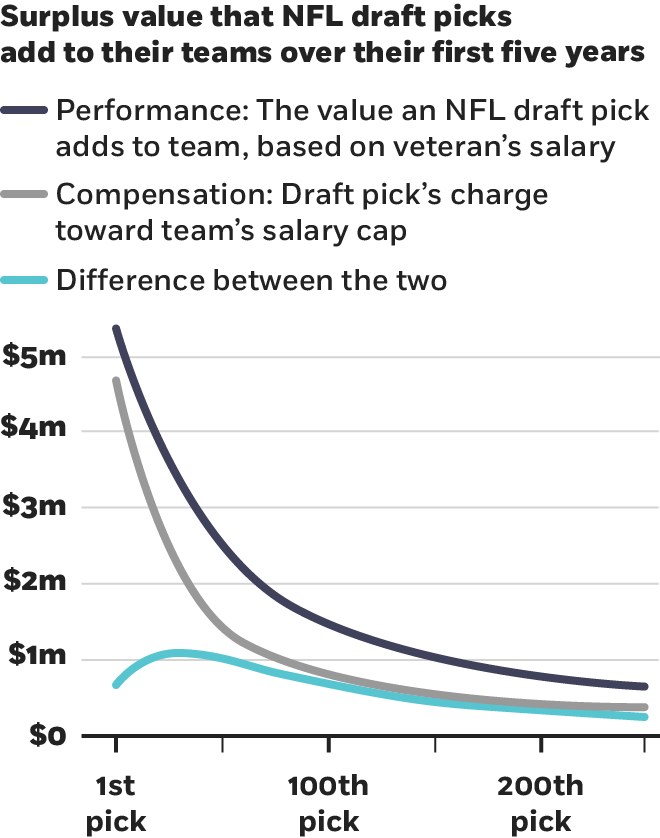

A key feature of the NFL is a salary cap: a limit on how much teams can spend on players. This is unlike European soccer or American baseball. The implication of the salary cap is that teams can only succeed by acquiring players that are bargains—that is, that provide greater player quality than their salary represents. The difference between the value a player provides to his team and his compensation is the surplus the team receives. In an efficient market, the surplus of players would have the same shape as the market value for picks shown in Figure 2, but this is not the case.

Figure 3

Massey and Thaler, 2012

Indeed, as shown in Figure 3, the surplus value of picks does not decline sharply during the first round. Instead, we find that the players who provide the highest surplus to their teams are those picked early in the second round, picks that teams value at less than 20 percent of the first pick, judging by the trades they make. We attribute this anomaly to overconfidence. Teams (that is, owners and managers) overestimate their ability to distinguish between great players and good players, and so put too high a price on picking early. Although teams seem to be gradually getting better at Romer’s fourth-down decision-making (though they are still dreadful at it), they show no signs of improving in their draft-pick trading. NFL owners and general managers, it seems, are not in Becker’s top 10 percent.xi

Nudging

My original interests in economics, including my PhD thesis on how to calculate the value of saving lives in cost-benefit analyses, were based on public-policy questions; but when I began studying the combination of psychology and economics, I deliberately stayed away from policy issues. I did so because I wanted behavioral economics to be perceived as primarily a scientific rather than political enterprise. Some of the original resistance to the research came from economists who feared that our findings would be used to support intrusive government interventions.

The research on self-control was particularly fraught with this danger. Many of the laissez-faire economic policies advocated by economists such as the late Milton Friedman were based on the notion of consumer sovereignty—that is, that no outsider can know an individual’s preferences better than the person himself. Furthermore, the concept of revealed preference proposed by the late Paul Samuelson of MIT stated that preferences are essentially defined by what we choose. If Alan chooses ice cream over salad, then it follows that he prefers ice cream to salad. It is the same line of thinking that led Becker and Booth’s Kevin M. Murphy to argue that because addicts choose to be addicts, they must prefer to be addicts.

How, then, can we make sense of my moving that bowl of cashews out of reach? Did my friends prefer to eat them or not? And if people sometimes do things that they later regret (having one too many drinks the night before, buying something on sale, etc.), what are their true preferences? Most provocatively, is it possible to help people make better choices, even if they are already fully informed (say about the relative merits of ice cream and salad)? I first decided to dip my toe into these waters by asking whether it was possible to help people save for retirement.

Save more tomorrow

If people are present-biased, they may have trouble saving for retirement. In the language of the planner-doer model, the planner may wish to save a higher proportion of current income, but she has trouble controlling the impulsive purchase decisions of a succession of doers who are tempted by myriad opportunities to buy immediate gratification. Are there ways to give our planners a little help?

In a short paper on this subject, I made a few suggestions, including increasing withholding taxes. This would have the effect of increasing the size of tax refunds (which are already substantial in the United States), and the evidence suggests that people find it easier to save when they receive a large windfall. Another suggestion was to change the default on defined-contribution savings plans. At that time, participants had to actively opt in to join such plans. I suggested changing the default so that if people did nothing, they would be automatically enrolled. This suggestion went unnoticed, but fortunately a Booth colleague of mine at the time, Brigitte C. Madrian, published a paper a few years later with Dennis F. Shea of the UnitedHealth Group demonstrating that this policy dramatically increased enrollments in a company that tried the idea. With the help of that evidence, the idea has now spread widely.

Madrian and Shea also highlighted a potential pitfall of automatic enrollment. If the default savings rate picked by the company is too low, some people will passively accept the default rate (perhaps taking it as a suggestion) and will end up saving less than they would have if left to their own devices. This compounded an existing problem: savings rates in 401(k) plans were generally too low to support an adequate retirement nest egg, especially for baby boomers who were late getting started. A few years later, I was asked by a large mutual-fund company to speak to the clients of its retirement-plan record-keeping business about ways to increase savings rates.

After some discussions with a former student, Shlomo Benartzi, now at UCLA, I suggested a plan we later named “Save More Tomorrow.” The idea was to think about the behavioral biases that were contributing to low savings rates and a reluctance to increase them, and then use those insights to design a plan to help. We based our plan on three observations. First, people have more self-control regarding future plans than immediate behavior. (We plan to start diets next month, not tonight at dinner.) Second, people are loss averse in nominal dollars, and thus resist any reduction in their take-home pay that would happen if they immediately increased their retirement savings rate. Third, retirement savers display strong inertia. As we will see below, they can go for periods of many years without making any changes to their plan. Understanding these causes of low savings rates, we asked how we could overcome them.

The plan we devised had components to address each of the three issues listed above. First, workers were asked whether they would be interested in joining a program that would increase their savings rate “in a month or two, not today.” Second, to mitigate loss aversion, the increases in the savings rate would be timed to coincide with pay increases, so workers would never see their pay go down. Third, once the worker joined, the program would remain in place until he actively opted out or his savings rate reached some target or maximum.
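The mechanics of the plan can be sketched as a simple schedule in which the savings rate steps up only when a raise arrives and stops at a cap. In this minimal illustration, the salary, the 4 percent annual raise, the 3-percentage-point step, and the 15 percent cap are all hypothetical values, and the sketch ignores taxes and opt-outs. Whether take-home pay really never falls depends on the raise being large enough relative to the step, so the check makes that condition explicit rather than assuming it.

```python
def save_more_tomorrow(salary: float, start_rate: float, step: float,
                       cap: float, raise_pct: float, n_raises: int) -> list[tuple[float, float, float]]:
    """Return (salary, savings_rate, take_home_pay) for the enrollment year and after each raise.

    The savings rate rises by `step` only when a raise arrives, and stops at `cap`.
    Taxes and opt-outs are ignored.
    """
    rate = start_rate
    schedule = [(salary, rate, salary * (1 - rate))]
    for _ in range(n_raises):
        salary *= 1 + raise_pct        # the pay raise arrives...
        rate = min(rate + step, cap)   # ...and the savings increase takes effect with it
        schedule.append((salary, rate, salary * (1 - rate)))
    return schedule


def take_home_never_falls(schedule: list[tuple[float, float, float]]) -> bool:
    """True if take-home pay is non-decreasing from one period to the next."""
    return all(later[2] >= earlier[2] for earlier, later in zip(schedule, schedule[1:]))


# Hypothetical inputs: $40,000 salary, 3.5% initial rate, +3 points per raise,
# 15% cap, 4% annual raises, four raises.
plan = save_more_tomorrow(40_000, 0.035, 0.03, 0.15, 0.04, 4)
for salary, rate, take_home in plan:
    print(f"salary {salary:>9,.0f}  rate {rate:5.1%}  take-home {take_home:>9,.0f}")
print(take_home_never_falls(plan))  # True
```

Each design element maps to one of the three observations: the first increase is deferred to a future raise, increases coincide with raises so take-home pay does not drop under these inputs, and the escalation continues by default until the cap.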

This idea also went nowhere until a small company in Chicago decided to try it. The company had a problem of low participation and low savings rates among its mostly low-paid workers, and it hired a financial adviser to meet personally with each employee and offer advice. Since savings rates were typically quite low, the adviser, Brian Tarbox, usually suggested that workers increase their contribution rates by 5 percentage points. Most workers turned this advice down on the grounds that they could not afford it. To these reluctant savers, Tarbox offered a version of Save More Tomorrow in which savings rates would increase 3 percentage points at each pay raise. About 80 percent of those offered this plan signed up, and after four pay raises, their savings rate had gone from 3.5 percent to 13.6 percent. (Those who accepted the advice to increase their savings rate by 5 percentage points plateaued at that new level.)

Benartzi and I presented a paper based on these and other findings at a conference held at the University of Chicago honoring my thesis advisor Sherwin Rosen, who had recently died quite prematurely. The UChicago economist Casey Mulligan, who maintains orthodox Chicago School views, was the discussant. Mulligan agreed that the results were impressive but asked a question I had not anticipated. “Isn’t this paternalism?” I stammered a bit and noted that the program was completely voluntary and thus absent any of the coercion normally associated with paternalistic policies such as Prohibition. “If this is paternalism,” I said, “it must be a different sort of paternalism. I don’t know, maybe we should call it libertarian paternalism.”

Libertarian paternalism is not an oxymoron

I mentioned this interaction to my friend and colleague at the University of Chicago Law School, Cass R. Sunstein, telling him that I thought the idea of libertarian paternalism seemed intriguing (albeit a mouthful to say). We wrote two papers on the topic: a five-page paper that I drafted and a 52-page paper on which Cass took the lead, by far my longest paper. In fact, it was so long that it seemed to me nearly the length of a book. At one of our lunch meetings, I may have used the word “book” carelessly in conversation, which can be dangerous when talking to Cass, who seems to be able to write books faster than I can read them. One thing led to another and, with me cruelly slowing Cass down to what he considered a snail’s pace, we eventually wrote the book.

When we were looking for a publisher for the book, we found the reaction to be rather tepid, probably in part because the phrase “libertarian paternalism” does not exactly roll off the tongue. Fortunately, one of the many publishers that declined to bid on the book suggested that the word “nudge” might be an appropriate title. And so, we published Nudge: Improving Decisions about Health, Wealth, and Happiness. In this roundabout way, a new technical term came into social-science parlance: a “nudge.”

The book Nudge is based on two core principles: libertarian paternalism and choice architecture. It is true that the phrase “libertarian paternalism” sounds like an oxymoron; but according to our definition, it is not. By “paternalism” we mean choosing actions that are intended to make the affected parties better off as defined by themselves. More specifically, the idea is to help people make the choice they would select if they were fully informed and in what Carnegie Mellon’s George Loewenstein calls a cold state, meaning unaffected by arousal or temptation. (For instance, we ask you today how many cashews you would like to eat during cocktails tomorrow.) Of course, deciding what choices satisfy this definition can be difficult, but the concept should be clear in principle. The word “libertarian” is used as an adjective to modify the word “paternalism,” and it simply means that no one is ever forced to do anything.

Choice architecture is the environment in which people make decisions. Anyone who constructs that environment is a choice architect. Menus are the choice architecture of restaurants, and the user interface is the choice architecture of smartphones. Features of the choice architecture that influence the decisions people make without changing either objective payoffs or incentives are called nudges. The default option in pension plans is a now-classic example of a nudge: joining is made easier, but no one is forced to do anything. To rational economic agents (whom we call econs), it should not matter whether one of the boxes in a yes-no choice is already checked in an online form, since the cost of clicking the other box is trivial. But in a world of humans, nudges matter, and good choice architecture, like good design, makes the world easier to navigate.xii Indeed, GPS maps are a perfect illustration of libertarian paternalism in action. Users choose a destination, the map suggests a route that the user is free to reject or modify, and those of us who are directionally challenged get to our desired destination with fewer unintended detours. Importantly, no choices are precluded. Both automatic enrollment (with easy opt-out) and Save More Tomorrow are nudges.

Much to our delight and surprise, nudging has become a global success. Governments, starting with Britain and later the US, have created behavioral-insight teams that explore policies informed by behavioral science and subject to rigorous tests, using randomized controlled trials wherever possible.xiii

When nudges are forever

There are many unanswered questions about the impact of nudges. One that has been difficult to evaluate is: How long do nudges last?

Take the case of a default option. At one extreme, one could imagine that the effect of the default is fleeting, as people learn relatively quickly that the default option is not to their liking. This would likely be the case when feedback is direct and immediate, such as when the default side dish at a frequently visited restaurant is one you dislike, and you ask for an alternative. At the other extreme, one can imagine defaults lasting years, perhaps decades. This scenario is plausible when there is little or no feedback, or when the feedback is infrequent.

A recent project with University of Miami’s Henrik Cronqvist and China Europe International Business School’s Frank Yu investigates this question, coincidentally in a Swedish context, namely the Premium Pension Plan that was introduced by the Swedish government in 2000. Briefly, this initiative created a defined-contribution component of the Swedish social-security system in which a portion of the payroll tax (2.5 percent of income) was assigned to be invested in the stock market. Citizens were given over 450 mutual funds to choose from in forming their individual portfolios. At the launch, the choice architecture employed two powerful nudges. First, a default fund was named. Anyone who failed to make an active choice was assigned to this fund, and investors could also actively choose it. Second, the government launched a large advertising campaign urging citizens to decline the default fund and form their own portfolios instead. Additionally, individual funds could and did advertise as well, trying to convince investors to pick their fund.

As reported in an earlier paper, in this battle of the nudges, the advertising campaign won. One-third of investors chose the default fund, while two-thirds decided to manage their own portfolios. But in the years after the launch, new entrants to the workforce, primarily young people and immigrants, have faced the same set of choices but without the second nudge to choose for themselves. In these subsequent years, the government ceased its advertising campaign urging do-it-yourself portfolio management, and private-sector advertising also dried up, since the number of people choosing had greatly diminished. The share of new entrants choosing the default fund immediately rose, and in recent years has been nearly 99 percent. Nudges can be powerful.

But here is the interesting question we can now answer: What happened to all those folks who were nudged to choose their own portfolios back in 2000? Remarkably, 97 percent are still “managing” their portfolios themselves. I use quotation marks for the word “managing” because they are not very active. Less than 10 percent of these investors make a trade in an average year. So while nearly everyone who joins the system now is invested in the default fund, nearly everyone who was nudged to eschew that fund 17 years ago, in favor of a do-it-yourself strategy, is sticking with that original “choice” and doing very little portfolio rebalancing.

The investors in the default fund are, perhaps unsurprisingly, equally passive. The default fund is essentially a global index fund with low fees, so investors can hardly be faulted for sticking with this sensible option. But in 2010, the managers of the fund received permission to add leverage, and in 2011 they decided to take advantage of that freedom to employ 50 percent leverage, meaning investors in the fund essentially had 150 percent exposure to the market. To put this in some perspective, had this strategy been in place during the financial crisis, the value of the portfolio would have fallen by 82 percent. This radical change in the fund seems to have gone unnoticed by nearly all investors. Although over 4 million people were (and still are) invested in this fund, the number reacting to the drastic (and in retrospect either lucky or brilliant) change in strategy was tiny. Again, people stick with defaults, even when the default changes dramatically.
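The leverage arithmetic is simple in a single period: borrowing an amount equal to 50 percent of the investors’ stake lets the fund hold 150 percent of that stake in stocks, while owing interest on the borrowed half. The sketch below assumes a one-period return and a hypothetical 2 percent borrowing rate; over many periods returns compound and the path matters, so it is not meant to reproduce the 82 percent figure above.

```python
def levered_return(market_return: float, leverage: float, borrow_rate: float) -> float:
    """One-period return on equity when the fund borrows `leverage` times its equity
    and invests everything in the market, so its exposure is (1 + leverage)."""
    exposure = 1 + leverage
    return exposure * market_return - leverage * borrow_rate


# Hypothetical numbers: 50 percent leverage and a 2 percent borrowing cost.
for r in (0.10, -0.10, -0.40):
    print(f"market {r:+.0%}  ->  fund {levered_return(r, 0.5, 0.02):+.1%}")
```

With no leverage the fund simply tracks the market; at 50 percent leverage both gains and losses are magnified by half, and the borrowing cost is subtracted in every period.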

In hindsight, these findings are not so surprising. Many people put their retirement savings on autopilot (perhaps wisely), so this is a domain in which strong inertia is to be expected. This will not always be the case, but when it is, choice architects need to be especially careful about their choice of nudges. As Sunstein and I frequently stress, there is often no alternative to nudging. The designers of this retirement savings plan had to choose some choice architecture (if not this one, then another), and any plan will have its potential pitfalls.xiv

Conclusion

Behavioral economics has come a long way from my initial set of stories. Behavioral economists of the current generation are using all the modern tools of economics, from theory to big data to structural models to neuroscience, and they are applying those tools to most of the domains in which economists practice their craft. This is crucial to making descriptive economics more accurate. As the last section of this lecture highlighted, they are also influencing public-policy makers around the world, with those in the private sector not far behind. Sunstein and I did not invent nudging—we just gave it a word. People have been nudging as long as they have been trying to influence other people.

And much as we might wish it to be so, not all nudging is nudging for good. The same passive behavior we saw among Swedish savers applies to nearly everyone agreeing to software terms, or mortgage documents, or car payments, or employment contracts. We click “agree” without reading, and can find ourselves locked into a long-term contract that can only be terminated with considerable time and aggravation, or worse. Some firms are actively using behaviorally informed strategies to profit from the lack of scrutiny most shoppers apply. I call this kind of exploitative behavior “sludge.” It is the exact opposite of nudging for good. But whether the use of sludge is a long-term profit-maximizing strategy remains to be seen. Creating a reputation as a sludge-free supplier of goods and services may be a winning long-term strategy, just like delivering free bottles of water to victims of a hurricane.

Although not every application of behavioral economics will make the world a better place, I believe that giving economics a more human dimension and creating theories that apply to humans, not just econs, will make our discipline stronger, more useful, and undoubtedly more accurate. And just as I am far from the first behavioral economist to win the Nobel Prize,xv I will not be the last.

Richard H. Thaler is Charles R. Walgreen Distinguished Service Professor of Behavioral Science and Economics at Chicago Booth and was the recipient of the 2017 Nobel Memorial Prize in Economic Sciences. This essay is adapted from his Nobel Prize Lecture, given December 8, 2017, in Stockholm, and is printed with permission from the Nobel Foundation. © The Nobel Foundation 2017

i I should say that this article is not a transcription of the lecture I delivered in Stockholm during Nobel week. There are two reasons for this. One is simple procrastination. The talk was on December 8, 2017. The written version was not “due” until January 31, 2018, which is surely an aspiration rather than a real deadline. Why do something now that you can put off until later? The other reason is more substantive. Talks and articles are different media. Readers interested in seeing the actual lecture can find it at Nobelprize.org.

ii This article is not intended to be comprehensive. My recent book Misbehaving: The Making of Behavioral Economics (Thaler, 2015) is more thorough, both in terms of contents and especially references.

iii Both prospect theory and the planner-doer model are examples of what might be called minimal departures from neoclassical economic theory. Both theories retain maximization, for example, as a useful simplifying assumption. This is intentional. Matthew Rabin, the most important behavioral-economics theorist of his generation, has advocated creating what he calls PEEMs: portable extensions of existing models (2013). The beta-delta model, pioneered by David Laibson (1997) and O’Donoghue and Rabin (1999), is a leading example. It has been a more widely adopted model of self-control than the planner-doer, in part because it is more easily “portable.”

iv That is not to say that there is no problem with “leakage.” For example, some 401(k) plans allow participants to borrow against their accounts. Also, when people change jobs, retirement accounts are often cashed out if the balance is relatively small.

v The same thinking applies to security purchases. Investors tend to sell winners more quickly than losers, although there are (at least in the US) tax benefits to selling the losers. See Shefrin and Statman (1985) and Odean (1998).

vi This hypothesis was supported at high stakes using choices from the game show Deal or No Deal. See Post et al. (2008).

vii This experiment also showed that neither income effects nor transaction costs were significant in this market.

viii A large literature followed the publication of these two papers. For a summary of the behavioral view on how to interpret excess returns to value stocks, see Lakonishok, Shleifer, and Vishny (1994). For the efficient-markets view, see (for one example) Fama and French (1993).

ix It might be possible to argue that 3Com managers were holding Palm back, but then the right thing to do would be to get rid of management, not sell off Palm. In any case, the really strange behavior is what comes next.

xi A recent paper by DellaVigna and Gentzkow (2017) finds similar evidence of poor firm decision-making in retail pricing.

xii In writing Nudge, we were strongly influenced by Don Norman’s (1988) classic book, The Psychology of Everyday Things. (In later editions, the title was changed to The Design of Everyday Things.)

xiii For progress reports, see Halpern (2015), Benartzi et al. (2017), and Szaszi et al. (2017).

xiv For a thoughtful discussion of how to go about choosing nudges to make people better off “as judged by themselves,” see Sunstein (2017).

xv Some of the former winners who made important contributions to the field include (in the order in which they were awarded the prize): Arrow, Allais, Sen, McFadden, Akerlof, Kahneman, Schelling, Ostrom, Diamond, Roth, Shiller, Tirole, and Deaton.

- Nicholas C. Barberis, “Thirty Years of Prospect Theory in Economics: A Review and Assessment,” Journal of Economic Perspectives, Winter 2013.

- Gary S. Becker and Kevin M. Murphy, “A Theory of Rational Addiction,” Journal of Political Economy, August 1988.

- Shlomo Benartzi, John Beshears, Katherine L. Milkman, Cass R. Sunstein, Richard H. Thaler, Maya Shankar, Will Tucker-Ray, William J. Congdon, and Steven Galing, “Should Governments Invest More in Nudging?” Psychological Science, June 2017.

- Victor L. Bernard and Jacob K. Thomas, “Post-Earnings-Announcement Drift: Delayed Price Response or Risk Premium?” Journal of Accounting Research, Spring 1989.

- Colin F. Camerer, “Prospect Theory in the Wild: Evidence from the Field,” in Choices, Values and Frames, eds. Daniel Kahneman and Amos Tversky, New York: Cambridge University Press, September 2000.

- Gary Charness and Matthew Rabin, “Social Preferences: Some Simple Tests and a New Model,” University of California at Berkeley mimeo, June 2000.

- Nai-Fu Chen, Raymond Kan, and Merton H. Miller, “Are the Discounts on Closed-End Funds a Sentiment Index?” Journal of Finance, June 1993.

- Ronald H. Coase, “The Problem of Social Cost,” Journal of Law & Economics, October 1960.

- Henrik Cronqvist and Richard H. Thaler, “Design Choices in Privatized Social-Security Systems: Learning from the Swedish Experience,” American Economic Review, May 2004.

- Henrik Cronqvist, Richard H. Thaler, and Frank Yu, “When Nudges Are Forever: Inertia in the Swedish Premium Pension Plan,” American Economic Review, forthcoming.

- Werner F. M. De Bondt and Richard H. Thaler, “Further Evidence on Investor Overreaction and Stock Market Seasonality,” Journal of Finance, July 1987.

- Stefano DellaVigna and Matthew Gentzkow, “Uniform Pricing in US Retail Chains,” Working paper, August 22, 2017.

- James Estrin, “Kodak’s First Digital Moment,” in Lens (blog), New York Times, August 12, 2015.

- Eugene F. Fama, “Efficient Capital Markets: A Review of Theory and Empirical Work,” Journal of Finance, May 1970.

- Eugene F. Fama and Kenneth R. French, “Common Risk Factors in the Returns on Bonds and Stocks,” Journal of Financial Economics, February 1993.

- Ernst Fehr and Klaus M. Schmidt, “A Theory of Fairness, Competition, and Cooperation,” Quarterly Journal of Economics, August 1999.

- Werner Güth, Rolf Schmittberger, and Bernd Schwarze, “An Experimental Analysis of Ultimatum Bargaining,” Journal of Economic Behavior & Organization, December 1982.

- David Halpern, Inside the Nudge Unit: How Small Changes Can Make a Big Difference, London: Ebury Publishing, August 2015.

- Chip Heath and Jack B. Soll, “Mental Budgeting and Consumer Decisions,” Journal of Consumer Research, June 1996.

- Michael Jensen, “Some Anomalous Evidence Regarding Market Efficiency,” Journal of Financial Economics, September 1978.

- Daniel Kahneman and Amos Tversky, “On the Psychology of Prediction,” Psychological Review, July 1973.

- ———, “Prospect Theory: An Analysis of Decision Under Risk,” Econometrica, March 1979. “The value function” diagram reproduced with permission of Blackwell Publishing Ltd. via Copyright Clearance Center.

- Daniel Kahneman, Jack L. Knetsch, and Richard H. Thaler, “Experimental Tests of the Endowment Effect and the Coase Theorem,” Journal of Political Economy, December 1990.

- Jack L. Knetsch and John A. Sinden, “Willingness to Pay and Compensation Demanded: Experimental Evidence of an Unexpected Disparity in Measures of Value,” Quarterly Journal of Economics, August 1984.

- Botond Koszegi and Matthew Rabin, “A Model of Reference-Dependent Preferences,” Quarterly Journal of Economics, November 2006.

- ———, “Reference-Dependent Risk Attitudes,” American Economic Review, September 2007.

- David Laibson, “Golden Eggs and Hyperbolic Discounting,” Quarterly Journal of Economics, May 1997.

- Josef Lakonishok, Andrei Shleifer, and Robert W. Vishny, “Contrarian Investment, Extrapolation, and Risk,” Journal of Finance, December 1994.

- Owen A. Lamont and Richard H. Thaler, “Can the Market Add and Subtract? Mispricing in Tech Stock Carve-Outs,” Journal of Political Economy, April 2003.

- Charles M. C. Lee, Andrei Shleifer, and Richard H. Thaler, “Investor Sentiment and the Closed-End Fund Puzzle,” Journal of Finance, March 1991.

- George Loewenstein, “Out of Control: Visceral Influences on Behavior,” Organizational Behavior and Human Decision Processes, March 1996.

- Brigitte C. Madrian and Dennis F. Shea, “The Power of Suggestion: Inertia in 401(k) Participation and Savings Behavior,” Quarterly Journal of Economics, November 2001.

- Cade Massey and Richard H. Thaler, “The Loser’s Curse: Overconfidence vs. Market Efficiency in the National Football League,” Management Science, April 2005.

- Don A. Norman, The Psychology of Everyday Things, New York: Basic Books, June 1988.

- Terrance Odean, “Are Investors Reluctant to Realize Their Losses?” Journal of Finance, October 1998.

- Ted O’Donoghue and Matthew Rabin, “Doing It Now or Later,” American Economic Review, March 1999.

- Thierry Post, Martijn J. van den Assem, Guido Baltussen, and Richard H. Thaler, “Deal or No Deal? Decision Making under Risk in a Large-Payoff Game Show,” American Economic Review, March 2008.

- Matthew Rabin, “Incorporating Fairness into Game Theory and Economics,” American Economic Review, December 1993.

- ———, “An Approach to Incorporating Psychology into Economics,” American Economic Review, May 2013.

- David Romer, “Do Firms Maximize? Evidence from Professional Football,” Journal of Political Economy, April 2006.

- Paul A. Samuelson, “A Note on the Pure Theory of Consumer’s Behavior,” Economica, February 1938.

- Hersh M. Shefrin and Meir Statman, “The Disposition to Sell Winners Too Early and Ride Losers Too Long: Theory and Evidence,” Journal of Finance, July 1985.

- Hersh M. Shefrin and Richard H. Thaler, “The Behavioral Life-Cycle Hypothesis,” Economic Inquiry, October 1988.

- Andrei Shleifer and Robert W. Vishny, “The Limits of Arbitrage,” Journal of Finance, March 1997.

- Adam Smith, The Theory of Moral Sentiments, London: A. Millar, April 1759.

- Vernon Smith, “Experimental Economics: Induced Value Theory,” American Economic Review, May 1976.

- Barry M. Staw, “Knee-Deep in the Big Muddy: A Study of Escalating Commitment to a Chosen Course of Action,” Organizational Behavior and Human Performance, June 1976.

- Cass R. Sunstein, “‘Better Off, as Judged by Themselves’: A Comment on Evaluating Nudges,” International Review of Economics, April 2017.

- Cass R. Sunstein and Richard H. Thaler, “Libertarian Paternalism Is Not an Oxymoron,” University of Chicago Law Review, Fall 2003.

- Barnabas Szaszi, Anna Palinkas, Bence Palfi, Aba Szollosi, and Balazs Aczel, “A Systematic Scoping Review of the Choice Architecture Movement: Toward Understanding When and Why Nudges Work,” Journal of Behavioral Decision Making, December 2017.

- Richard H. Thaler, “Mental Accounting and Consumer Choice,” Marketing Science, Summer 1985.

- ———, “Mental Accounting Matters,” Journal of Behavioral Decision Making, September 1999.

- ———, Misbehaving: The Making of Behavioral Economics, New York: W. W. Norton & Company, May 2015.

- ———, “Psychology and Savings Policies,” American Economic Review, May 1994.

- Richard H. Thaler and Shlomo Benartzi, “Save More Tomorrow™: Using Behavioral Economics to Increase Employee Saving,” Journal of Political Economy, February 2004.

- Richard H. Thaler and Eric J. Johnson, “Gambling with the House Money and Trying to Break Even: The Effects of Prior Outcomes on Risky Choice,” Management Science, June 1990.

- Richard H. Thaler and Sherwin Rosen, “The Value of Saving a Life: Evidence from the Labor Market,” in Household Production and Consumption, ed. Nestor E. Terleckyj, New York: National Bureau of Economic Research, May 1976.

- Richard H. Thaler and Hersh M. Shefrin, “An Economic Theory of Self-Control,” Journal of Political Economy, April 1981.

- Richard H. Thaler and Cass R. Sunstein, “Libertarian Paternalism,” American Economic Review, May 2003.

- ———, Nudge: Improving Decisions about Health, Wealth and Happiness, London: Penguin, April 2008.

- Amos Tversky and Daniel Kahneman, “Judgment under Uncertainty: Heuristics and Biases,” Science, September 1974.
